bsky.app/profile/maso...

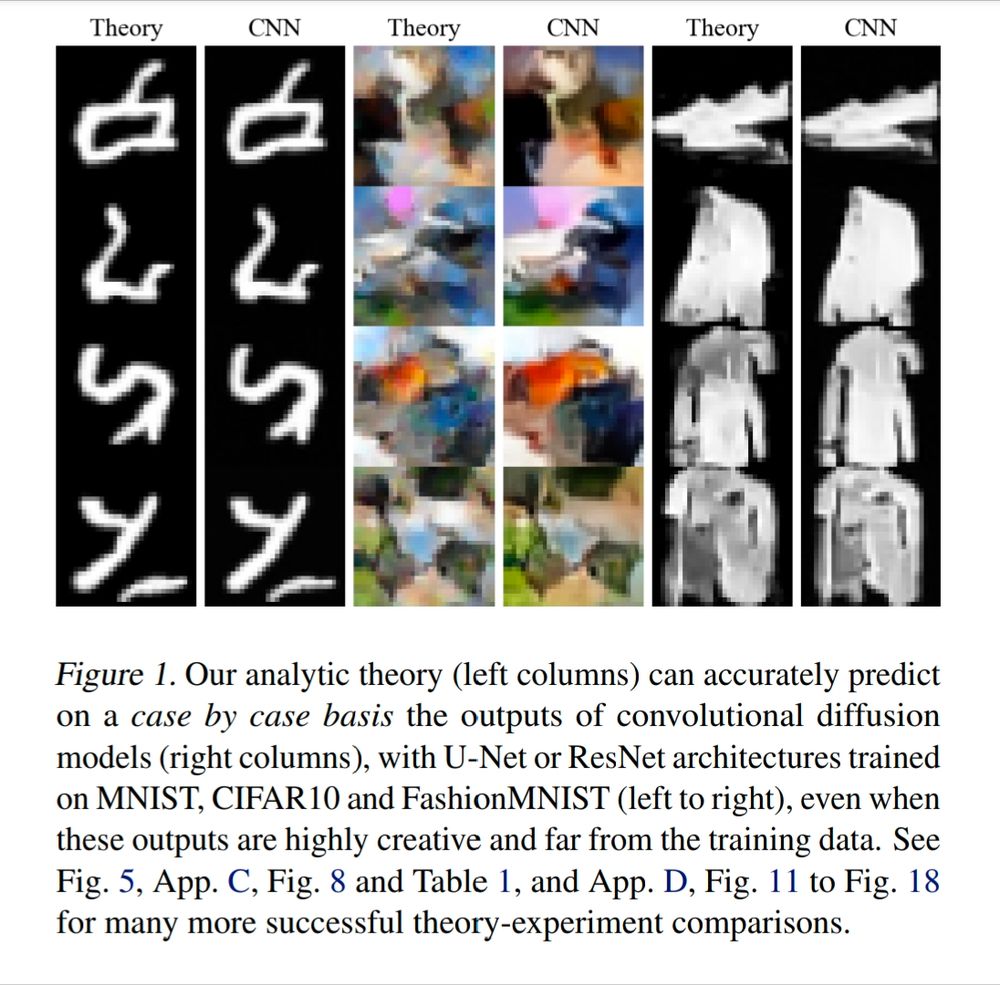

- read our paper (now with faces!): arxiv.org/pdf/2412.202...

- use our code + weights: github.com/Kambm/convol...

Code should be out soonish; we're working to bring the repo into a fit state for public consumption (currently it's a bit spaghettified). A Colab isn't in the works yet, but perhaps it should be…