Look at our new work "Chronoberg": an open-source dataset spanning 250 years of books with analysis of shifts in meaning & continual learning of LLMs:

arxiv.org/pdf/2509.22360

huggingface.co/datasets/spa...

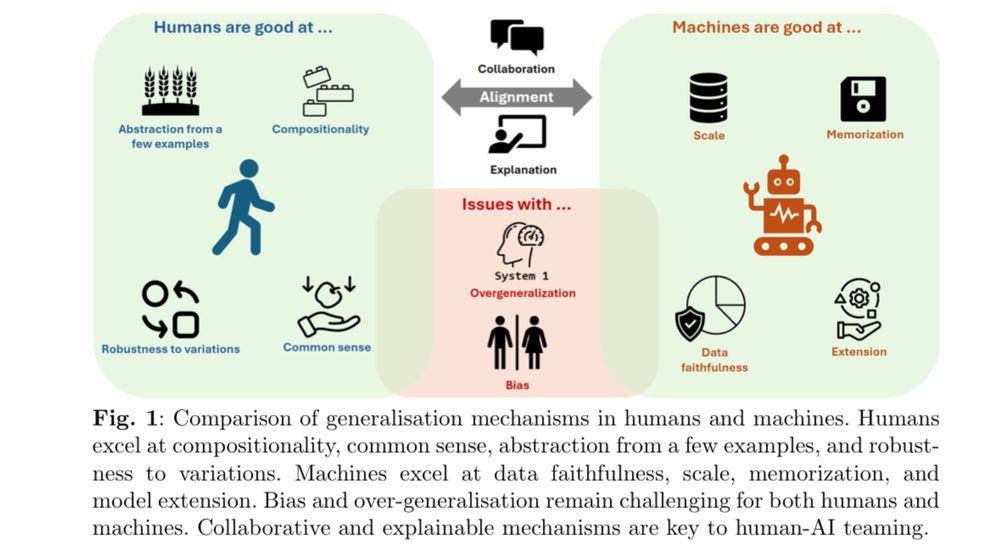

In short, we identified interdisciplinary commonalities & differences with respect to notions of, methods for, & evaluation of generalization

And of course our own oral presentation as well :)

Very excited to be the next program chair & to help ensure the success of CoLLAs in Romania next year!

Very excited about a fantastic program!

I’ll be around all week, but feel free to drop me a message or catch me directly at the debate on Monday morning, after our oral presentation on Tuesday, or in any of the poster sessions

Overwhelmingly happy to be part of RAI & continue working with the smart minds at TU Darmstadt & hessian.AI, while also seeing my new home at Uni Bremen achieve a historic success in the excellence strategy!

In "BOWL: A Deceptively Simple Open World Learner" we leverage BN stats to enable OoD detection & rapid active + continual learning!

arxiv.org/abs/2402.04814

🚀 now accepted @collasconf.bsky.social

🔥In “Scaling Probabilistic Circuits via Data Partitioning” - accepted at #UAI25 - we unify the different settings through aggregation of learned client distributions: arxiv.org/abs/2503.08141

arxiv.org/abs/2402.06434

-> we introduce continual confounding + the ConCon dataset, where confounders over time render continual knowledge accumulation insufficient ⬇️

I can’t travel myself due to family medical reasons 😢, but we have an exciting program with amazing speakers on #continuallearning & #causality:

www.continualcausality.org/program/

In our new collaborative paper w/ many amazing authors, we argue that “Continual Learning Should Move Beyond Incremental Classification”!

We highlight 5 examples to show where CL algos can fail & pinpoint 3 key challenges

arxiv.org/abs/2502.11927

In turn, they allow us to distill a set of first technical considerations & recommendations to initiate the next wave of research to combat some of the worst pitfalls. 3/4

“The Cake that is Intelligence and Who Gets to Bake it: An AI Analogy and its Implications for Participation” - arxiv.org/abs/2502.03038

🍰We extend Yann LeCun’s AI cake analogy to relate socio-technical outcomes to the AI life-cycle!

More details below ⬇️ 1/4

Read about the different ways generalisation is defined, parallels between humans & machines, methods & evaluation in our new paper: arxiv.org/abs/2411.15626

co-authored with many smart minds as a product of Dagstuhl 🙏🎉

App deadline Dec. 10: www.uni-bremen.de/en/universit...

Please share or reach out!

#MachineLearning #AI #PhD
