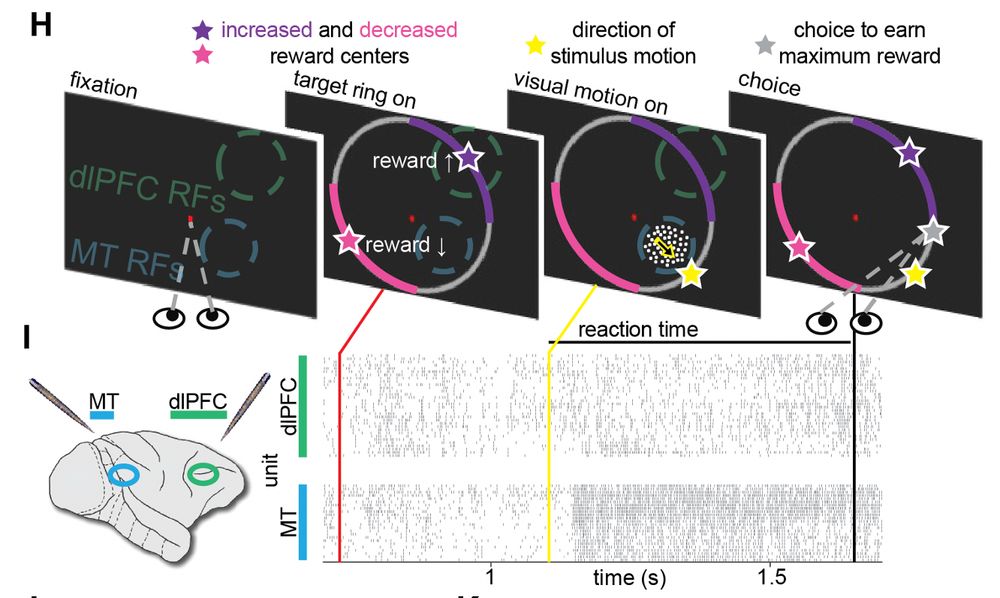

Keon and Doug Ruff asked how brains do that. 2/

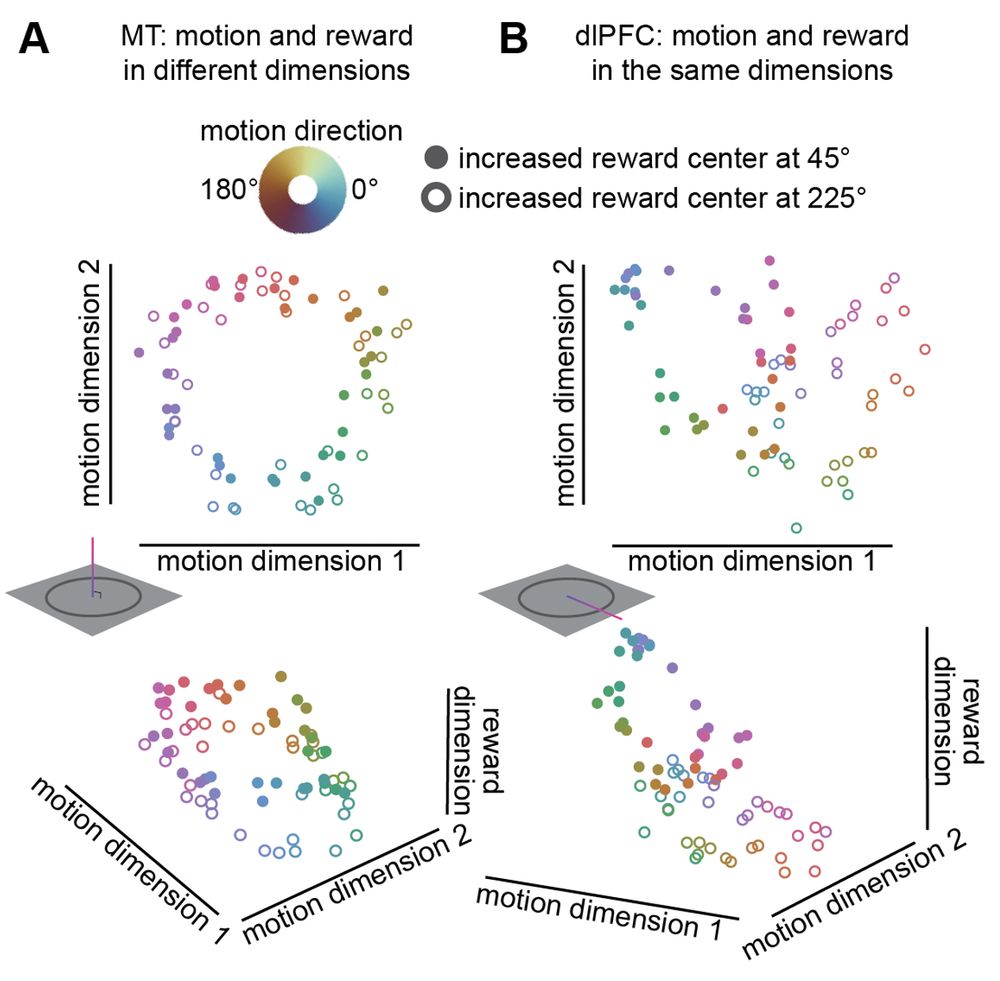

(illustrations from the awesome bioart.niaid.nih.gov)