https://marian-nmt.github.io

By giving models more "time to think," Llama 1B outperforms Llama 8B in math, beating a model 8x its size. The full recipe is open-source!

https://simonwillison.net/2024/Dec/15/phi-4-technical-report/

arxiv.org/abs/2412.04984

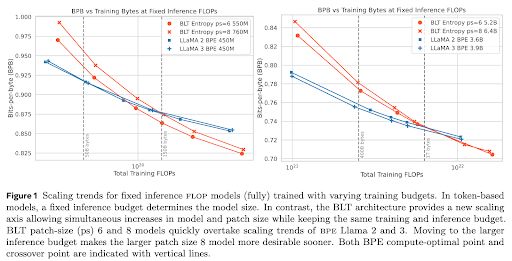

Paper 📄 dl.fbaipublicfiles.com/blt/BLT__Pat...

Code 🛠️ github.com/facebookrese...