Results? We show that the criteria generated by EvalAgent (EA-Web) are 🎯 highly specific and 💭 implicit.

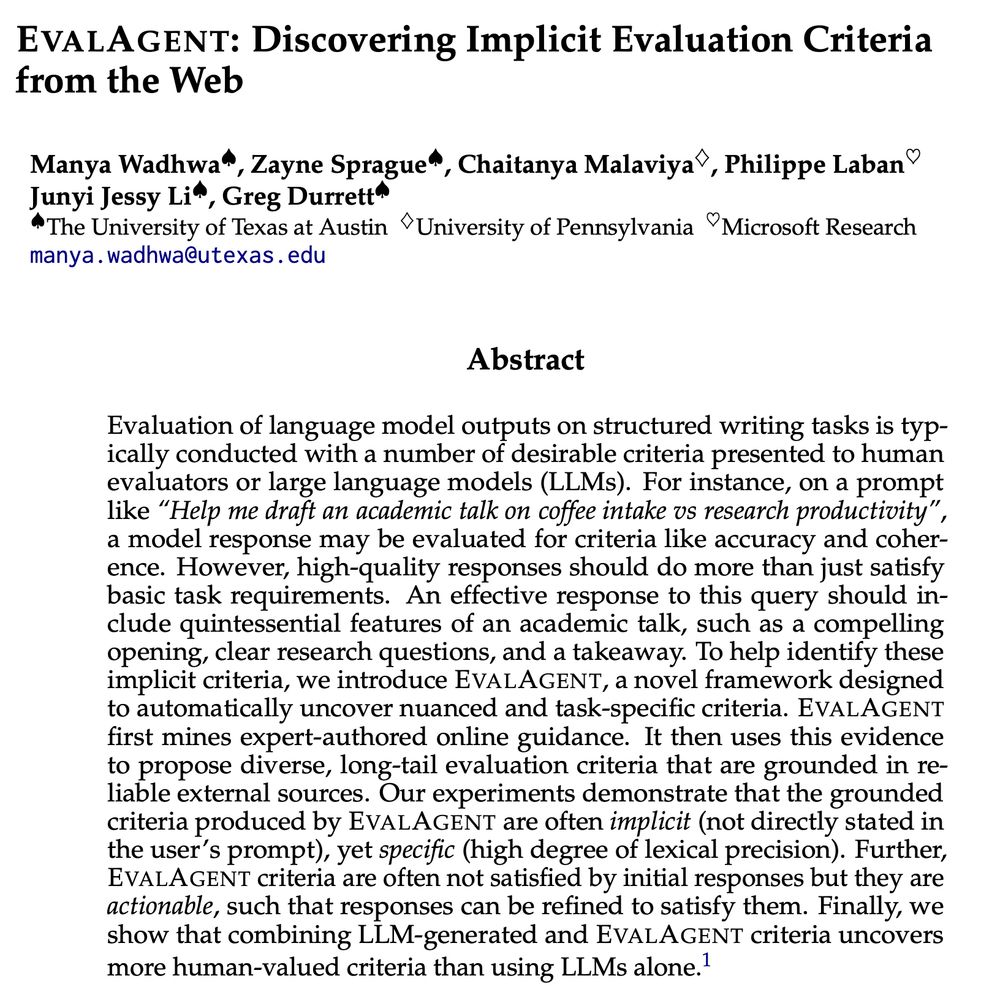

📌 Decomposing the user prompt into key conceptual queries

🌐 Searching the web for expert advice and summarizing it

📋 Aggregating web-retrieved information into specific and actionable evaluation criteria
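The three steps above can be sketched as a small pipeline. This is a minimal, hypothetical sketch under stated assumptions, not EvalAgent's actual implementation: the function name `eval_agent_sketch`, the `Criterion` type, and the use of case-insensitive deduplication as the "aggregation" step are all illustrative choices. The LLM and web-search stages are passed in as plain callables, so the toy demo runs offline on made-up data.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Criterion:
    text: str   # an actionable evaluation criterion
    query: str  # the conceptual query it was derived from


def eval_agent_sketch(
    prompt: str,
    decompose: Callable[[str], list[str]],
    search: Callable[[str], list[str]],
    summarize: Callable[[str, list[str]], list[str]],
) -> list[Criterion]:
    """Hypothetical pipeline: decompose -> search + summarize -> aggregate.

    Assumption: each stage is a user-supplied callable (e.g. an LLM call or
    a search API wrapper); aggregation here is simple deduplication.
    """
    criteria: list[Criterion] = []
    seen: set[str] = set()
    # Step 1: decompose the user prompt into key conceptual queries.
    for query in decompose(prompt):
        # Step 2: retrieve documents for the query and summarize the advice.
        documents = search(query)
        for advice in summarize(query, documents):
            # Step 3: aggregate into a deduplicated list of criteria.
            key = advice.strip().lower()
            if key and key not in seen:
                seen.add(key)
                criteria.append(Criterion(text=advice.strip(), query=query))
    return criteria


if __name__ == "__main__":
    # Toy, made-up "web" so the sketch runs offline; a real system would
    # call an LLM and a search API instead of these lambdas.
    toy_web = {
        "how to give a toast": ["Toast tip: keep it short.", "Toast tip: tell one story."],
        "public speaking tips": ["Speech tip: keep it short."],
    }
    result = eval_agent_sketch(
        "Write a wedding toast for my sister",
        decompose=lambda p: list(toy_web),  # hypothetical: ignores the prompt
        search=lambda q: toy_web[q],
        summarize=lambda q, docs: [d.split(": ", 1)[1] for d in docs],
    )
    for c in result:
        print(f"- {c.text}  (from query: {c.query!r})")
```

Passing the stages in as callables keeps the control flow (one query fans out to many documents, which collapse into one shared criteria list) separate from any particular model or search backend.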

We introduce EvalAgent, a framework that identifies nuanced and diverse criteria 📋✍️.

EvalAgent identifies 👩🏫🎓 expert advice on the web that implicitly addresses the user’s prompt 🧵👇

Jifan Chen, @jessyjli.bsky.social, @gregdnlp.bsky.social)

📜 arxiv.org/pdf/2305.147...

We show that LLMs can help us understand the nuances of annotation: they can convert the expressiveness of natural language explanations into a numerical form.

🧵