💻 github.com/apple/ml-lin...

📄 arxiv.org/abs/2503.10679

machinelearning.apple.com/research/tra...

This work will be presented at ICLR 2025 in Singapore. See you there!

@Apple

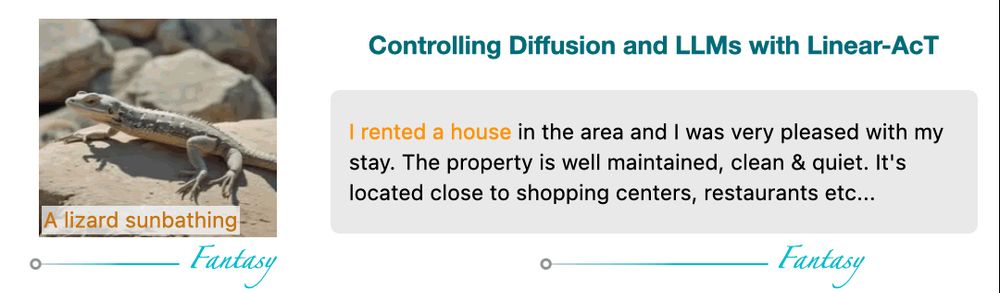

where we achieve interpretable and fine-grained control of LLMs and diffusion models via Activation Transport 🔥

📄 arxiv.org/abs/2410.23054

🛠️ github.com/apple/ml-act

0/9 🧵

We explored this through the lens of MoEs: