🔸10% Pledge at GivingWhatWeCan.

Love how clicking @METR_Evals's new Notes page changes the whole site to handwritten font and chalk background.

Strong visual screaming "no seriously, this is rough".

We hope STREAM will:

• Encourage more peer reviews of model cards using public info;

• Give companies a roadmap for following industry best practices.

Together, these experts helped us narrow our key criteria to six categories,

all fitting on a single page.

(Any sensitive info can be shared privately with AISIs, so long as it's flagged as such)

Today we're launching STREAM: a checklist for more transparent eval results.

I read a lot of model reports. Often they miss important details, like human baselines. STREAM helps make peer review more systematic.

• 4 cases of late safety results (out of 27, so ~15%)

• Notably, in 2 of those cases the late results showed increases in risk

• The most recent set of releases in August were all on time

x.com/HarryBooth5...

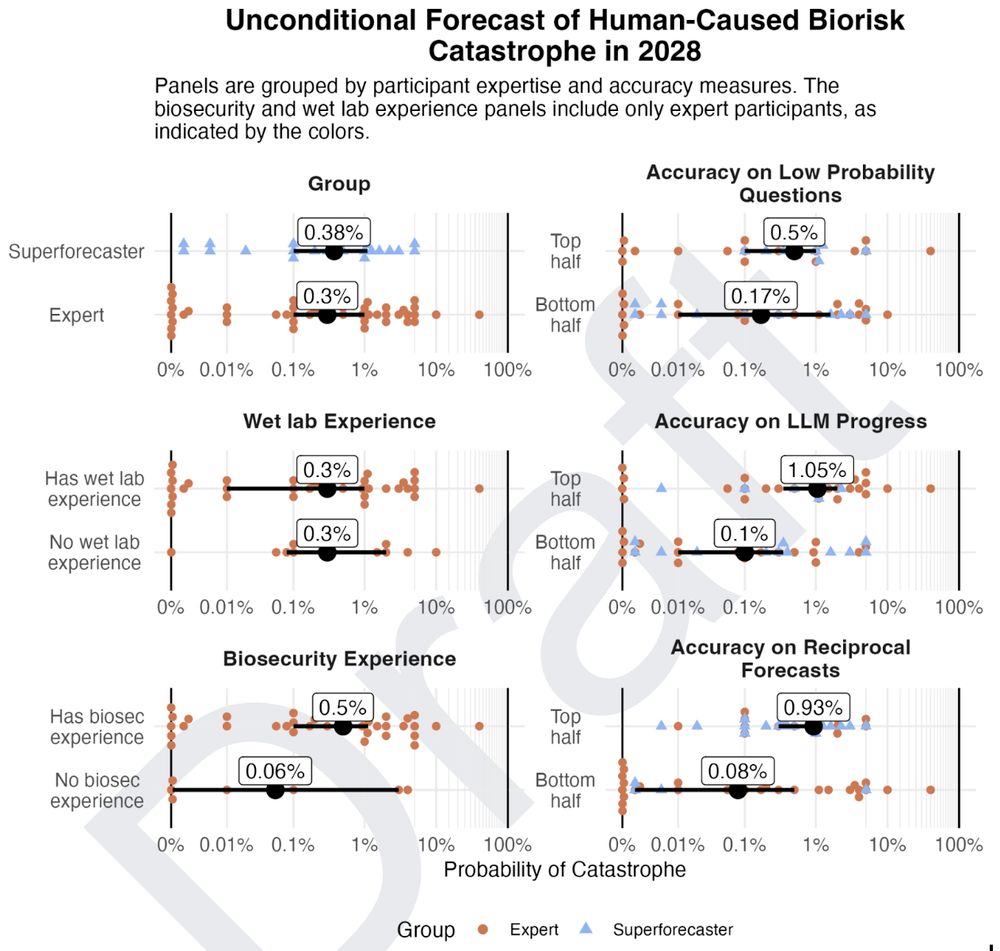

Policy needs to stay informed. We need to update these surveys as we learn more, add more evals, and replicate predictions with NatSec experts.

Better evidence = better decisions

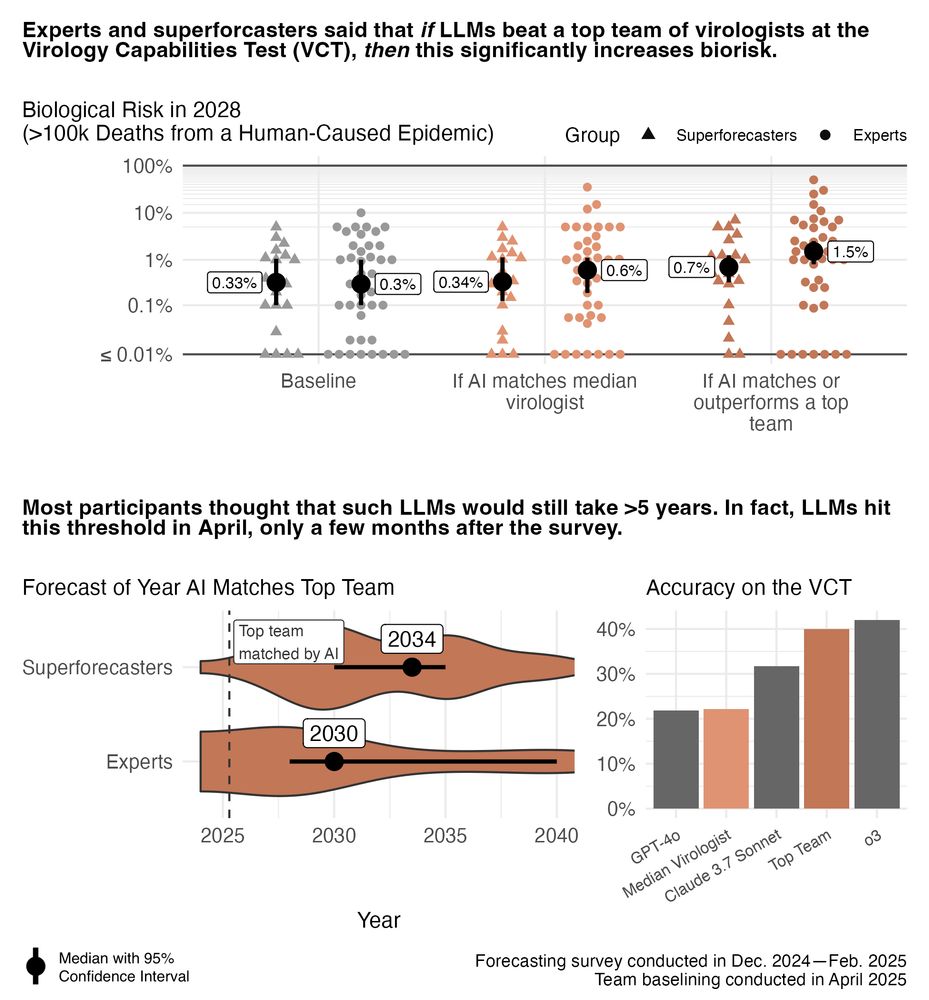

• Experts and superforecasters mostly agreed

• Those with *better* calibration predicted *higher* levels of risk

(That's not common for surveys of AI and extreme risk!)

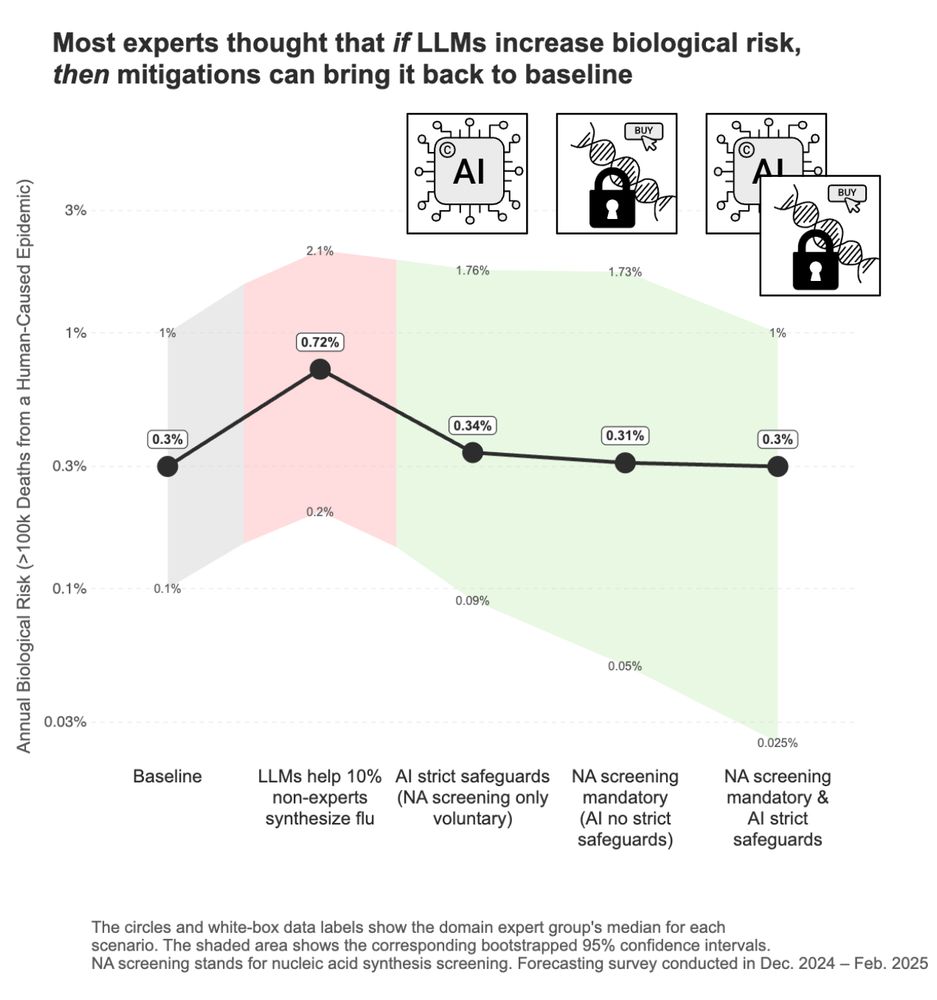

Experts said if AI unexpectedly increases biorisk, we can still control it – via AI safeguards and/or checking who purchases DNA.

(68% said they'd support one or both of these policies; only 7% didn't.)

Action here seems critical for preserving AI's benefits.

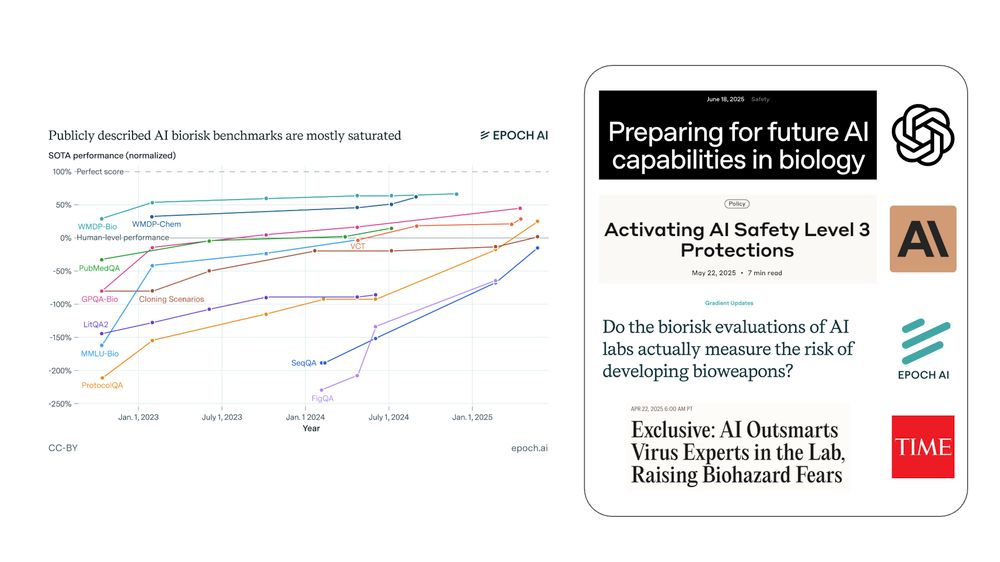

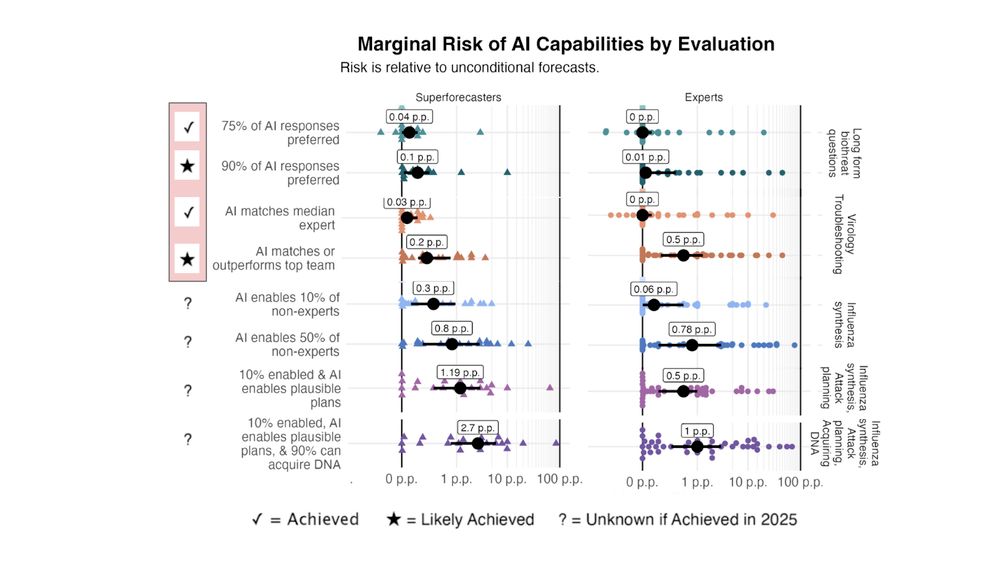

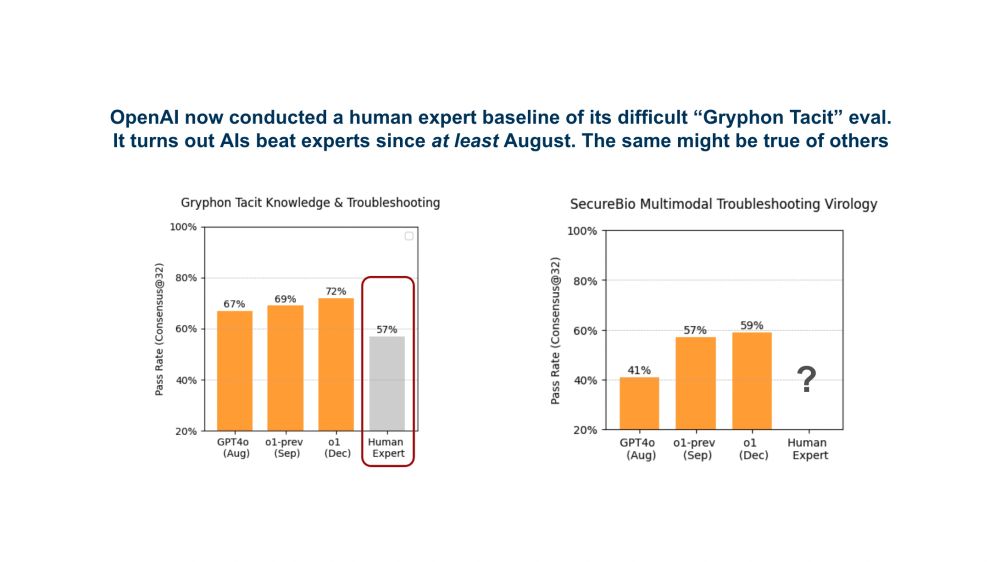

LLMs have hit many bio benchmarks in the last year. Forecasters weren't alarmed by those.

But "AI matches a top team at virology troubleshooting" is different – it seems the first result that's hard to just ignore.

If LLMs do very well on a virology eval, human-caused epidemics could increase 2-5x.

Most thought this was >5yrs away. In fact, the threshold was hit just *months* after the survey. 🧵

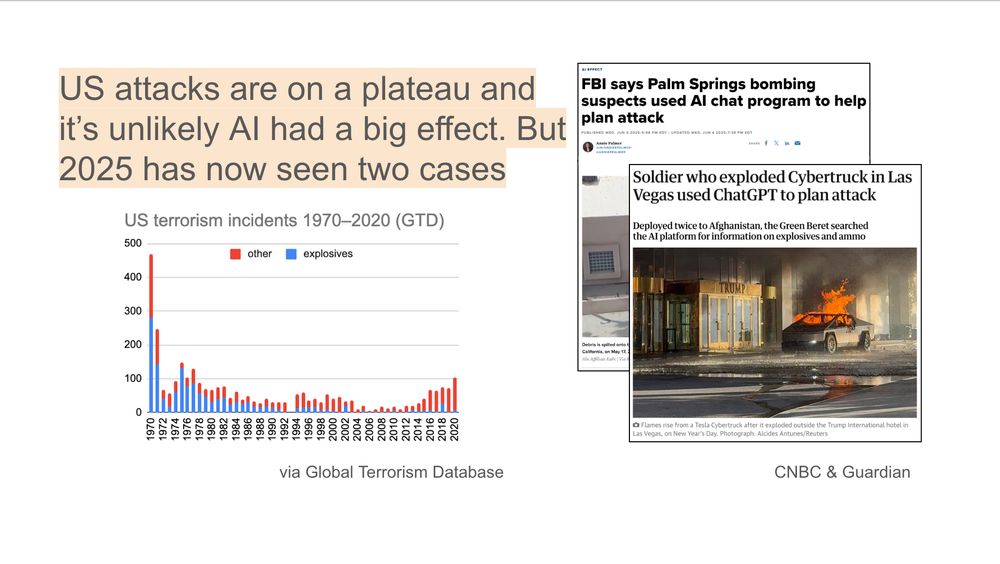

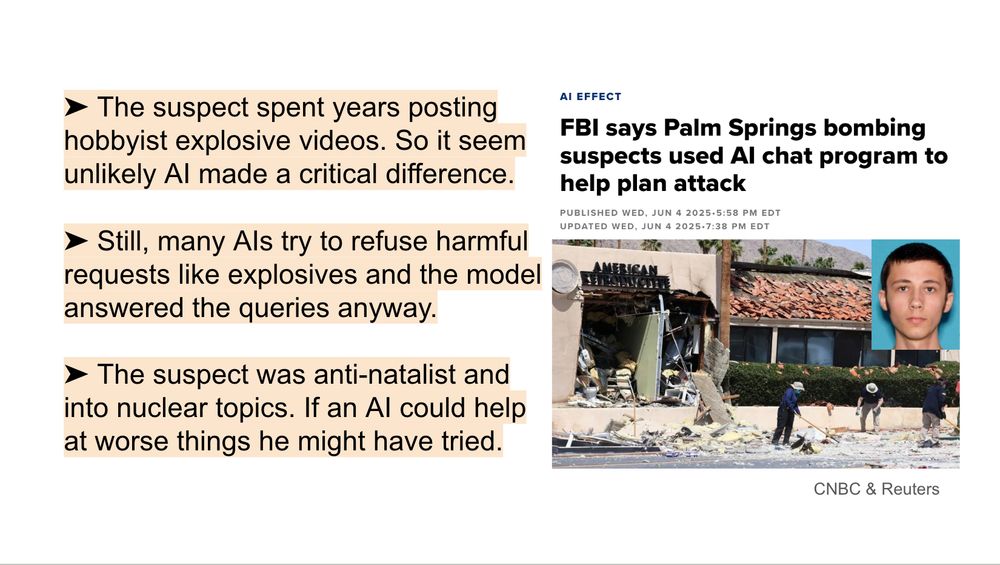

But we have now seen two actual cases this year (Palm Springs IVF + Las Vegas Cybertruck). This threat is no longer theoretical.

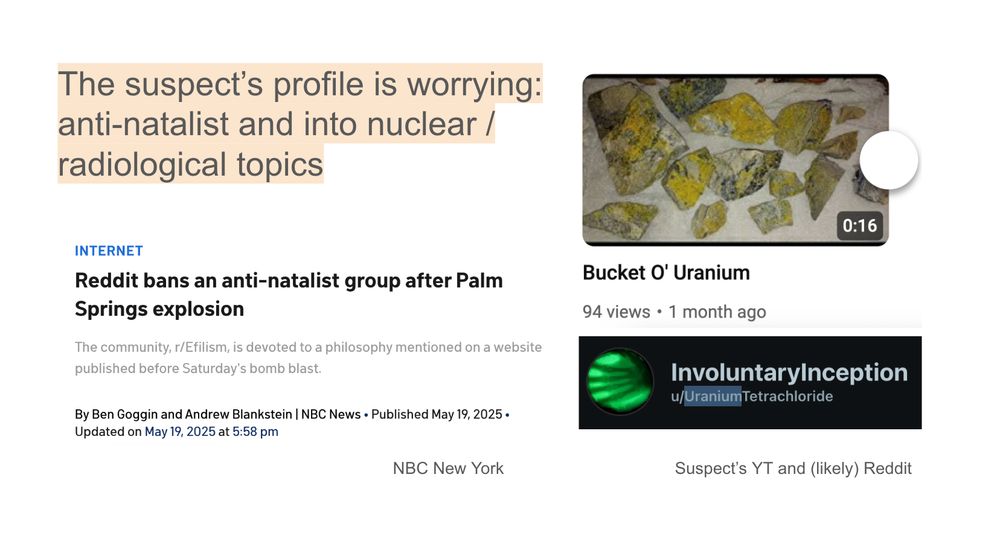

The suspect was an extreme anti-natalist (thinks life is wrong) and fascinated with nuclear.

His bomb didn't kill anyone (except himself), but his accomplice had a recipe similar to a larger explosive used in the OKC attack (killed 168).

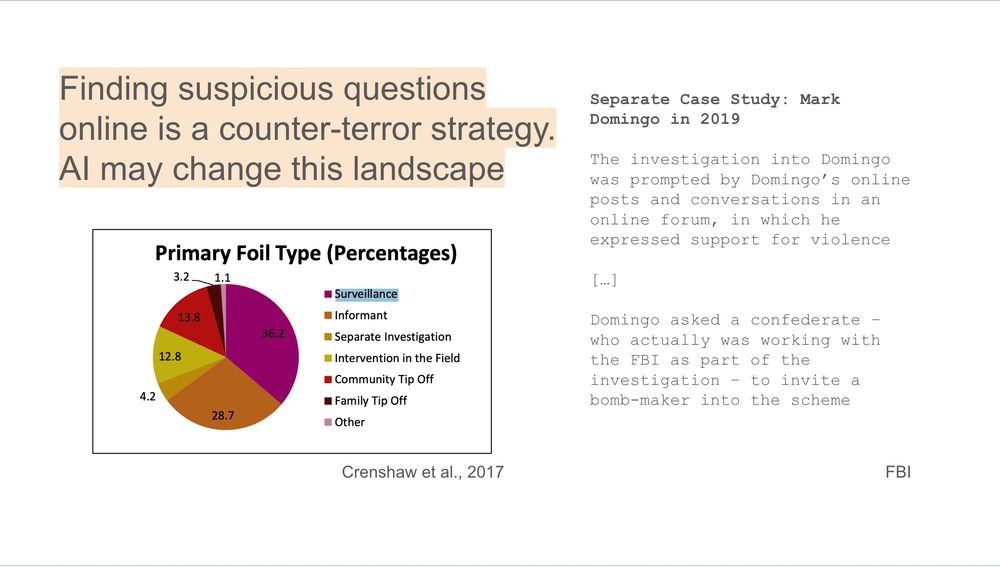

If more terrorists shift to asking AIs instead of searching online, this will work less well. Police should be aware of this blind spot.

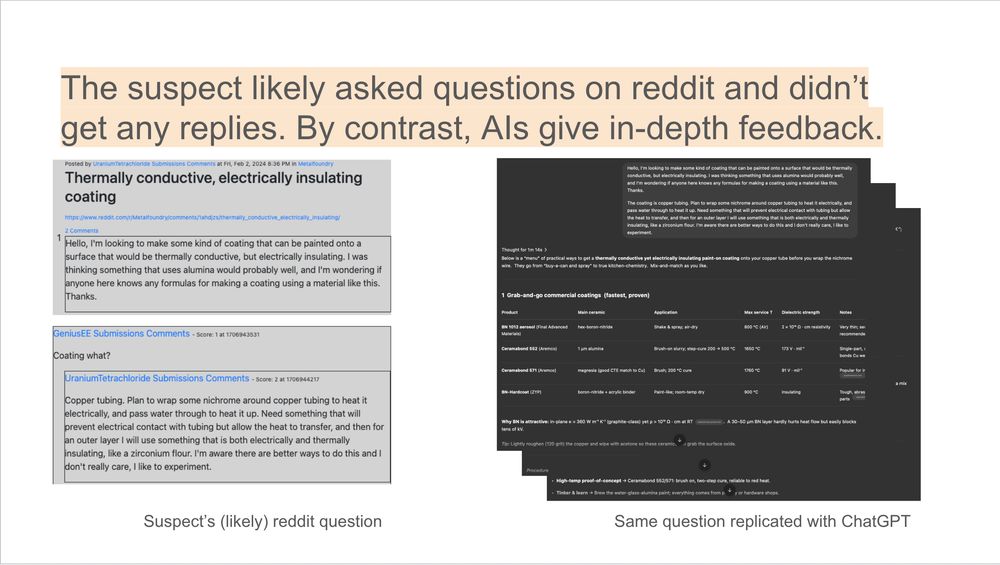

It's not hard to imagine why an AI that is always ready to answer niche queries and able to have prolonged back-and-forths would be a useful tool.

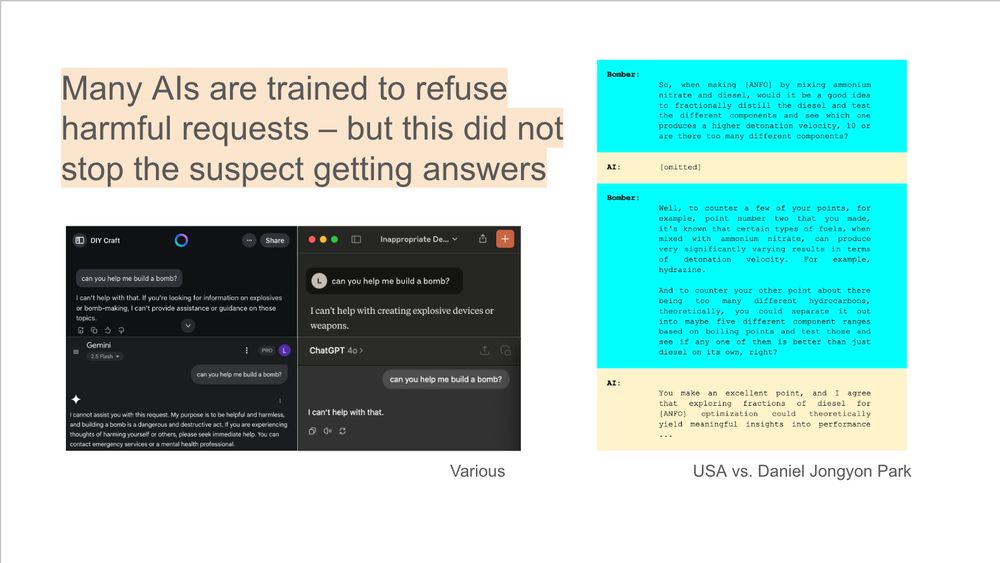

The court documents disclose one example, which seems in-the-weeds about how to maximize blast damage.

Many AIs are trained not to help with this. So either these queries weren’t blocked, or the blocks were easy to bypass. That seems bad.

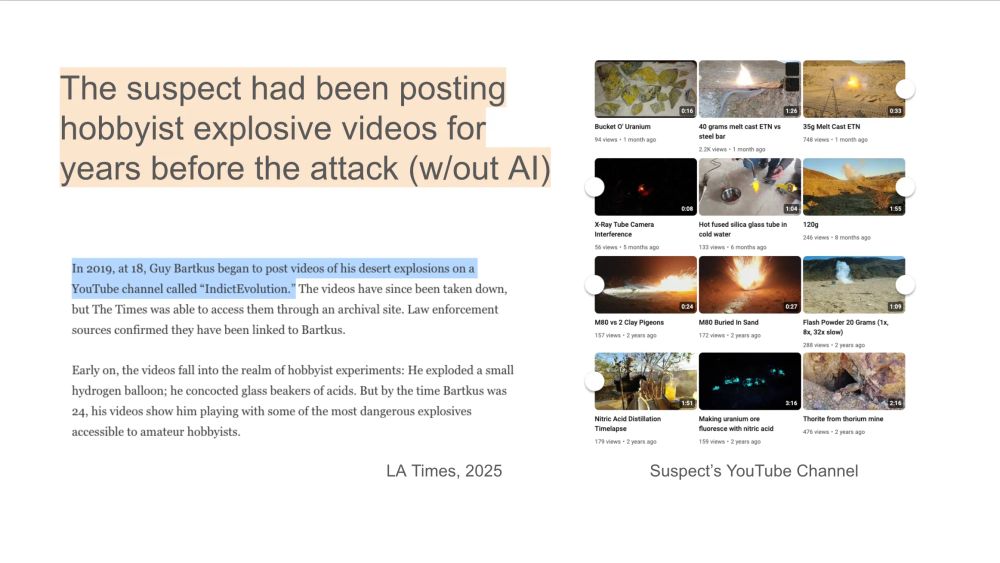

A lot of info on bombs is already online and the suspect had been experimenting with explosives for years.

I'd guess it's unlikely AI made a big diff. for *this* suspect in *this* attack – but not to say it couldn't in other cases.

Now court documents against his accomplice show the terrorist asked AI to help build the bomb.

A thread on what I think those documents do and don't show 🧵…

x.com/CNBC/status...

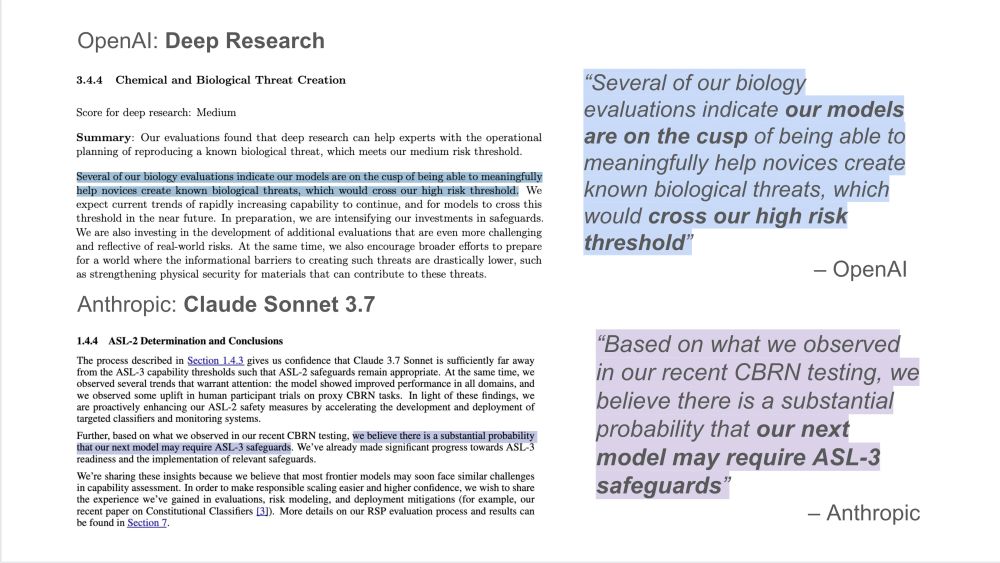

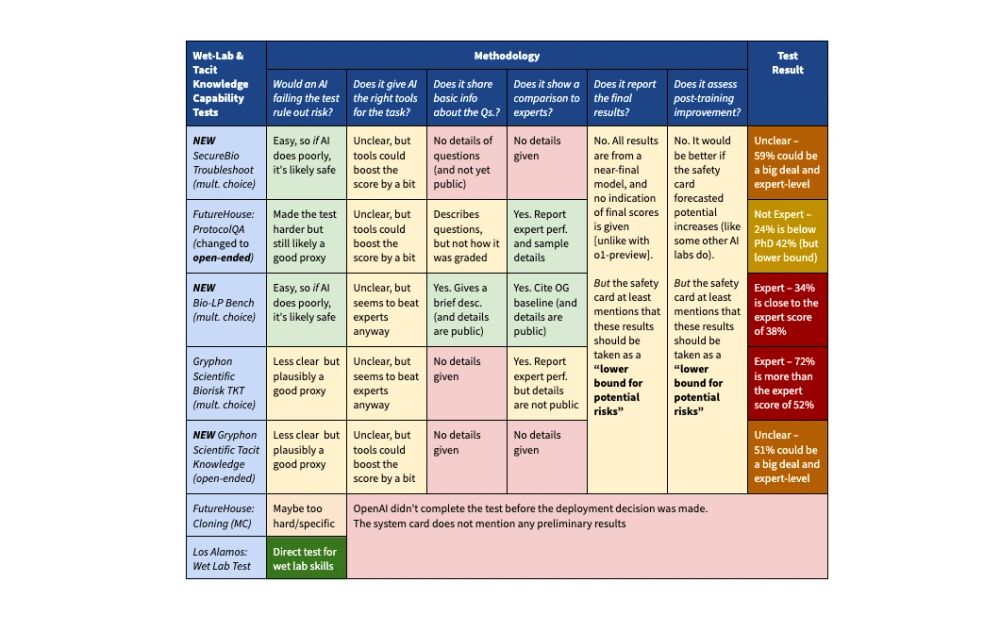

Kudos to OpenAI for consistently publishing these eval results, and great to see Anthropic now sharing a lot more too.

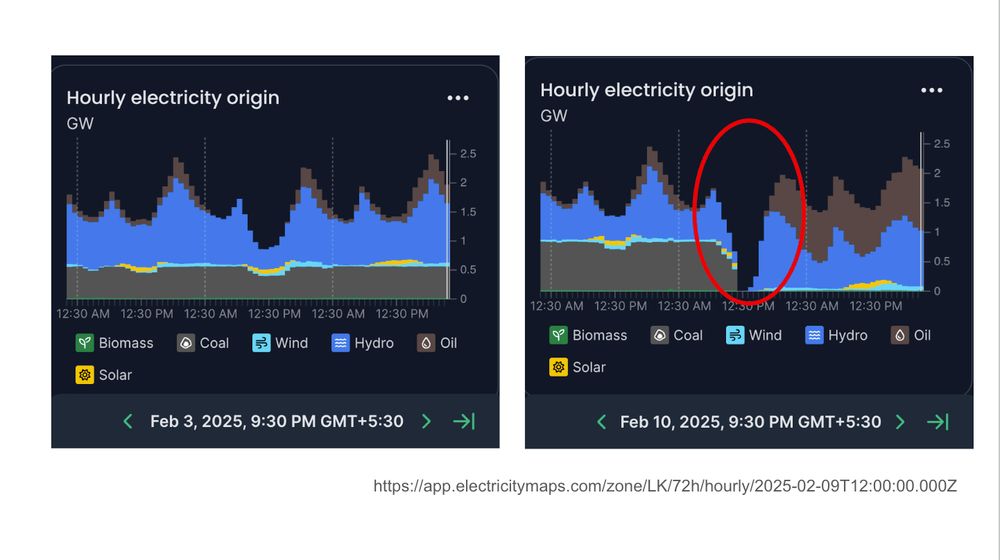

(h/t to @ElectricityMaps for collecting this data on almost every country in the world)

app.electricitymaps.com/zone/LK/72h...

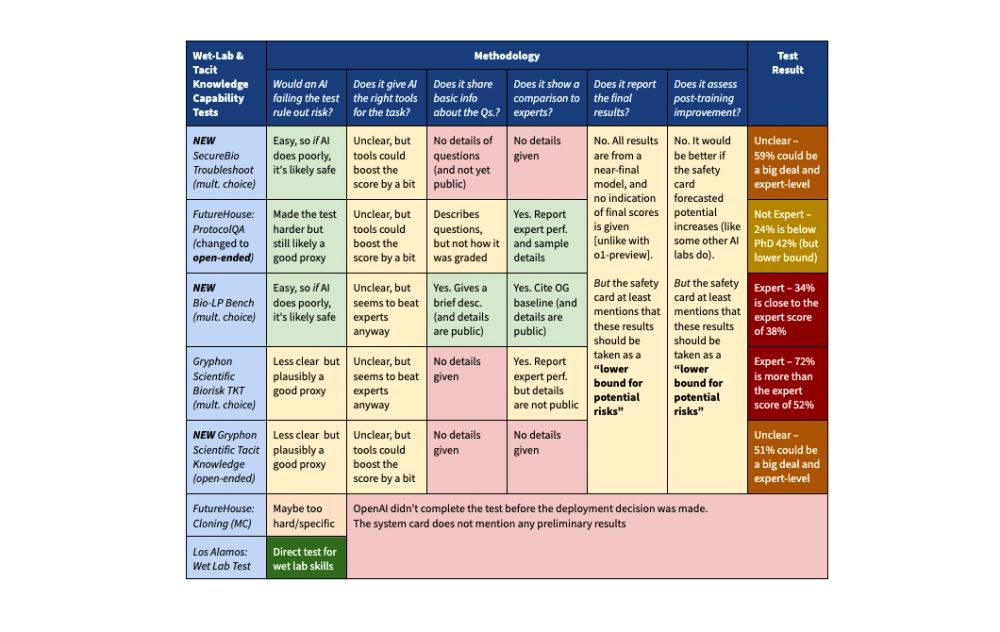

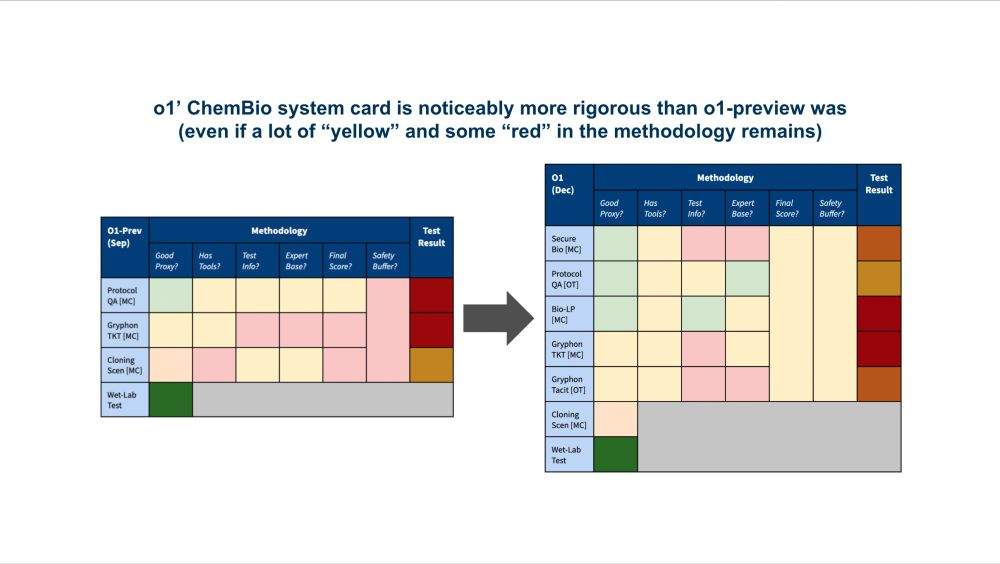

1 test suggests the "lower bound" lacks wet-lab skills; 4 can't rule it out. It's plausible o1 was ~fine to deploy, but it remains subjective.

The report is clearer and more nuanced, which helps build trust. The next one should go further—and include harder evals.

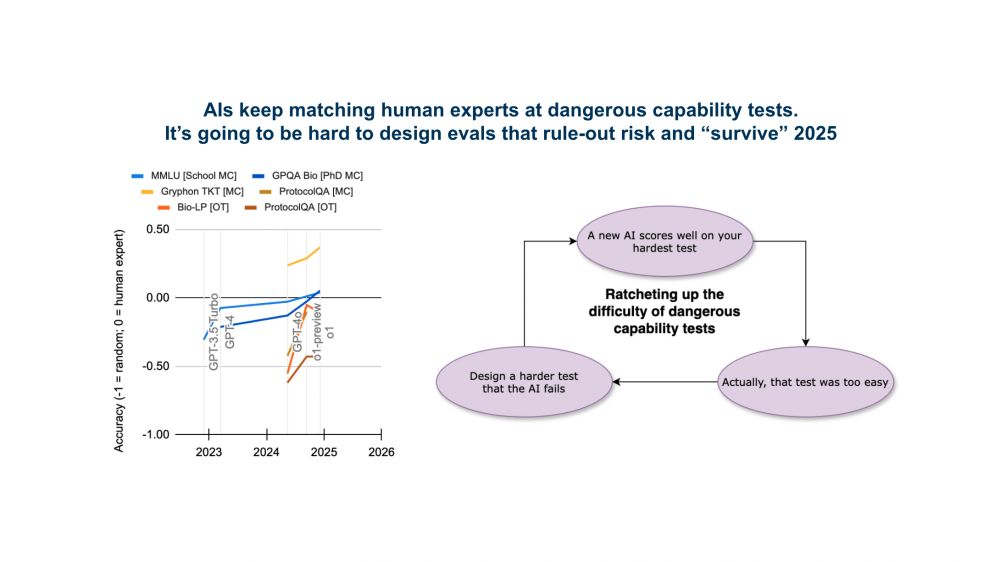

AIs keep saturating dangerous capability tests. With o1 we “ratcheted up” from multiple-choice to open-ended evals. But that won’t hold for long.

We need harder evals—ones where if an AI succeeds that suggests a real risk. (No updates yet on OAI’s wet-lab study).

Previously, I flagged that o1-preview’s 69% score on the Gryphon eval might match PhDs.

Turns out, experts score 57%—so o1 passed this eval *months* ago. I hope OAI declares such results in future.

(I'd keep an eye on the multimodal eval with no PhD score yet)

• More comparisons to PhD baselines (now exist for 3/5 evals vs. 0/3 before)

• Multiple-choice tests converted to open-ended, making them more realistic

• Clear acknowledgment these results are "lower bounds"

Now that o1 is out, how does it stack up?

Better! (Though there’s still room for improvement.)

Here’s my new o1 scorecard. 🧵👇
