lisaalaz.github.io

LLM agents performing real-world tasks should be able to combine these different types of reasoning, but are they fit for the job? 🤔

🧵⬇️

LLM agents performing real-world tasks should be able to combine these different types of reasoning, but are they fit for the job? 🤔

🧵⬇️

We demonstrate that human preferences can be reverse engineered effectively by pipelining LLMs to optimise upstream preambles via reinforcement learning 🧵⬇️

We demonstrate that human preferences can be reverse engineered effectively by pipelining LLMs to optimise upstream preambles via reinforcement learning 🧵⬇️

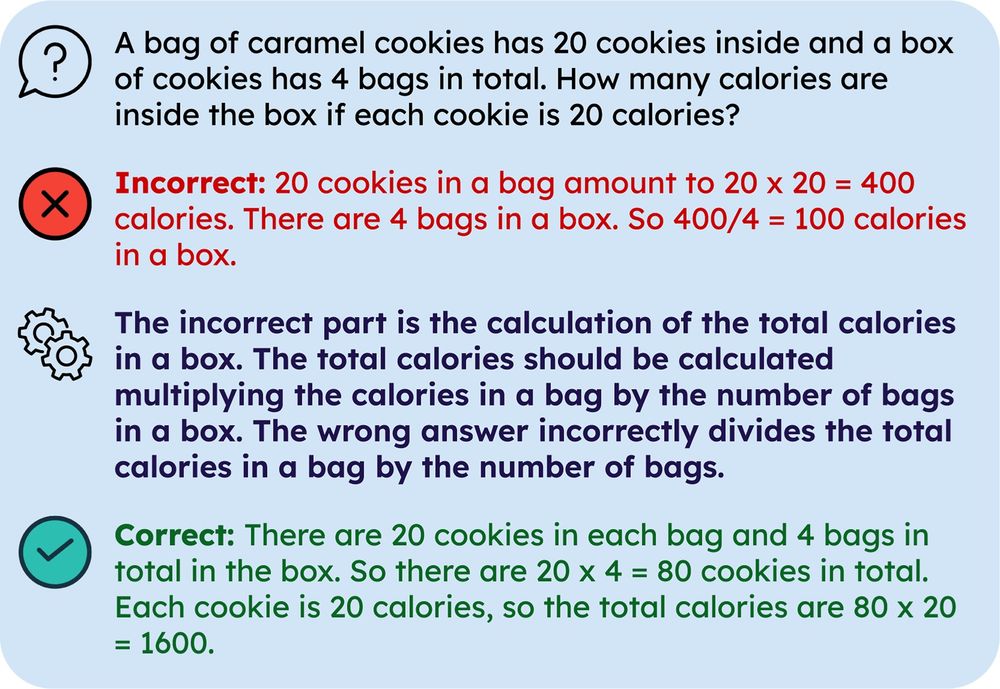

When LLMs learn from previous incorrect answers, they typically observe corrective feedback in the form of rationales explaining each mistake. In our new preprint, we find these rationales do not help, in fact they hurt performance!

🧵

When LLMs learn from previous incorrect answers, they typically observe corrective feedback in the form of rationales explaining each mistake. In our new preprint, we find these rationales do not help, in fact they hurt performance!

🧵