LLMs often produce inconsistent explanations (62–86%), hurting faithfulness and trust in explainable AI.

We introduce PEX consistency, a measure of explanation consistency, and show that optimizing it via DPO improves faithfulness by up to 9.7%.

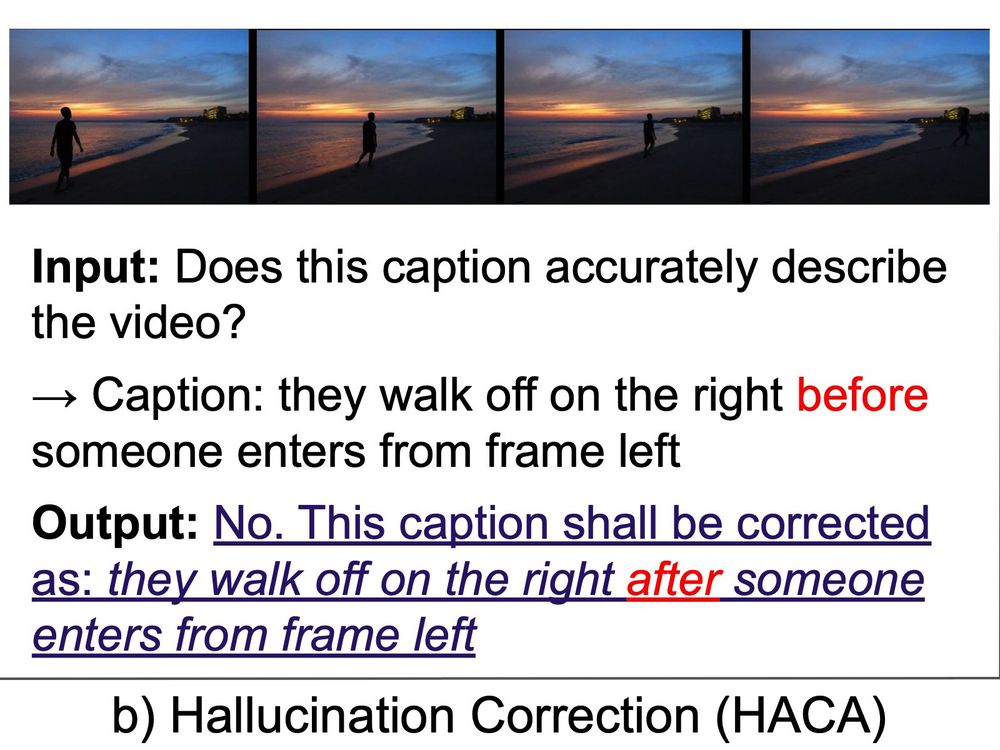

Excited to share our accepted paper at ACL: Can Hallucination Correction Improve Video-language Alignment?

Link: arxiv.org/abs/2502.15079