✅ Mixture-of-Transformers (MoT) achieves dense-level 7B performance with up to 66% fewer FLOPs!

📌 GitHub repo: github.com/facebookrese...

📄 Paper: arxiv.org/abs/2411.04996

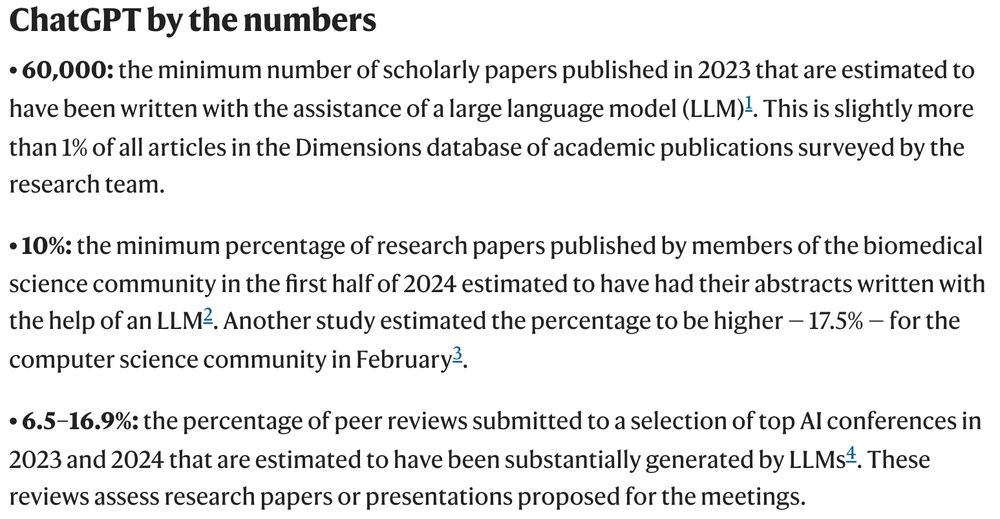

📊 By late 2024, LLMs assist in writing:

- 18% of financial consumer complaints

- 24% of corporate press releases

- Up to 15% of job postings (esp. in small/young firms)

- 14% of UN press releases

arxiv.org/abs/2502.09747

🧵 Following up on Mixture-of-Transformers (MoT), we're excited to share Mixture-of-Mamba (MoM)!

✅ Mamba w/ Transfusion setting (image + text): Dense-level performance with just 34.76% of the FLOPs

Full paper: 📚 arxiv.org/abs/2501.16295
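
As a quick sanity check on the numbers above, here is a minimal sketch that converts "X% of the FLOPs" into "Y% fewer FLOPs", so the Mixture-of-Mamba figure can be read on the same scale as the MoT claim of "up to 66% fewer FLOPs". The function name `flops_saved_percent` is hypothetical and is not taken from either paper or repo.

```python
def flops_saved_percent(flops_used_percent: float) -> float:
    """Convert 'uses X% of the dense model's FLOPs' into 'Y% fewer FLOPs'.

    Hypothetical helper for illustration only.
    """
    if not 0.0 <= flops_used_percent <= 100.0:
        raise ValueError("flops_used_percent must be between 0 and 100")
    return 100.0 - flops_used_percent


if __name__ == "__main__":
    # 34.76% is the figure quoted in the post for the Transfusion setting.
    saved = flops_saved_percent(34.76)
    print(f"{saved:.2f}% fewer FLOPs")
```

Under this reading, 34.76% of the FLOPs corresponds to roughly 65% fewer FLOPs, in the same range as the MoT result.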

LLM self-taught to code for next-gen AI hardware!

arxiv.org/abs/2502.02534

1/ Programming AI accelerators is a major bottleneck in ML. Our self-improving LLM agent learns to write optimized code for new hardware, achieving 3.9x better results.

www.nature.com/articles/d41...

We created a pipeline using GPT-4 to read thousands of papers (from #Nature, #ICLR, etc.) and generate feedback (e.g., suggestions for improvement). We then compared that feedback with human expert reviews.

(1/n)

Around 8% for bioRxiv papers.

Paper: arxiv.org/abs/2404.01268 🧵

Main results👇

proceedings.mlr.press/v235/liang24...

Media Coverage: The New York Times

nyti.ms/3vwQhdi

Many thanks to my collaborators for their insights and dedication to advancing fair and ethical AI practices in scientific publishing. #AI #PeerReview

www.nature.com/articles/d41...
