Teacher @ aivancity

Teaching Assistant @ Sorbonne Université

https://paullerner.github.io/

"Self-Retrieval from Distant Contexts for Document-Level Machine Translation", accepted to the Conference on Machine Translation (WMT25), from @ziqianpeng.bsky.social, @rachelbawden.bsky.social, @yvofr.bsky.social

- a vLLM-based implementation: 4.15 times faster!

- a naive Hugging Face implementation, which does not sort texts by length: 4.61 times faster!

It aims to be feature-complete for many information-theoretic metrics, including perplexity (PPL), surprisal, and bits per character (BPC), as well as their word-level counterparts.
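
All of these metrics can be derived from a language model's per-token log-probabilities. The sketch below is illustrative only and is not this library's API: it assumes natural-log token probabilities as input, reports surprisal in bits, and uses a hypothetical `word_ids` list mapping each token to the index of the word it belongs to. Word-level perplexity here follows one common convention (total negative log-likelihood divided by the number of words); other conventions exist.

```python
import math
from typing import List, Sequence


def token_surprisals(logprobs: Sequence[float]) -> List[float]:
    """Per-token surprisal in bits: -log2 p, computed from natural-log probabilities."""
    return [-lp / math.log(2) for lp in logprobs]


def perplexity(logprobs: Sequence[float]) -> float:
    """Token-level perplexity: exp of the mean negative log-likelihood (in nats)."""
    return math.exp(-sum(logprobs) / len(logprobs))


def bits_per_character(logprobs: Sequence[float], text: str) -> float:
    """BPC: total surprisal in bits divided by the number of characters in `text`."""
    return sum(token_surprisals(logprobs)) / len(text)


def word_surprisals(logprobs: Sequence[float], word_ids: Sequence[int]) -> List[float]:
    """Word-level surprisal in bits: sum the surprisals of the tokens of each word.

    `word_ids[i]` is the (hypothetical) index of the word that token i belongs to.
    """
    totals = [0.0] * (max(word_ids) + 1)
    for surprisal, word in zip(token_surprisals(logprobs), word_ids):
        totals[word] += surprisal
    return totals


def word_perplexity(logprobs: Sequence[float], word_ids: Sequence[int]) -> float:
    """Word-level perplexity: total negative log-likelihood normalized by word count."""
    n_words = max(word_ids) + 1
    return math.exp(-sum(logprobs) / n_words)
```

For example, with token probabilities 0.5, 0.25 and 0.5 over the text `"a cat"` and `word_ids = [0, 1, 1]`, the token surprisals are 1, 2 and 1 bits, BPC is 4 bits / 5 characters = 0.8, and the word-level surprisals are 1 and 3 bits. Summing token surprisals per word is what makes the word-level metrics independent of how the tokenizer splits words.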

@haldaume3.bsky.social, Léo Labat, Gaël Lejeune, Pierre-Antoine Lequeu, @bpiwowar.bsky.social, Nazanin Shafiabadi and @yvofr.bsky.social. Read the paper here: talnarchives.atala.org/ateliers/202...

Any feedback is appreciated :)

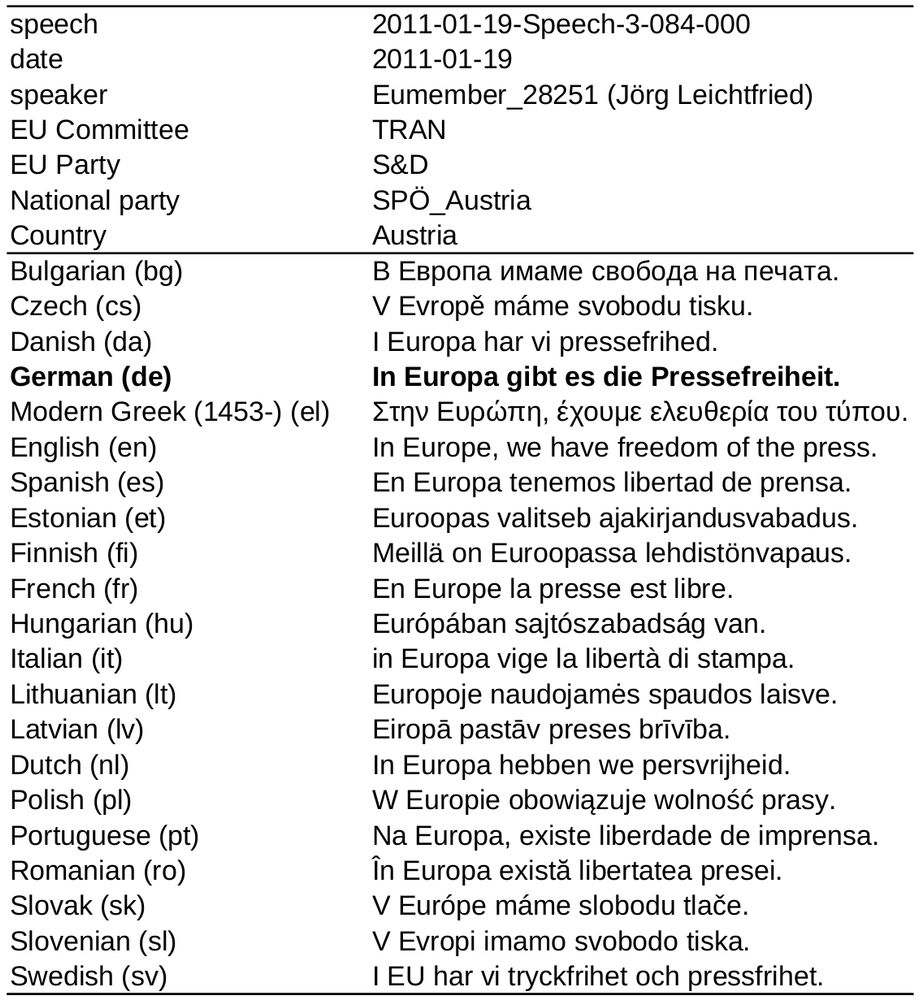

"On Assessing the Political Biases of Multilingual Large Language Models" by @lernerp.bsky.social, Laurène Cave, @haldaume3.bsky.social, Léo Labat, Gaël Lejeune, Pierre-Antoine Lequeu, @bpiwowar.bsky.social, Nazanin Shafiabadi and @yvofr.bsky.social, in collaboration with the STIH lab