Mastodon: @LeoVarnet@fediscience.org

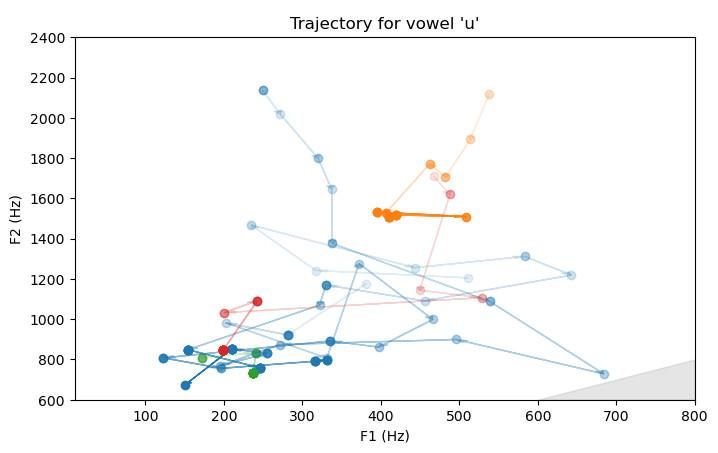

A master's student in my team has developed a very nice vowel perception experiment that applies the concept of Markov Chain Monte Carlo to human behavior.

We are looking for a few volunteers to take part in a short (~15-20 min) pilot test. If you're willing to help, the

My presentation: "La voix humaine, de Jean Cocteau à la psycholinguistique" ("The human voice, from Jean Cocteau to psycholinguistics")

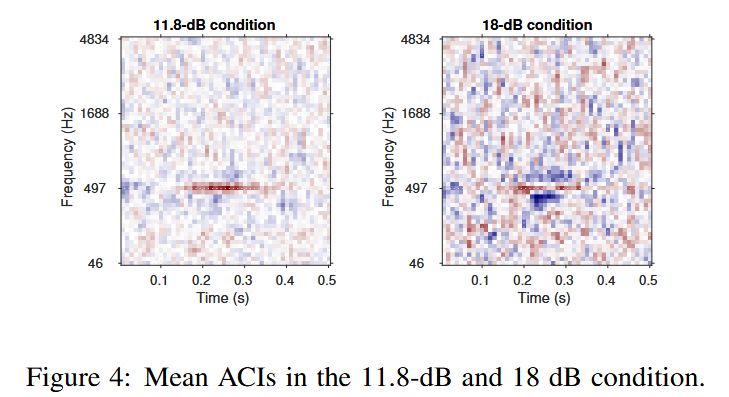

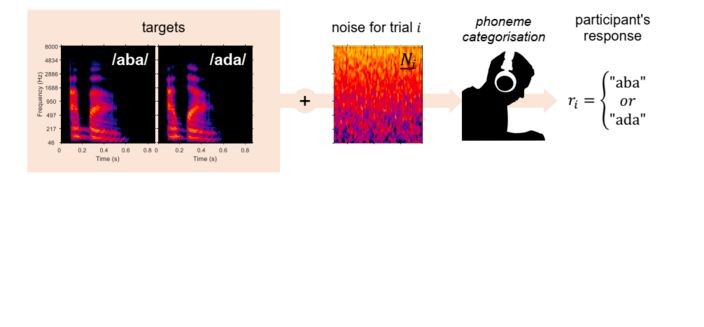

pubmed.ncbi.nlm.nih.gov/38364046/