Website: https://ml.informatik.uni-freiburg.de/profile/purucker/

Authors: @nickerickson.bsky.social Lennart Purucker @atschalz.bsky.social @dholzmueller.bsky.social Prateek Desai David Salinas Frank Hutter

LB: tabarena.ai

Paper: arxiv.org/abs/2506.16791

Code: tabarena.ai/code

12/

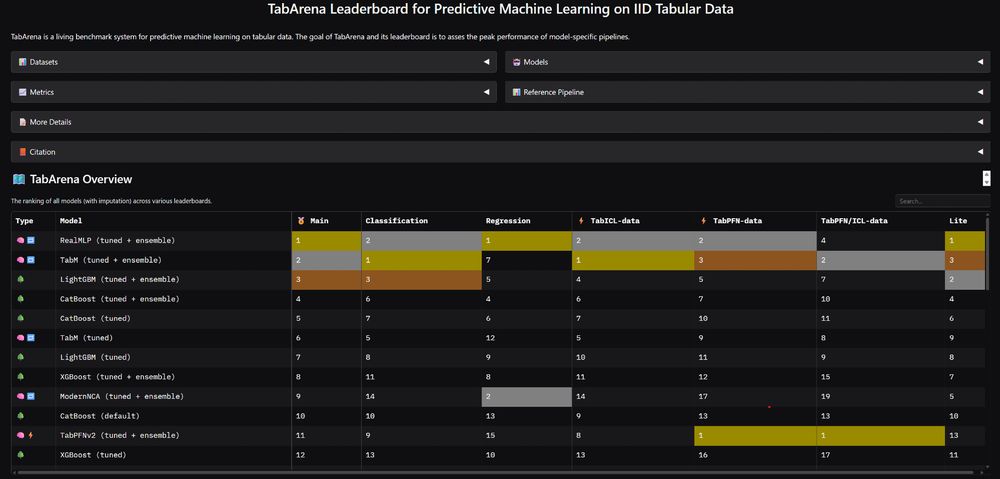

📊 an online leaderboard (submit!)

📑 carefully curated datasets

📈 strong tree-based, deep learning, and foundation models

🧵

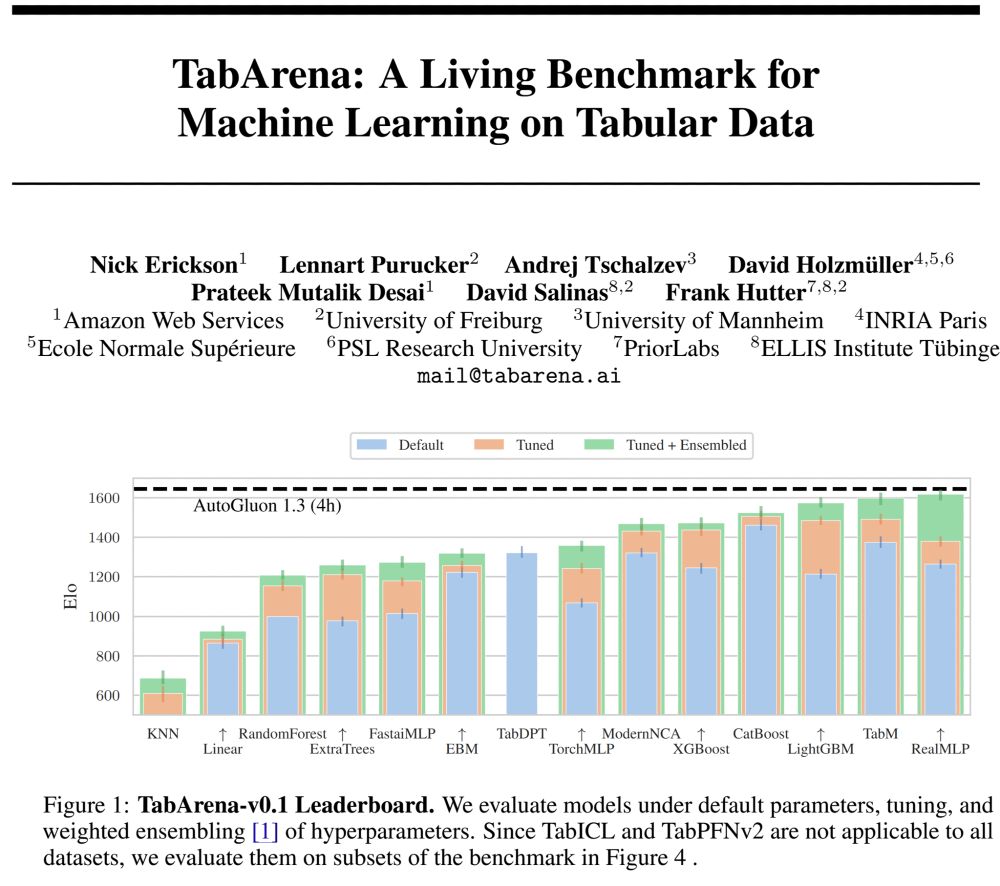

This is excellent news for (small) tabular ML! Check out our Nature article (nature.com/articles/s41...) and code (github.com/PriorLabs/Ta...)
