“From Priest to Doctor: Domain Adaptation for Low-Resource Neural Machine Translation” by Ali Marashian, @covetedfish.bsky.social, @alexispalmer.bsky.social, and others

Find out in “Boosting the Capabilities of Compact Models in Low-Data Contexts with Large Language Models and Retrieval-Augmented Generation” by Bhargav Shandilya and @alexispalmer.bsky.social

arxiv.org/abs/2410.00387

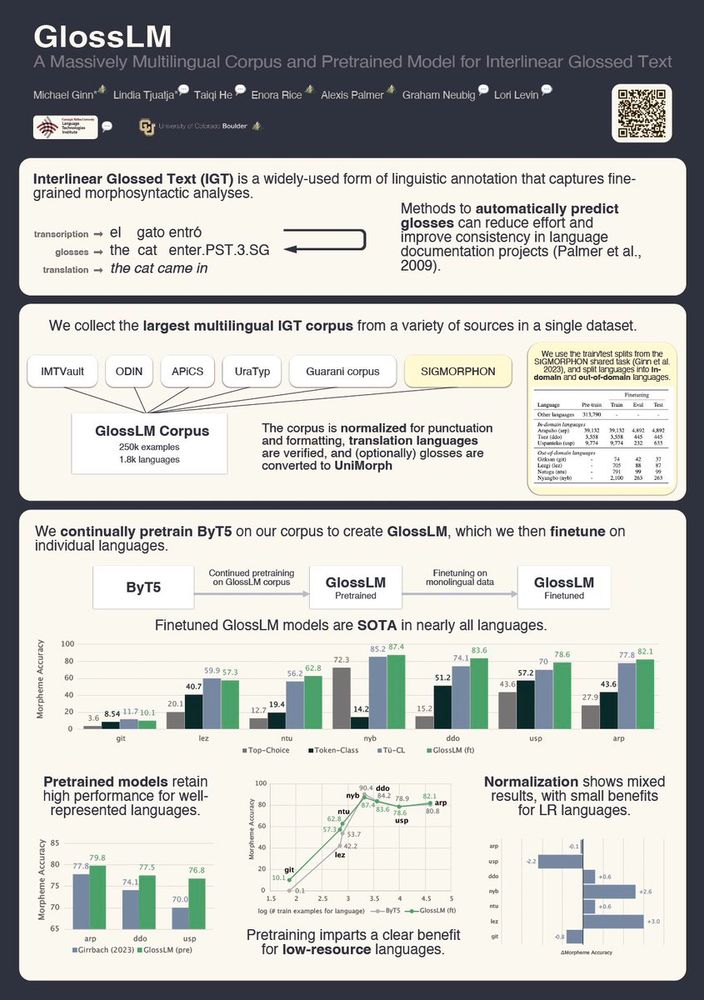

🗺️GlossLM: A Massively Multilingual Corpus and Pretrained Model for Interlinear Glossed Text [EMNLP Main]
