Previously intern @SonyCSL, @Ircam, @Inria

🌎 Personal website: https://lebellig.github.io/

Solving the Schrödinger bridge problem with a non-zero-drift reference process: learn curved interpolants, apply minibatch OT with the induced metric, learn the mixture of diffusion bridges.
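
For readers unfamiliar with the minibatch OT step, here is a minimal sketch of re-pairing two equal-size minibatches with an exact assignment. It is not the paper's implementation: the function name `minibatch_ot_pairs` and the `cost_fn` argument are hypothetical, and the default squared Euclidean cost is only a stand-in for the induced metric mentioned in the post.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def minibatch_ot_pairs(x0, x1, cost_fn=None):
    """Re-pair two equal-size minibatches using an exact OT assignment.

    With uniform weights and equal batch sizes, the optimal transport plan
    can be taken to be a permutation, so a linear assignment solver suffices.
    """
    if cost_fn is None:
        # Assumption: squared Euclidean cost, a stand-in for the induced metric.
        cost = ((x0[:, None, :] - x1[None, :, :]) ** 2).sum(axis=-1)
    else:
        # Hypothetical hook: cost_fn returns an (n, n) pairwise cost matrix.
        cost = cost_fn(x0, x1)
    rows, cols = linear_sum_assignment(cost)
    return x0[rows], x1[cols]


# Toy usage on two Gaussian minibatches.
rng = np.random.default_rng(0)
x0 = rng.normal(size=(8, 2))
x1 = rng.normal(loc=3.0, size=(8, 2))
a, b = minibatch_ot_pairs(x0, x1)
```

The re-paired samples `(a, b)` would then serve as the endpoints for whatever interpolant or bridge is being trained; passing a different `cost_fn` is where a learned metric could be plugged in.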

Transport between two distributions defined on different spaces by training a noise-to-data flow model in the target space, conditioned on the source data and leveraging Gromov–Wasserstein couplings.

Unexpected result: swapping the SD-VAE for a pretrained visual encoder improves FID, challenging the idea that encoders' information compression is not suited for generative modeling!

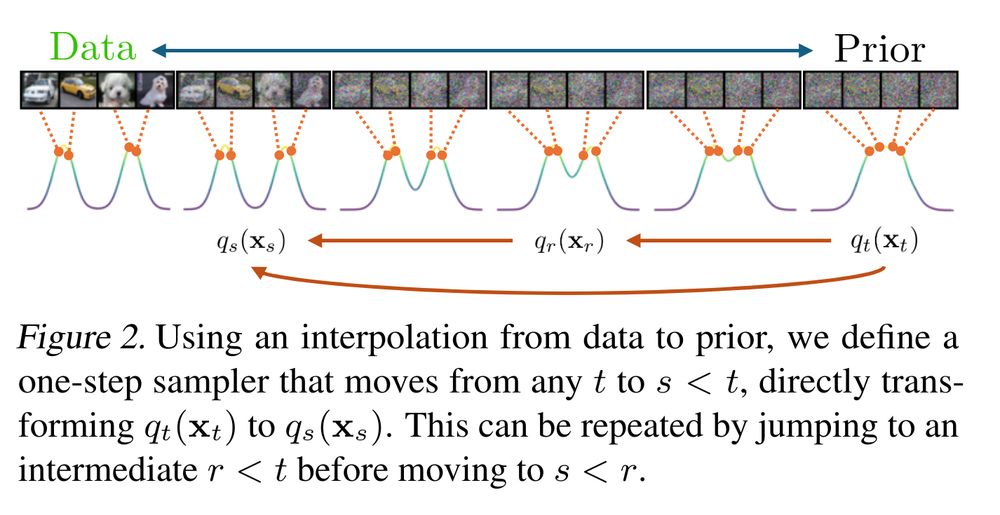

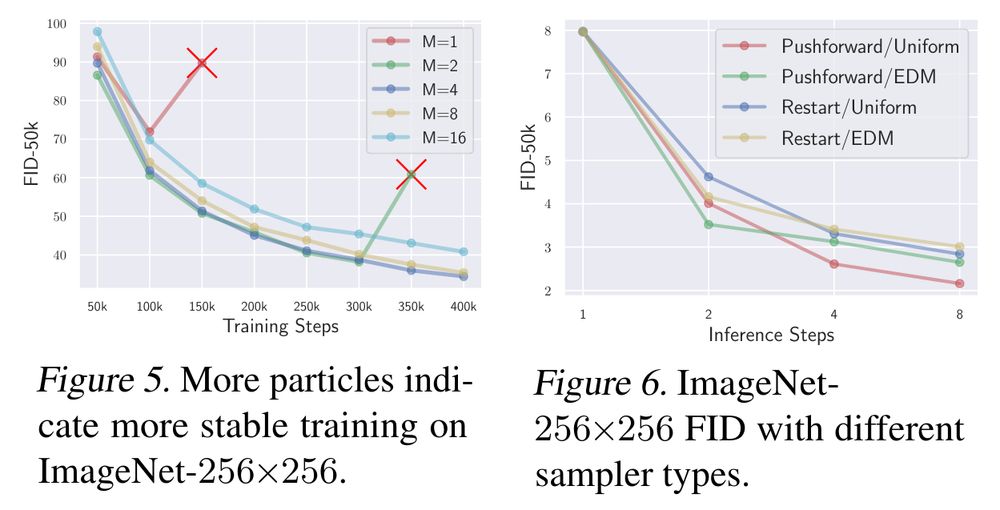

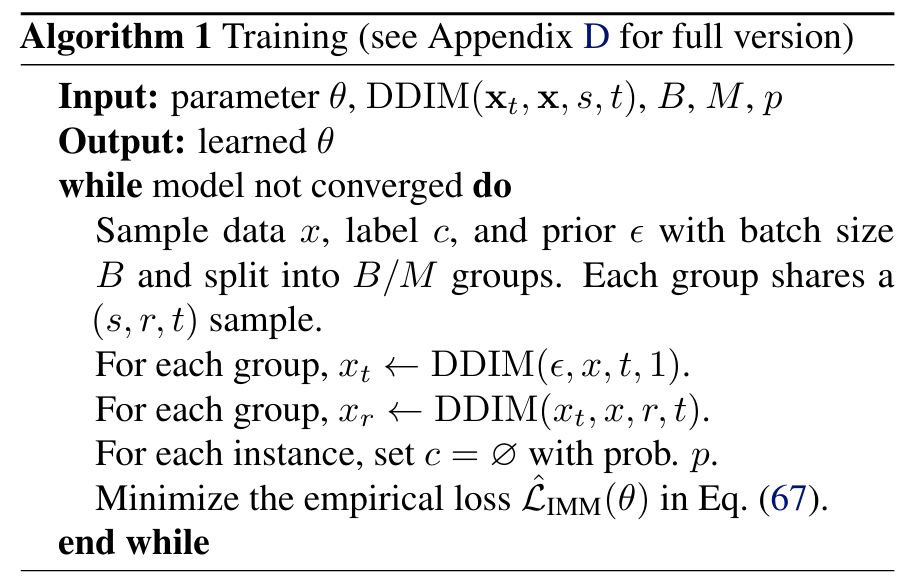

New method to train flow maps without any pretrained flow matching/diffusion models!

High-density regions might not be the most interesting areas to visit. Thus, they define a new Riemannian metric for diffusion models relying on the Jacobian of the score

Join on Sept 11 at 15:00 CET! www.ri.se/en/learningm...

Also really looking forward to the poster sessions and all the exciting conferences on the program!

📄 hal.science/hal-05140421

📄 Ambient diffusion Omni: arxiv.org/pdf/2506.10038

📄 Ambient Proteins: www.biorxiv.org/content/10.1...

Energy-Based Models for Generative Modeling" by Michal Balcerak et al. arxiv.org/abs/2504.10612

I'm not sure EBM will beat flow-matching/diffusion models, but this article is very refreshing.

They propose an algorithm to traverse high-density regions when interpolating between two points in a diffusion model latent space.

📄 https://arxiv.org/abs/2504.11172

I'm curious about its modality translation capabilities 👀

📄 https://arxiv.org/abs/2504.11171

🐍 https://huggingface.co/ibm-esa-geospatial

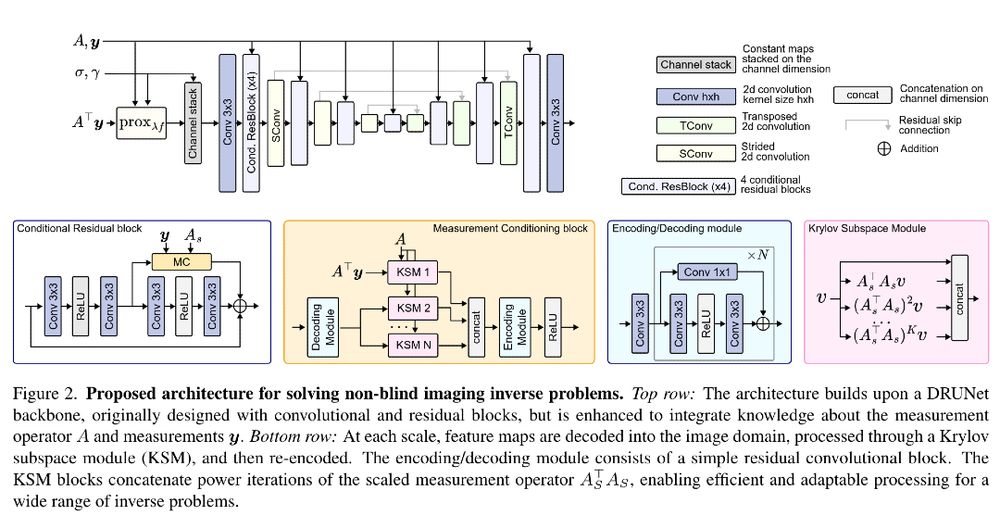

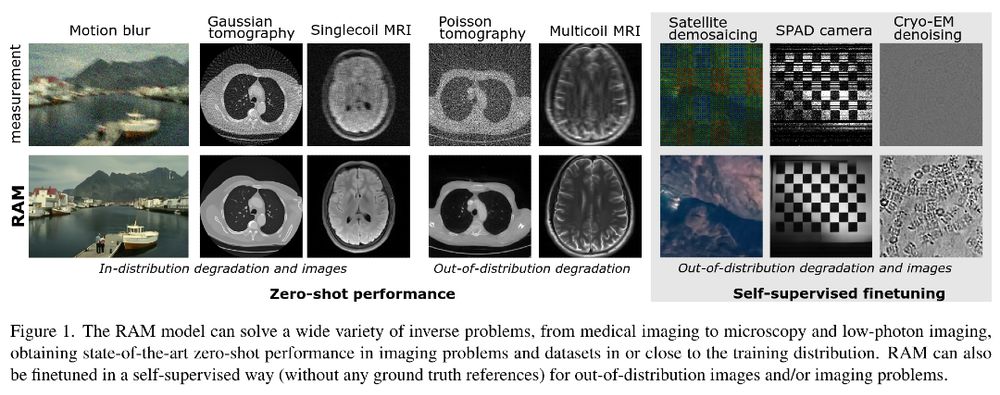

📰 https://arxiv.org/abs/2503.08915

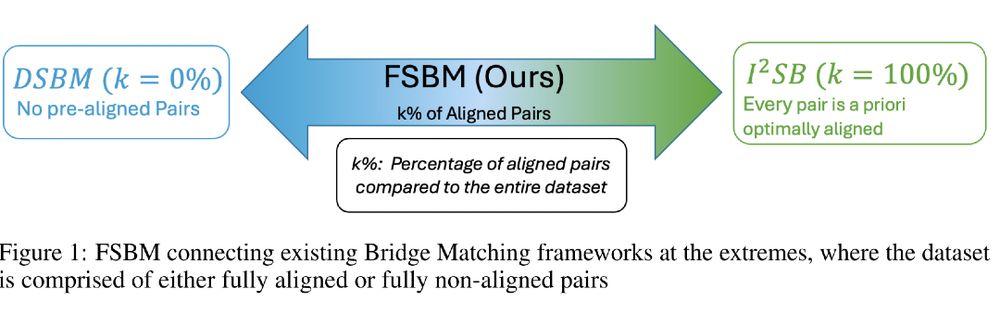

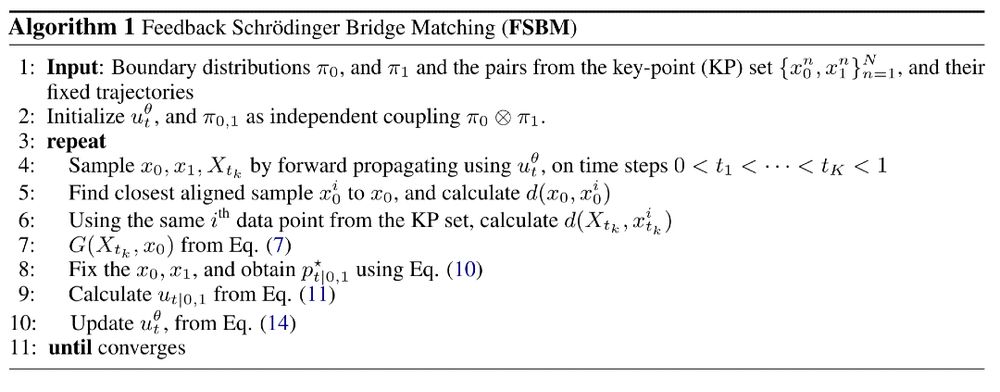

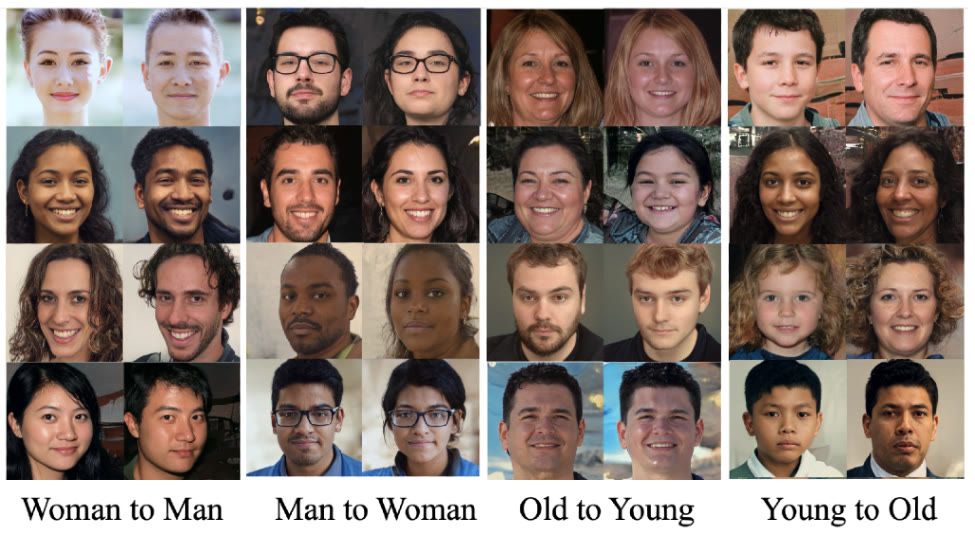

Feedback Schrödinger Bridge Matching introduces a new method to improve transfer between two data distributions using only a small number of paired samples!

📄 arxiv.org/abs/2503.07565

🌍 lumalabs.ai/news/inducti...

📄 arxiv.org/abs/2502.09509

🐍 github.com/zelaki/eqvae
