Prev: @CornellORIE, @MSFTResearch, @IBMResearch, @uoftmie 🌈

Come chat with Haotian at poster W-515 to learn about our work on automatic equivalence checking for optimization models!

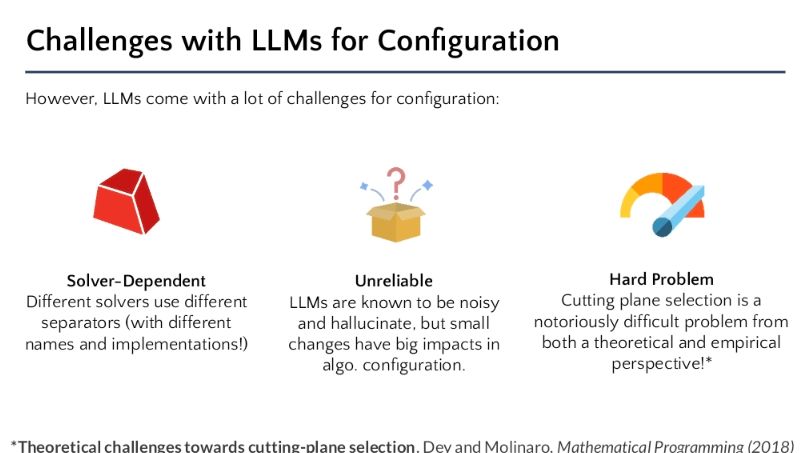

In this paper, we show that we can, thanks to Large Language Models! Why LLMs? They can identify useful optimization structure and have a lot of built-in math programming knowledge!

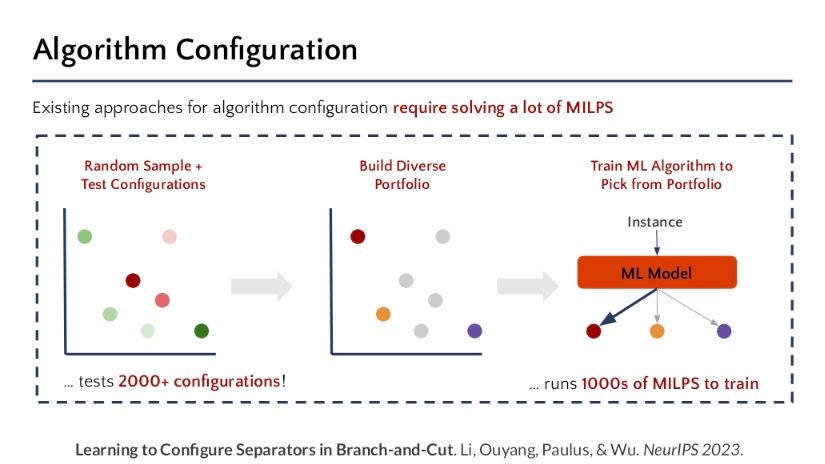

Existing approaches for algorithm configuration require solving a ton of MILPs, leading to days of compute.
