Formerly T&S Policy at TikTok, Analyst at Global Disinformation Index, Uni of Glasgow, Dublin City Univ, Washington Ireland Programme

#AWS us-east-1 outage took down #Signal and chunks of the internet. The culprit was too many critical operations crammed into a single AWS data center cluster in Northern Virginia.

How often is it used in the 🇳🇱 election campaign, and by whom?

Check out colleague @favstats.eu and @meinungsfuehrer.bsky.social’s AI campaign tracker #tk2025

www.campaigntracker.nl/en/

Fortunately, what @samgregory.bsky.social told me did make me feel better. We discuss what technologists can do to help protect our shared reality:

open.spotify.com/episode/6HE6...

MSTS is exciting because it tests for safety risks *created by multimodality*. Each prompt consists of a text + image that *only in combination* reveal their full unsafe meaning.

🧵

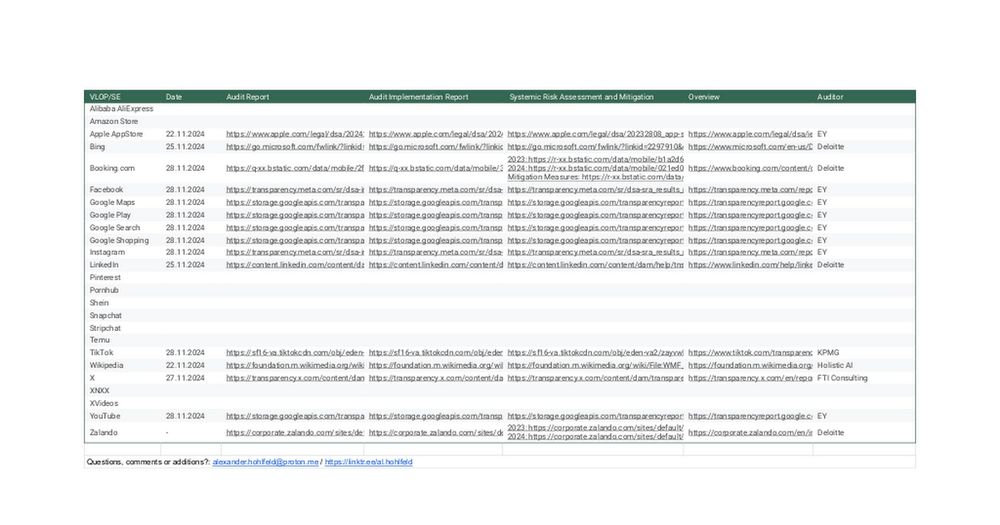

Huge shift in platform policy.

Check this overview ⬇️

X, Facebook, Instagram, Google, YouTube, TikTok are there

docs.google.com/spreadsheets...

Here's to brighter skies ahead...
