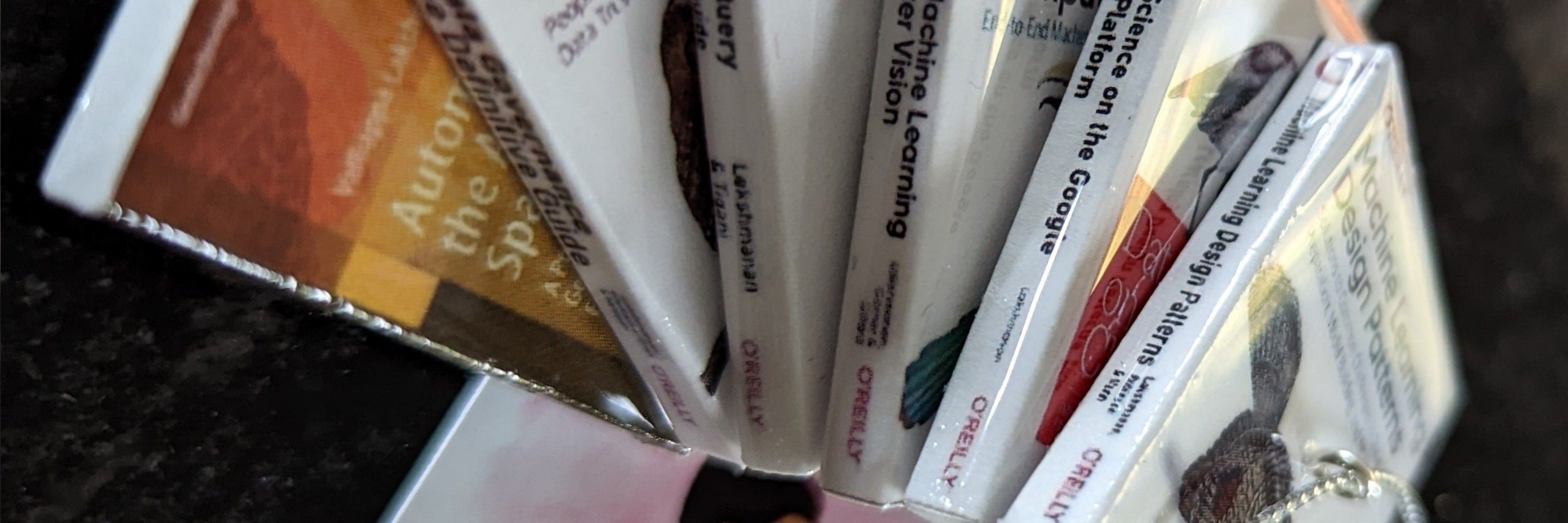

📚 O'Reilly: BigQuery, ML Design Patterns, Data Science 👨🏫Coursera 🌪️Ex: @googlecloud @NOAA

https://www.vlakshman.com/

github.com/lakshmanok/g...

Looking forward to hearing from you! What did you find helpful? Where can we do better? This is early access, so you still have a chance to make it better for future readers.

g.co/gemini/share...

claude.ai/share/00cb35...

bsky.app/profile/lakl...