London AI and Humanity Project

@laihp.bsky.social

The LAIHP brings together interdisciplinary researchers from academia and industry to investigate human interaction with AI.

https://www.ai-humanity-london.com/

arxiv.org

March 21, 2025 at 10:34 AM

So sorry about this Orpheus! Working on that now!

I’m afraid our talks are not recorded.

March 13, 2025 at 8:10 PM

So sorry about this Orpheus! Working on that now!

I’m afraid our talks are not recorded.

January 30, 2025 at 11:39 PM

Join us also next week!

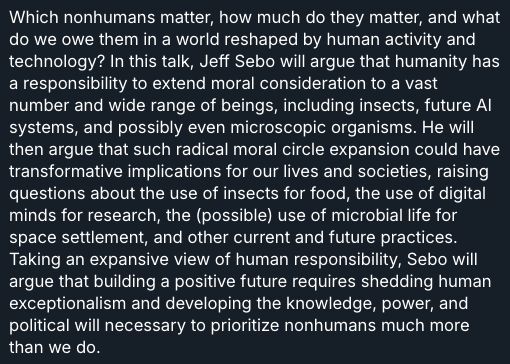

👤 Jeff Sebo (NYU)

📢 "The Moral Circle"

🗓️ Tuesday, February 4, 3-4:30 PM

📍 Join us in person or online! DM for details.

January 27, 2025 at 11:00 AM

Absolutely appreciate that usage, but there is a public bait-and-switch worry here

January 26, 2025 at 12:54 PM

See previous discussion of this article and a response from @mpshanahan.bsky.social here.

Two new pieces from the LAIHP: Responding to Murray Shanahan's "Talking About Large Language Models," Stephen M. Downes, Patrick Forber, and LAIHP director @alexgrz.bsky.social argue that "LLMs are Not Just Next Token Predictors"

LLMs are Not Just Next Token Predictors

LLMs are statistical models of language learning through stochastic gradient descent with a next token prediction objective. Prompting a popular view among AI modelers: LLMs are just next token predic...

arxiv.org

January 20, 2025 at 10:13 AM

January 18, 2025 at 9:43 PM