Working on a book about Citizen Diplomacy.

Living in the woods.

Also - being mom to four boys and one baby girl 🤘🏻

In our deep dive into A2A you'll learn how it works and how to start with it, whether MCP and A2A are competitors, and if Google might use it to index every agent on the internet 👇

Enjoy and leave your feedback!

• Easy cross-enterprise workflows

• Standardized human-in-the-loop collaboration between AI and people

• And even a searchable, internet-scale agents directory

Most AI agents today live in silos. @Google’s A2A protocol aims to be the “common language” that lets them collaborate.

A2A could unlock many possibilities:

And Liquid AI are working on something even more interesting

How can their models beat Transformers? 👇

YouTube www.youtube.com/@RealTuringP...

Spotify open.spotify.com/show/2SQxCUR...

Apple podcasts.apple.com/us/podcast/i...

YouTube channel - 'Inference' -> www.youtube.com/@RealTuringP...

Maps AI value expressions across real-world interactions to inform grounded AI value alignment

arxiv.org/abs/2504.15236

Highlights limitations of next-token prediction and proposes noise-injection strategies for open-ended creativity

arxiv.org/abs/2504.15266

GitHub: github.com/chenwu98/alg...

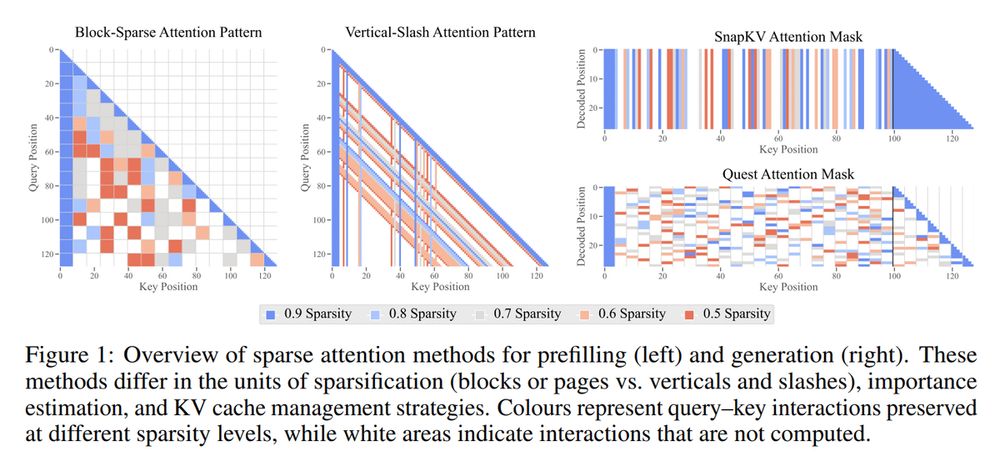

Investigates sparse attention trade-offs and proposes scaling laws for long-context LLMs

arxiv.org/abs/2504.17768

Presents PHD-Transformer to enable efficient long-context pretraining without inflating memory costs

arxiv.org/abs/2504.14992

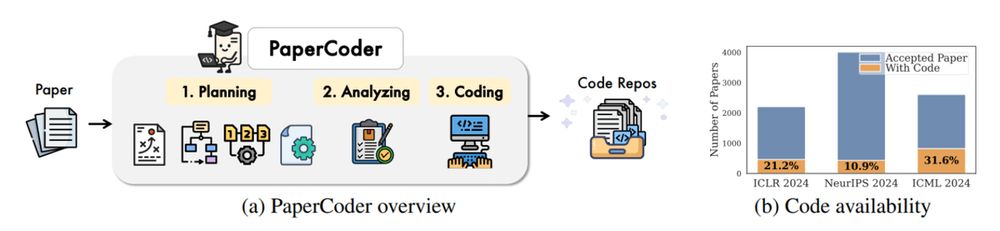

Automates end-to-end ML paper-to-code translation with a multi-agent framework

arxiv.org/abs/2504.17192

Code: github.com/going-doer/P...

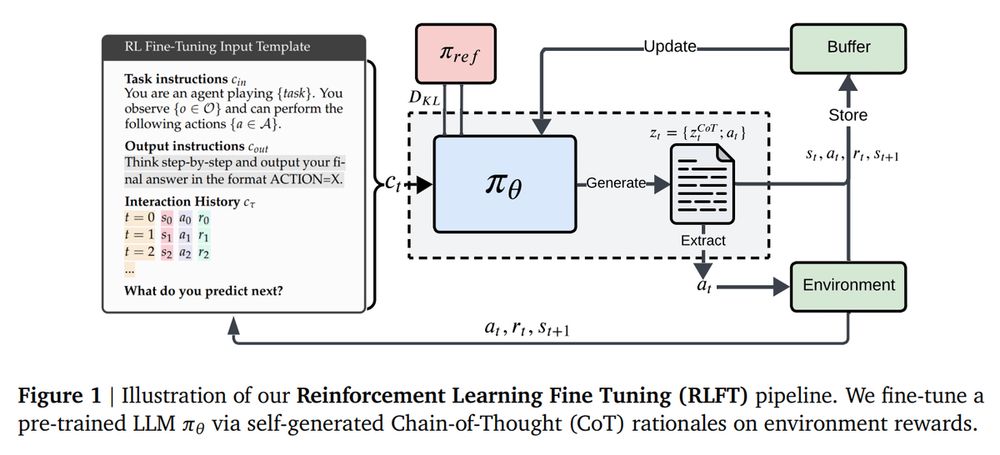

Analyzes how RL fine-tuning improves exploration and decision-making abilities of LLMs

arxiv.org/abs/2504.16078

Introduces a method for self-evolving LLMs at test-time using reward signals without labeled data

arxiv.org/abs/2504.16084

GitHub: github.com/PRIME-RL/TTRL

www.turingpost.com/p/fod98

Advances multimodal reasoning with a hybrid RL paradigm balancing reward guidance and rule-based strategies.

arxiv.org/abs/2504.16656

Model: huggingface.co/Skywork/Skyw...

It's a generative verifier that scales step-wise reward modeling with minimal supervision.

arxiv.org/abs/2504.16828

GitHub: github.com/mukhal/think...

Releases an open-source, high-speed OCR model supporting 90+ languages, with LaTeX formatting and structured output for real-world document processing.

x.com/VikParuchuri...

Develops a highly token-efficient multilingual LLM using specialized cross-lingual techniques for Korean, Japanese, and more.

huggingface.co/papers/2504....

Model: huggingface.co/trillionlabs...

Expands vision-language models to handle long-context video and image comprehension with specialized training tricks and efficient scaling.

arxiv.org/abs/2504.15271

Project page: nvlabs.github.io/EAGLE/

Builds state-of-the-art mathematical reasoning models with OpenMathReasoning dataset.

arxiv.org/abs/2504.16891
