Code generation, math, optimization

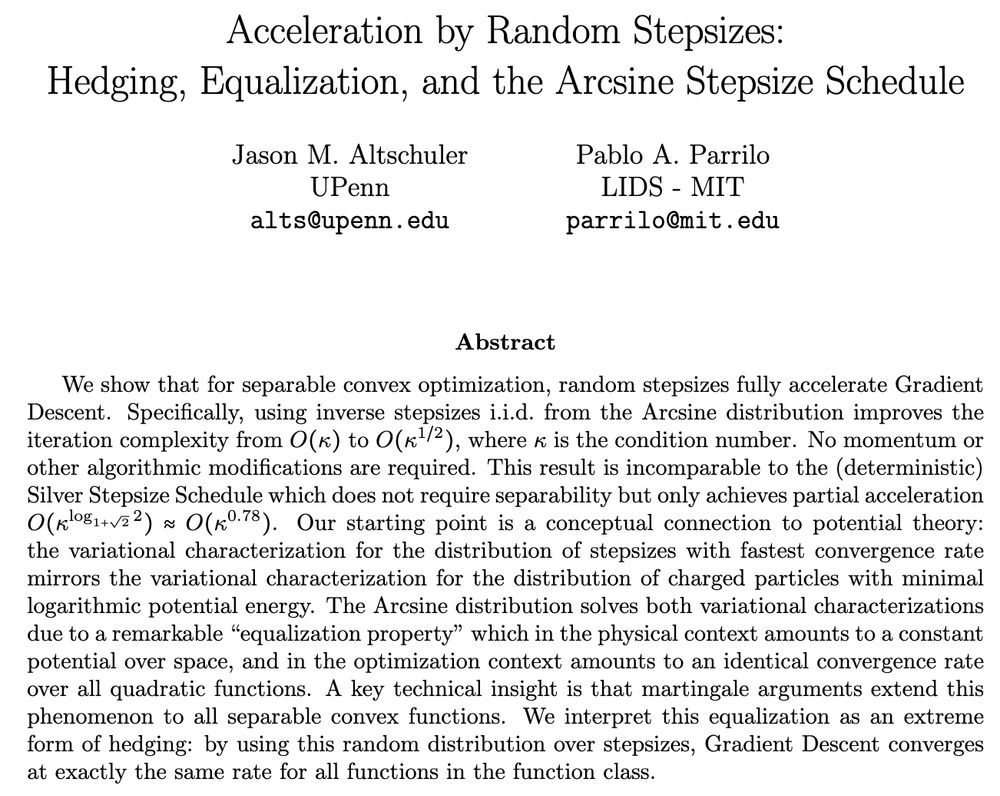

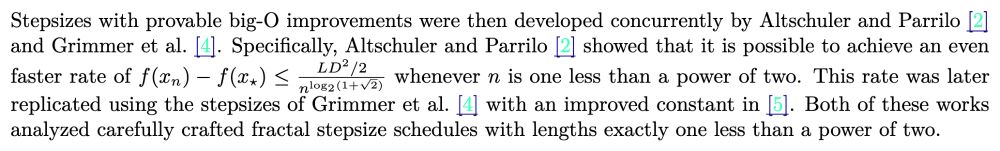

arxiv.org/abs/2412.05790

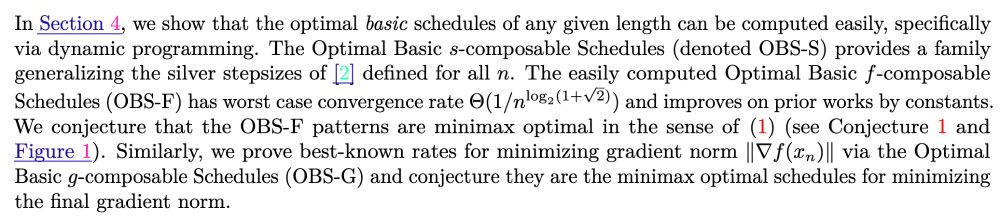

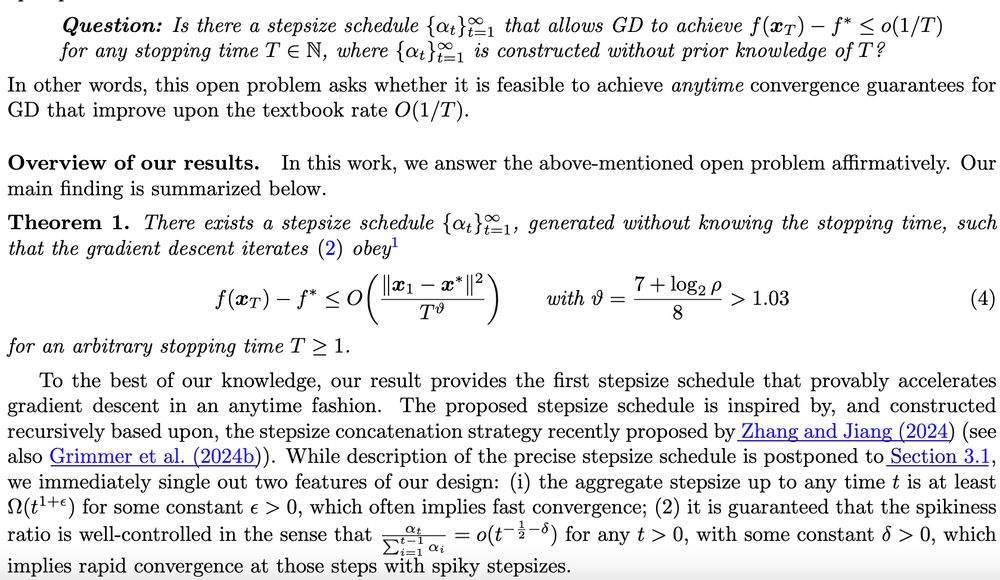

arxiv.org/abs/2411.17668

I am still waiting to see a version with adaptive stepsizes though 👀
