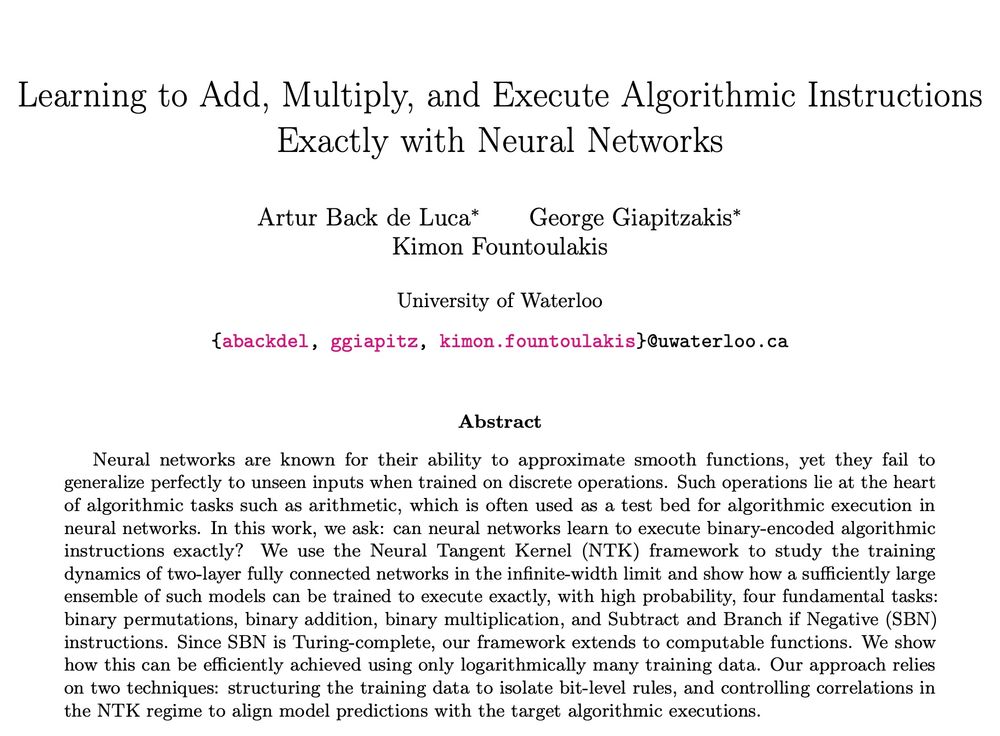

Machine Learning

Lab: opallab.ca

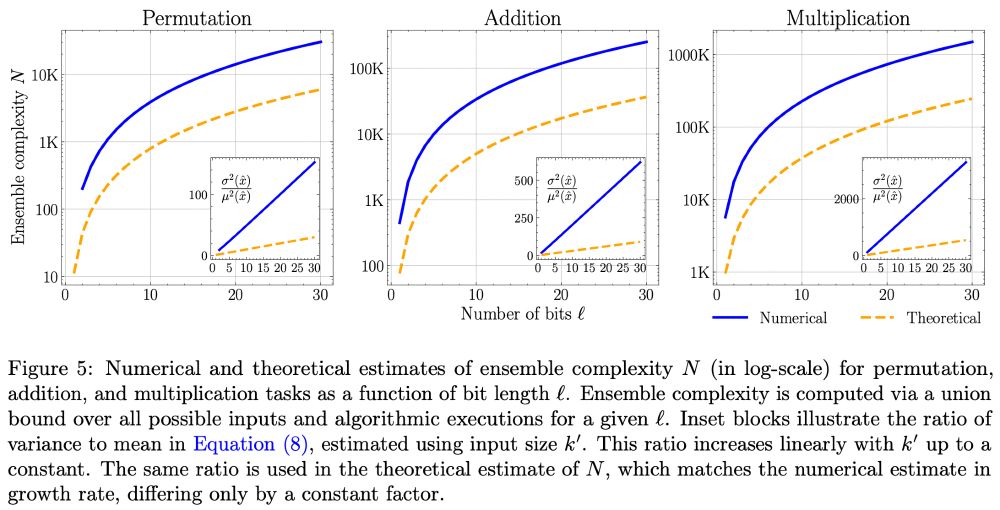

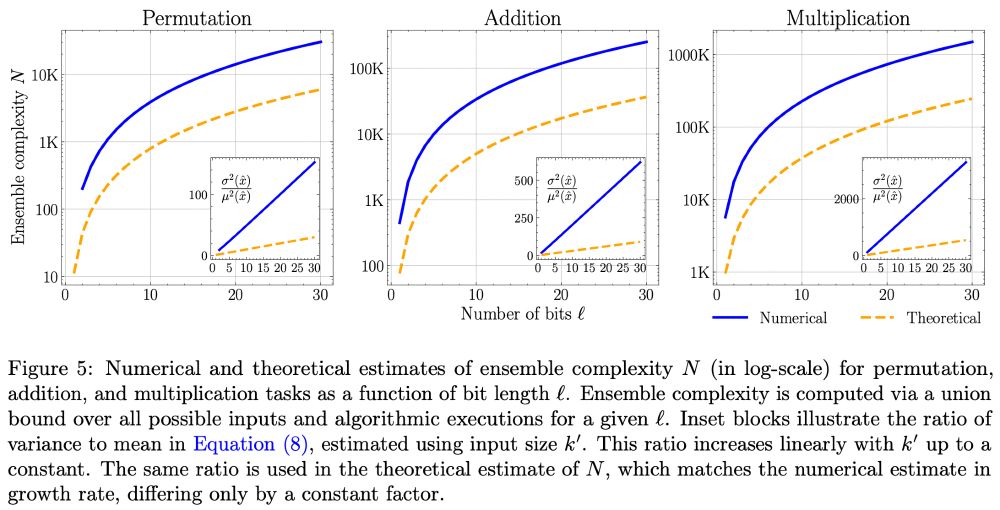

Link to the paper: arxiv.org/abs/2510.04115

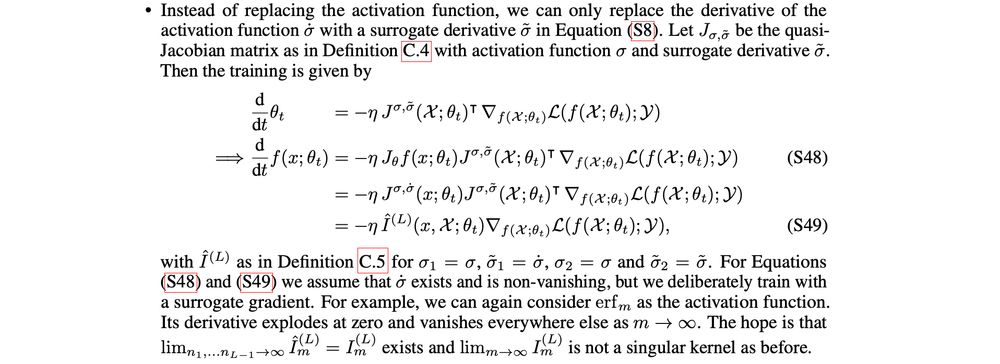

They extend the NTK framework to activation functions that have finitely many jumps.

Link: dspacemainprd01.lib.uwaterloo.ca/server/api/c...

Relevant papers:

1) Local Graph Clustering with Noisy Labels (ICLR 2024)

I compiled a list of theoretical papers related to the computational capabilities of Transformers, recurrent networks, feedforward networks, and graph neural networks.

Link: github.com/opallab/neur...

Link: weightagnostic.github.io/papers/turin...

Link: arxiv.org/abs/2502.16763

Link: arxiv.org/abs/2412.02975

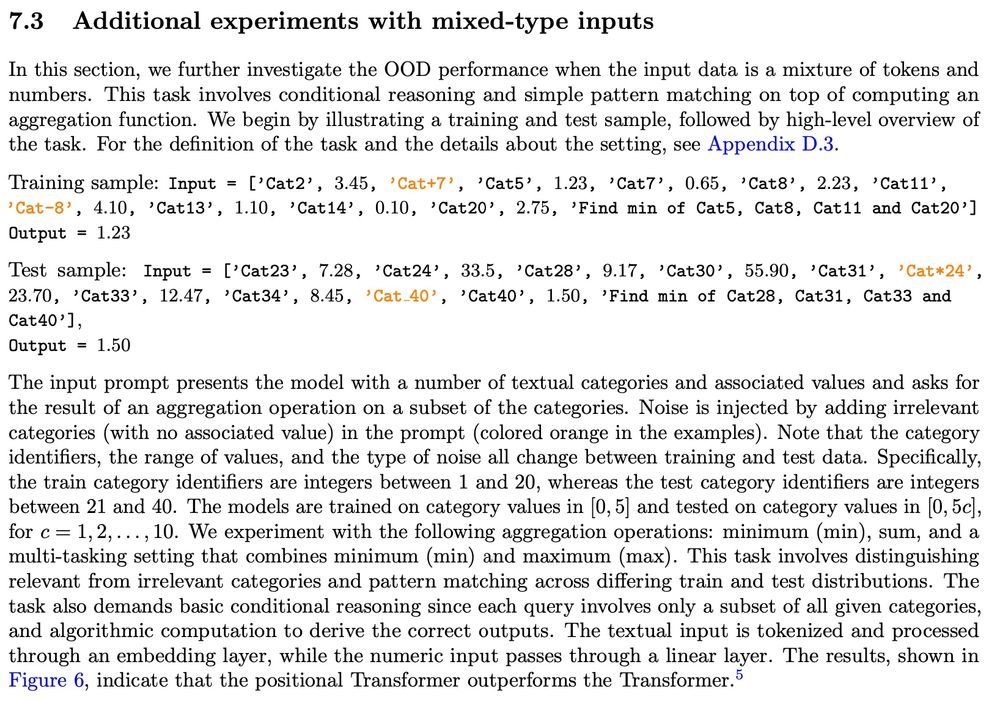

We study the effect of using only fixed positional encodings in the Transformer architecture for computational tasks. These positional encodings remain the same across layers.

Shenghao Yang passed his PhD defence today. Shenghao is the second PhD student to graduate from our group. I am very happy for Shenghao and the work that he has done!

Link: openreview.net/pdf?id=MSsQD...

Link: uwspace.uwaterloo.ca/items/291d10...
