https://www.cs.cmu.edu/~kmcrane/

But “fair” really just means you get the frequencies you expect (say, 1/4, 1/4 & 1/2)

We can now design fair dice with any frequencies—and any shape! 🐉

hbaktash.github.io/projects/put...
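
To make "you get the frequencies you expect" concrete: one standard way to test a die against target frequencies is Pearson's chi-square statistic. The sketch below is a generic check, not the design method from the linked project. The tallies are made-up illustrative numbers for 400 hypothetical rolls.

```python
def chi_square(counts, target):
    """Pearson's chi-square statistic comparing observed counts to target frequencies."""
    n = sum(counts)
    stat = 0.0
    for observed, p in zip(counts, target):
        expected = n * p  # how many times this face "should" come up
        stat += (observed - expected) ** 2 / expected
    return stat


target = [0.25, 0.25, 0.5]  # the 1/4, 1/4 & 1/2 frequencies from the post
counts = [98, 104, 198]     # hypothetical tallies from 400 rolls (illustrative only)
print(chi_square(counts, target))
```

Small values mean the observed counts sit close to the targets. With three outcomes (2 degrees of freedom), values above roughly 5.99 would be unlikely (p < 0.05) for a die that truly has these frequencies.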

It converts Markdown and LaTeX to Unicode that can be used in “tweets”, and automatically splits long threads. Try it out!

keenancrane.github.io/LaTweet/
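
A rough sketch of the two jobs described above, assuming a tiny hand-picked symbol table and a 280-character limit (both are assumptions; this is not LaTweet's actual code or mapping): swap a few LaTeX commands for Unicode characters, then greedily pack words into posts.

```python
import re

# Hypothetical, hand-picked LaTeX-to-Unicode table (NOT LaTweet's real mapping).
SYMBOLS = {r"\alpha": "α", r"\beta": "β", r"\pi": "π", r"\in": "∈", r"\infty": "∞", r"\leq": "≤"}
SUPERSCRIPT_DIGITS = str.maketrans("0123456789", "⁰¹²³⁴⁵⁶⁷⁸⁹")


def to_unicode(tex: str) -> str:
    """Replace a few LaTeX commands and single-digit exponents with Unicode characters."""
    # Longest commands first, so the prefix \in cannot clobber \infty.
    for cmd in sorted(SYMBOLS, key=len, reverse=True):
        tex = tex.replace(cmd, SYMBOLS[cmd])
    # x^2 -> x², but only for a single digit right after the caret.
    return re.sub(r"\^(\d)", lambda m: m.group(1).translate(SUPERSCRIPT_DIGITS), tex)


def split_thread(text: str, limit: int = 280) -> list[str]:
    """Greedily pack whole words into posts of at most `limit` characters.

    A single word longer than `limit` ends up alone in an over-long post.
    """
    posts: list[str] = []
    current = ""
    for word in text.split():
        candidate = word if not current else current + " " + word
        if len(candidate) <= limit or not current:
            current = candidate
        else:
            posts.append(current)
            current = word
    if current:
        posts.append(current)
    return posts


print(to_unicode(r"x^2 \leq \infty"))  # x² ≤ ∞
```

Note that `len` counts Unicode code points, which only approximates how a given platform counts characters.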

The encoder f maps high-dimensional data x to low-dimensional latents z. The decoder tries to map z back to x. We *always* learn a k-dimensional submanifold M, which is reliable only where we have many samples z.
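
A minimal sketch of the point about M, assuming the simplest possible case: a linear autoencoder from ℝ² to ℝ¹ with a fixed unit direction `u` (a hypothetical choice, not a trained network). Whatever x goes in, the reconstruction g(f(x)) lands on the line M = span(u), so k = 1 here. The decoder name `g` is also an assumption.

```python
import math

# Hypothetical linear autoencoder from R^2 to R^1: the unit direction u is a fixed
# choice for illustration, not something learned from data.
u = (math.cos(0.3), math.sin(0.3))


def f(x):
    """Encoder: map a 2D data point x to a 1D latent z = <u, x>."""
    return x[0] * u[0] + x[1] * u[1]


def g(z):
    """Decoder (name assumed): map z back to R^2; every output lies on M = span(u)."""
    return (z * u[0], z * u[1])


def off_manifold(p):
    """2D cross product with u: zero (up to rounding) exactly when p lies on M."""
    return p[0] * u[1] - p[1] * u[0]


for x in [(1.0, 2.0), (-0.5, 0.7), (3.0, -1.0)]:
    x_hat = g(f(x))
    print(x, "->", x_hat, "off M by", off_manifold(x_hat), "; input off M by", off_manifold(x))
```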

If we're lucky, someone bothers to draw arrows that illustrate the main point: we want the output to look like the input!

They confuse the representation (matrices) with the objects represented by those matrices (linear maps… or is it a quadratic form?)
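
To illustrate why the distinction matters: the same matrix A transforms differently depending on what it represents. Under a change of basis with matrix P, a linear map becomes P⁻¹AP, while a quadratic form becomes PᵀAP. In the 2×2 example below (A and P are arbitrary choices), the two results disagree because P is not orthogonal.

```python
def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def transpose(A):
    return [[A[j][i] for j in range(2)] for i in range(2)]


def inverse(A):
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return [[A[1][1] / det, -A[0][1] / det], [-A[1][0] / det, A[0][0] / det]]


A = [[2.0, 0.0], [0.0, 1.0]]
P = [[1.0, 1.0], [0.0, 1.0]]  # columns are the new basis vectors (a shear, not orthogonal)

as_linear_map = matmul(inverse(P), matmul(A, P))        # P^{-1} A P
as_quadratic_form = matmul(transpose(P), matmul(A, P))  # P^T A P
print(as_linear_map)
print(as_quadratic_form)
```

The linear-map version keeps the trace (3) and eigenvalues (2 and 1) of A, as similar matrices must; the quadratic-form version has trace 5. Treating "the matrix" as the object itself gives inconsistent answers.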

In short: we emphasize how autoencoders are implemented—but not always what they represent (and some of the implications of that representation).🧵

Like any savant, you have to roll with the quirks. 😉

Likewise, the height of the rectangles in the top diagram doesn’t literally correspond to the dimension of the data and latent vectors (the ratio is often more extreme)

- The encoder tries to compress the data into a lower-dimensional space (left to right)

- The decoder attempts to invert the encoder (right to left)

- There's inevitable error in reconstructing x from its latent code (dashed line between x and x̂)

But it misses some critical features (like cycle consistency). And often adds other nutty stuff—like drawing functions as complete bipartite graphs!

The whole idea of an autoencoder is that you complete a round trip and seek cycle consistency—why lay out the network linearly?
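
A minimal sketch of the round trip, assuming a linear encoder f: ℝ³ → ℝ² with orthonormal rows e1, e2 and decoder g(z) = z₁e1 + z₂e2 (all hypothetical). Going latent → data → latent is cycle consistent, f(g(z)) = z, while data → latent → data only returns the projection of x onto the decoder's image; the leftover is the reconstruction error between x and x̂.

```python
import math

# Hypothetical linear autoencoder R^3 -> R^2; e1 and e2 are orthonormal (0.6² + 0.8² = 1).
e1 = (1.0, 0.0, 0.0)
e2 = (0.0, 0.6, 0.8)


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def f(x):
    """Encoder: coordinates of x along e1 and e2."""
    return (dot(e1, x), dot(e2, x))


def g(z):
    """Decoder (name assumed): z1*e1 + z2*e2, a point on the plane spanned by e1, e2."""
    return tuple(z[0] * a + z[1] * b for a, b in zip(e1, e2))


# Latent -> data -> latent: cycle consistent, returns z (up to rounding).
z = (2.0, -1.0)
print(f(g(z)))

# Data -> latent -> data: only the projection of x onto the plane survives.
x = (1.0, 1.0, 1.0)
x_hat = g(f(x))
print(x_hat, "reconstruction error:", math.dist(x, x_hat))
```

Here f(g((2, -1))) gives back (2, -1) up to rounding, while g(f((1, 1, 1))) gives (1, 0.84, 1.12), a distance of 0.2 from x.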

But I do think the first bar should be checking whether you can recover consistent geometry from video—not whether it makes accurate predictions of physics.

Stay tuned for more…👣

Basically we can turn arbitrary objects into fair dice, or make dice which capture the statistics of other objects—like several coin flips.
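
As a small worked example of "the statistics of several coin flips": counting heads in two fair coin flips gives exactly the frequencies 1/4, 1/2, 1/4, the same three values as the 1/4, 1/4 & 1/2 example at the top (in a different order). A die that mimics two coin flips would need those face frequencies.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Enumerate all equally likely outcomes of two fair coin flips: HH, HT, TH, TT.
outcomes = list(product("HT", repeat=2))
heads = Counter(o.count("H") for o in outcomes)
distribution = {k: Fraction(v, len(outcomes)) for k, v in sorted(heads.items())}
print(distribution)  # {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}
```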

github.com/etcorman/Rec...

(C++ version is still in the works…)

www.cs.cmu.edu/~kmcrane/Pro...

And find some supplemental information—including pseudocode—here:

www.cs.cmu.edu/~kmcrane/Pro...

Amazingly, no past quad meshing method could guarantee 90° angles under refinement—until now. #RSP
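
A rough sketch of how one might measure the property in question: compute every corner angle of every quad and report the worst deviation from 90°. This is only a checker, assuming convex quads given as four corner points in order (2D or 3D); it is not the meshing method itself. Checking behavior "under refinement" would mean running it on each successively refined mesh.

```python
import math


def corner_angles(quad):
    """Angle in degrees between the two edges at each of the 4 corners (interior angle for convex quads)."""
    angles = []
    for i in range(4):
        prev_pt, pt, next_pt = quad[i - 1], quad[i], quad[(i + 1) % 4]
        a = [p - q for p, q in zip(prev_pt, pt)]
        b = [p - q for p, q in zip(next_pt, pt)]
        cos_t = sum(ai * bi for ai, bi in zip(a, b)) / (math.dist(prev_pt, pt) * math.dist(next_pt, pt))
        # Clamp to [-1, 1] to guard acos against rounding error.
        angles.append(math.degrees(math.acos(max(-1.0, min(1.0, cos_t)))))
    return angles


def max_right_angle_error(quads):
    """Largest deviation from 90 degrees over all corners of all quads."""
    return max(abs(t - 90.0) for quad in quads for t in corner_angles(quad))


square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
sheared = [(0.0, 0.0), (1.0, 0.0), (1.5, 1.0), (0.5, 1.0)]
print(max_right_angle_error([square]), max_right_angle_error([square, sheared]))
```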

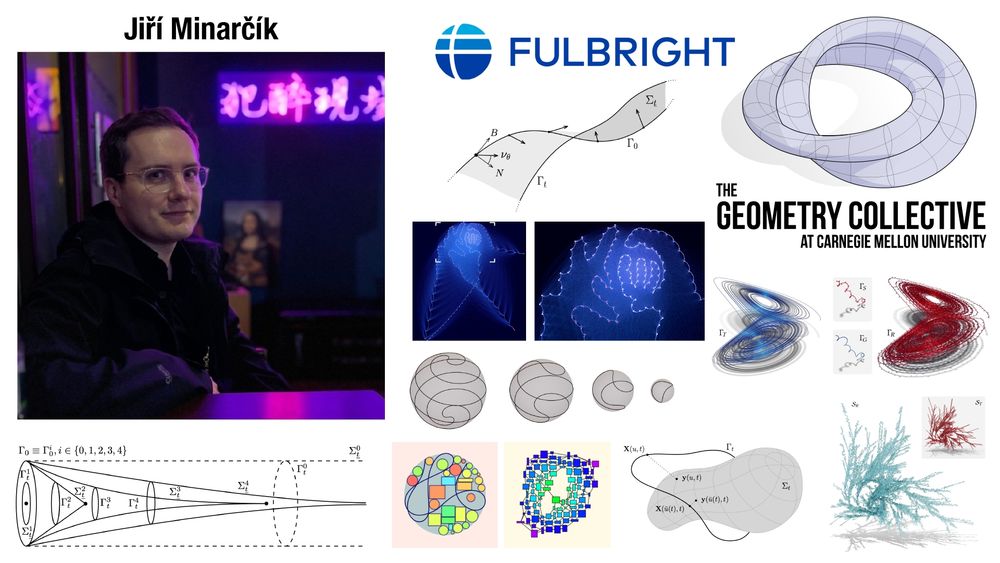

Jiří is a world expert in space curves, and one of the core contributors to Penrose (penrose.cs.cmu.edu). Check out his beautiful work here: minarcik.com

You may or may not be aware of the controversy around the next #SIGGRAPHAsia location, summarized here: www.cs.toronto.edu/~jacobson/we...

If you're concerned, consider signing this letter (docs.google.com/document/d/1...) via this form:

docs.google.com/forms/d/e/1F...

Here’s an alternate link: cs.cmu.edu/~kmcrane/Pro...