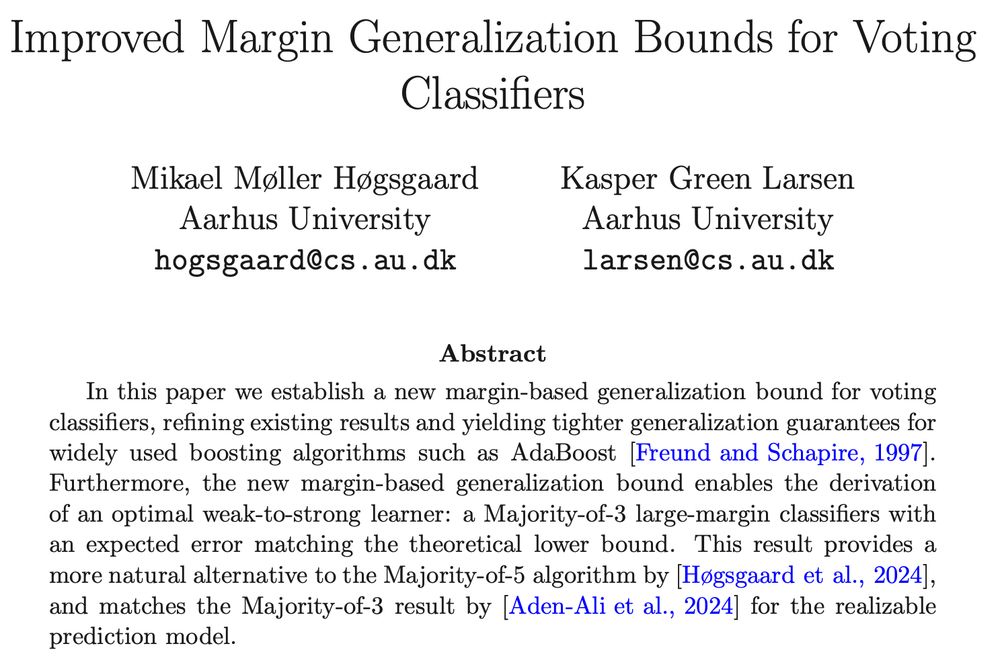

Accepted at COLT'25 🥳

arXiv: arxiv.org/pdf/2502.16462

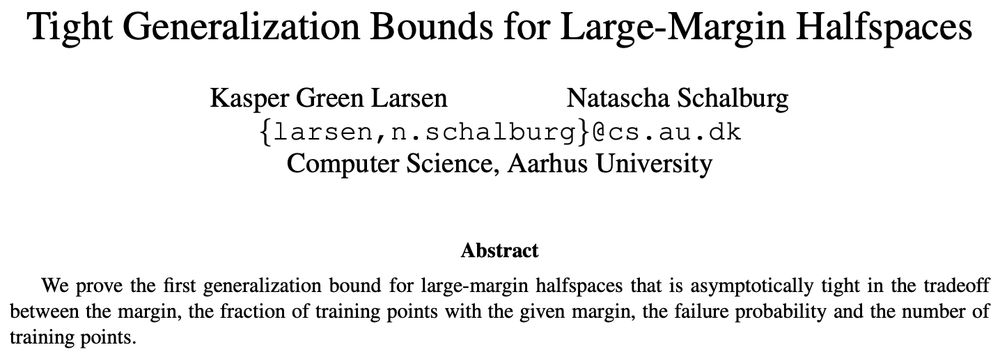

New preprint. And as mentioned yesterday, Mikael is on the job market 😉

New arXiv preprint: arxiv.org/abs/2502.13692

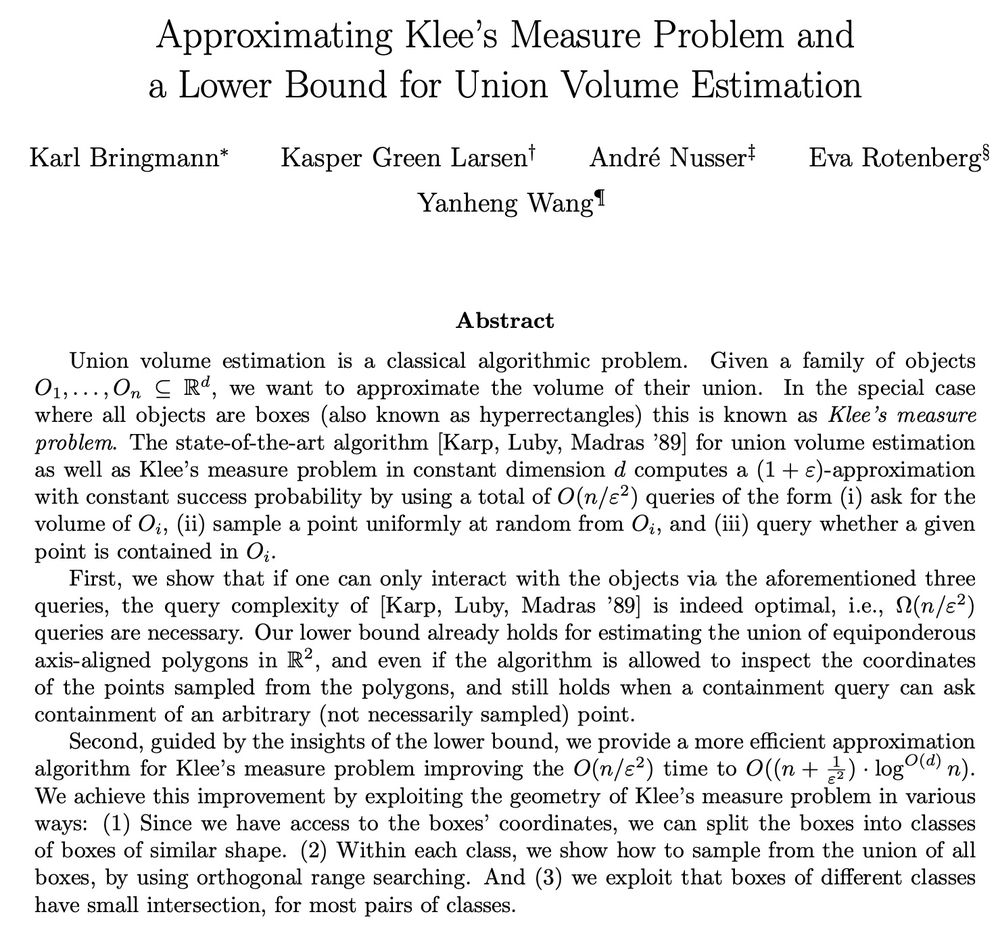

New arXiv preprint: arxiv.org/abs/2502.10516

Accepted at SoCG'25: Symp. on Computational Geometry.

arxiv.org/abs/2410.00996

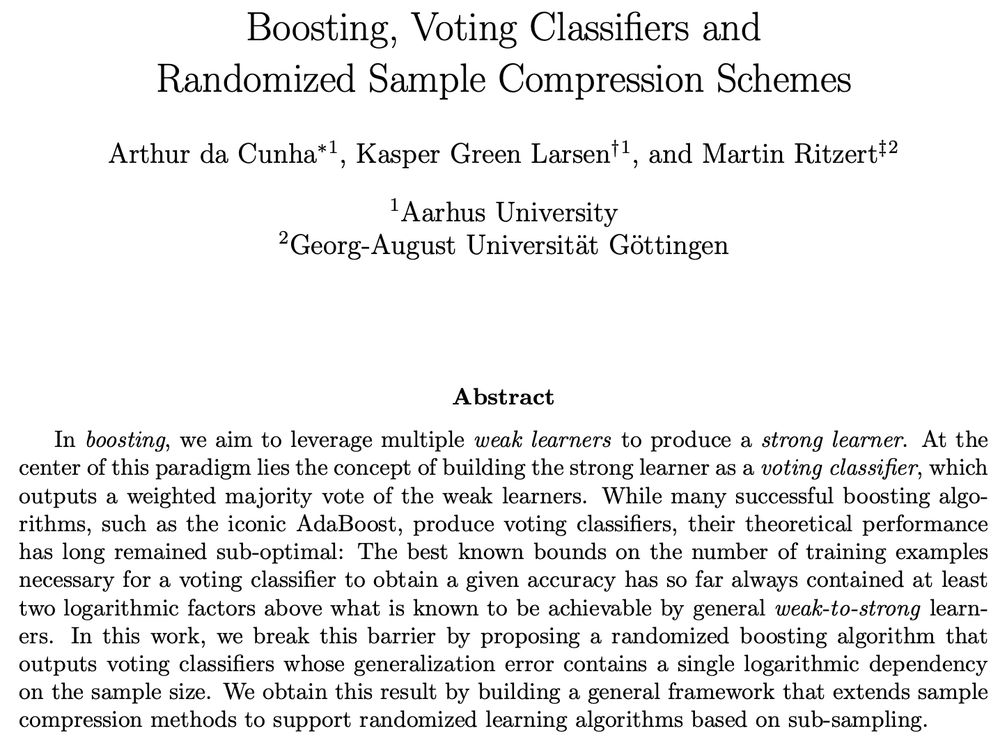

New arXiv preprint: arxiv.org/abs/2501.18388

arxiv.org/abs/2402.02976

Accepted at ALT'25 🥳

youtu.be/BGZJMwhQc4U
