Kartik Chandra

@kartikchandra.bsky.social

This is *so* cool!

Just for fun, I re-implemented the rational belief updating computational model for Experiments 1 + 2 in memo. github.com/kach/memo/bl...

Here's the main model for the "revision" condition, and its predictions with some reasonable parameter settings (should look like fig 2B).

October 31, 2025 at 12:23 PM

The full, uncropped picture explains what is going on here: the sun is reflected by two buildings with different window tints. The reflection from the blue building casts the yellow shadow, and vice versa. (Studying graphics reminds me just how overwhelmingly beautiful the everyday visual world is…)

August 13, 2025 at 5:36 AM

Three years ago, at SIGGRAPH '22 in Vancouver, I took this picture of a pole casting two shadows: one blue and one yellow, from yellow and blue streetlamps respectively.

Today, back in Vancouver for SIGGRAPH '25, I saw the same effect in sunlight! How can one sun cast two colored shadows? Hint in 🧵

August 13, 2025 at 5:36 AM

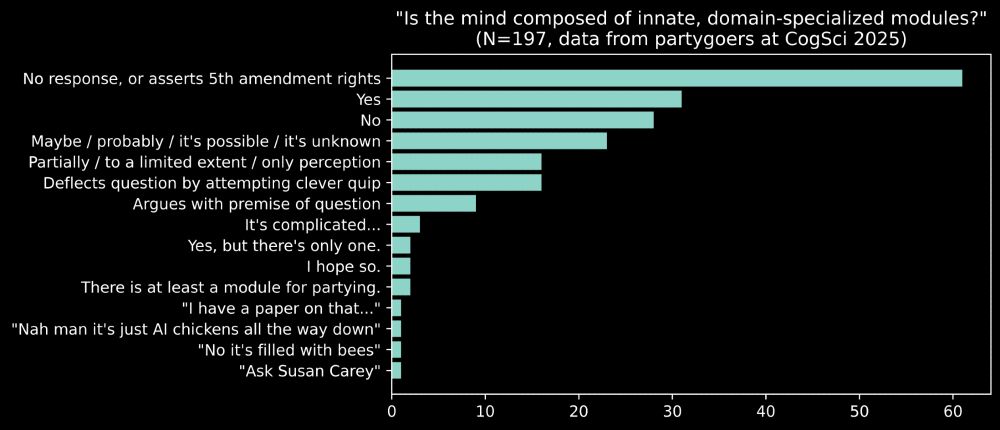

Thanks to a rogue Partiful RSVP form at #cogsci2025, I seem to have collected an unexpectedly large dataset (N=197) of whether cognitive scientists think the mind is composed of innate, domain-specialized modules…

August 2, 2025 at 4:56 PM

Here is a little inverse graphics puzzle: The no-parking sign on my side of Vassar Street casts its shadow in a dramatically different direction from the leafless tree on the far side of the street — almost 90° apart. How is that possible, given that the sun casts parallel rays? (Hints in thread.)

June 5, 2025 at 2:27 PM

There's much more to say about this—especially about why we think these ideas are important to graphics. For more, see our paper arxiv.org/abs/2409.13507 or Matt's upcoming talk at SIGGRAPH Asia.

In the meantime, enjoy a video where every sound effect is a vocal imitation produced by our method! :)

November 30, 2024 at 8:29 PM

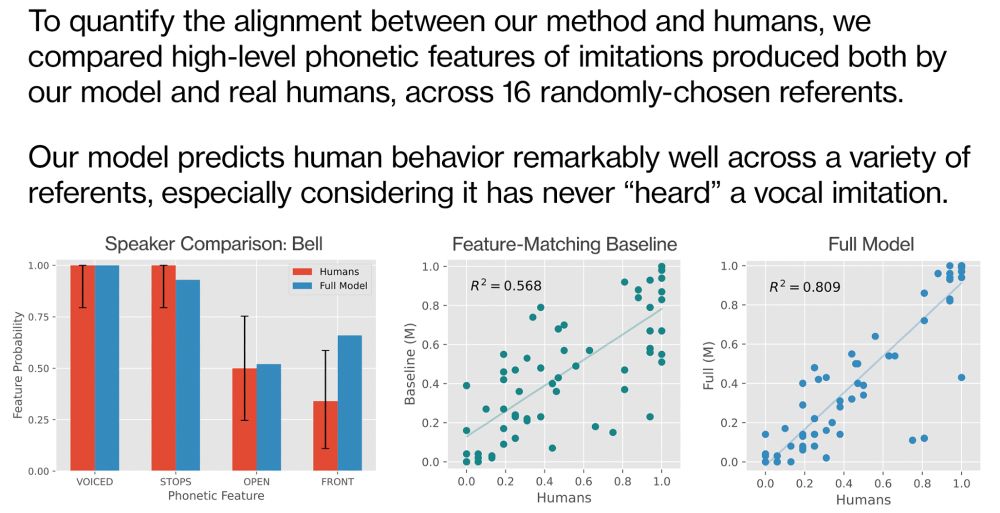

When compared to actual vocal imitations produced by actual humans, our model predicts people's behavior quite well…

But we never "trained" our model on any dataset of human vocal imitations! Human-like imitations emerged simply from encoding basic principles of human communication into our model.

November 30, 2024 at 8:29 PM

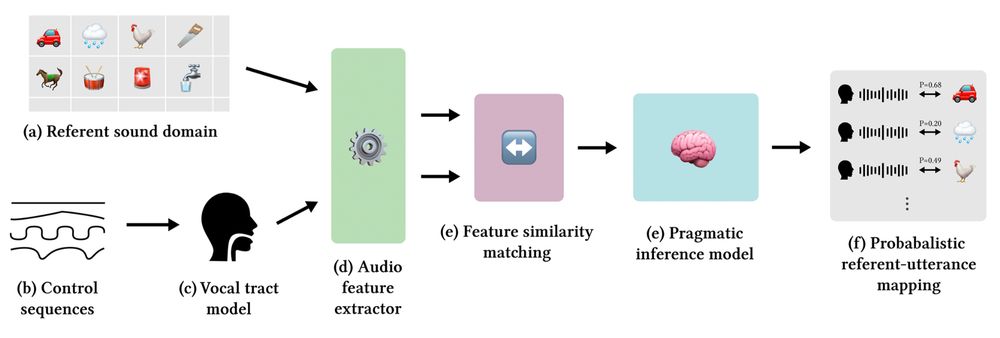

We designed a method for producing human-like vocal imitations of real-world sounds. It works by combining models of the human vocal tract (like "Pink Trombone"), human hearing (via feature extraction), and human communicative reasoning (the "Rational Speech Acts" framework from cognitive science).

November 30, 2024 at 8:29 PM
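
For readers unfamiliar with the "Rational Speech Acts" idea mentioned above, here is a minimal toy sketch of it in Python. It is not the method from the paper: the real system uses a vocal tract simulator and auditory features, whereas this sketch assumes a tiny hand-written similarity table (`SIMILARITY`, with made-up numbers) and a hypothetical rationality parameter `alpha`. It only shows the core recursion, in which a speaker picks the imitation that a literal listener would most likely map back to the intended sound.

```python
import math

# Hypothetical toy domain: two sounds and three candidate vocal imitations.
SOUNDS = ["crow", "bell"]
IMITATIONS = ["caw", "dong", "aah"]

# Assumed stand-in for "how well imitation u matches sound s" (made-up numbers).
SIMILARITY = {
    "caw":  {"crow": 0.9, "bell": 0.1},
    "dong": {"crow": 0.1, "bell": 0.9},
    "aah":  {"crow": 0.5, "bell": 0.5},
}


def normalize(weights):
    """Scale non-negative weights so they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]


def literal_listener(imitation):
    """L0(sound | imitation), proportional to similarity under a uniform prior."""
    probs = normalize([SIMILARITY[imitation][s] for s in SOUNDS])
    return dict(zip(SOUNDS, probs))


def speaker(sound, alpha=4.0):
    """S1(imitation | sound), proportional to exp(alpha * log L0(sound | imitation))."""
    scores = [
        math.exp(alpha * math.log(literal_listener(u)[sound]))
        for u in IMITATIONS
    ]
    return dict(zip(IMITATIONS, normalize(scores)))


if __name__ == "__main__":
    for s in SOUNDS:
        print(s, speaker(s))
```

With these assumed numbers, the speaker strongly prefers "caw" for the crow and "dong" for the bell, and rarely chooses the ambiguous "aah". Raising `alpha` makes that preference sharper.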

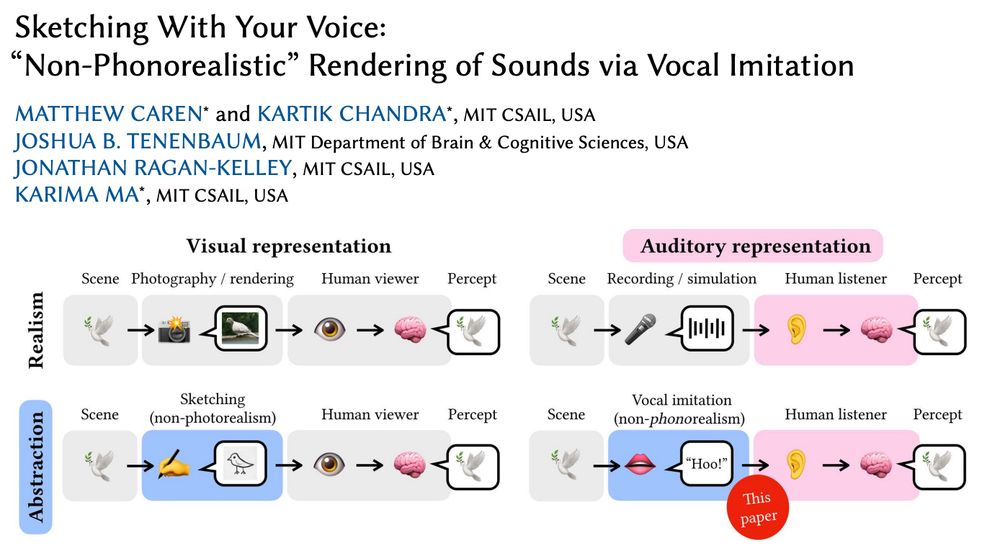

We are interested in *vocal imitation*: how we use our voices to "sketch" and communicate real-world sounds (e.g. crow → "caw," bell → "dong"). It's effortless, intuitive, and we do it all the time.

Some of my favorite examples are from callers describing engine sounds on the radio show "Car Talk"…

November 30, 2024 at 8:29 PM

Graphics has long studied how to make:

(1) realistic images 📷

(2) non-photorealistic images ✍️

(3) realistic sounds 🎤

What about (4) "non-phono-realistic" sounds?

What could that even mean?

Next week at SIGGRAPH Asia, MIT undergrad Matt Caren will present our proposal… 🧵

arxiv.org/abs/2409.13507

November 30, 2024 at 8:29 PM

Oooh, that reminded me of my own initial foray into graphics research… 👀

November 26, 2024 at 11:58 PM

Last night in Cambridge I took these two pictures of the same tree. To me there is something almost overwhelmingly beautiful about how from picture one to two, the branches appear to wrap around the streetlight and make it a glinted wooden halo. (Why does this happen? The Fresnel effect, I think…)

November 28, 2023 at 1:24 PM

One of the joys of studying visual perception is that you can't stop seeing "seeing" everywhere you go. Here I am on an American Airlines flight wondering if there are any T's hidden among those L's. (Figure from Bergen & Landy, 1991).

November 27, 2023 at 1:58 PM
