artintech.substack.com

www.jordipons.me

We submitted an interactive & generative AI music piece.

We used AI for:

- Sound design

- Interactive playback

More details about:

- Human-AI co-creation process

- Name of the song

- Videoclip

- Live performance

👉 artintech.substack.com/p/our-ai-son...

- Singles

- Albums

- Performances

- Installations

- AI voices

- Operas

- Soundtracks

- AI composition

- Co-composition

- Sound design

- Lyrics generation

- Translation

📄 Paper (arXiv)

📂 Database (GitHub)

🎥 Video

👉 artintech.substack.com/p/report-art...

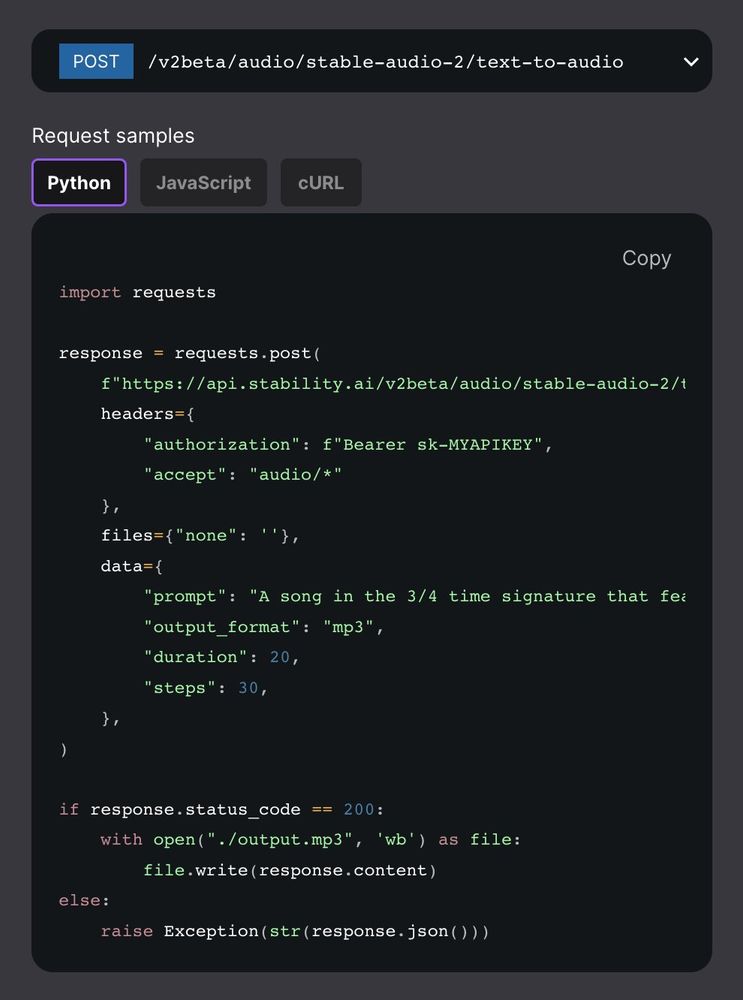

Based on adversarial post-training, it does not rely on distillation or CFG

Runtime is reduced to milliseconds on GPUs or seconds on CPUs

Weights huggingface.co/stabilityai/...

Blog stability.ai/news/stabili...

Paper arxiv.org/abs/2505.08175

In the language of their time, 'indeterminacy' is akin to what we now call 'generative'—where some aspects of the composition are left to chance (not determined).

rohandrape.net/ut/rttcc-tex...
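
A minimal sketch of that idea, assuming a toy setup that is not taken from the linked text: the composer fixes the material (a pitch set and a duration set) and leaves the ordering to chance. The names `chance_melody`, `PITCHES` and `DURATIONS` are made up for illustration.

```python
import random

def chance_melody(pitches, durations, length, seed=None):
    """Return `length` (pitch, duration) pairs.

    Determined: the available pitches and durations.
    Left to chance: which pitch and duration land at each position.
    """
    rng = random.Random(seed)
    return [(rng.choice(pitches), rng.choice(durations)) for _ in range(length)]

# The determined part of the piece (hypothetical material).
PITCHES = ["C4", "D4", "E4", "G4", "A4"]
DURATIONS = [0.25, 0.5, 1.0]

# Each seed is one "performance" of the same score.
performance_a = chance_melody(PITCHES, DURATIONS, length=8, seed=1)
performance_b = chance_melody(PITCHES, DURATIONS, length=8, seed=2)
```

Every call is a different realization of the same piece, which is roughly what 'generative' means today.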

Thinking of art more as 'seeds' than 'artifacts' — and AI not as a 'tool', but as a 'mirror'.

And also to be aware that the line between audience and author blurs.

More ideas in the full article here 👇

artintech.substack.com/p/the-ai-art...

Beyond Copyright Battles—AI Art 👉 artintech.substack.com/p/ai-art-bey...

and found 5 trends that are shaping current AI music

🐝 Embrace the uncanny

🐝 Multi-genre AI music

🐝 AI music that reflects your culture

🐝 Lazy artwork

🐝 Not much Chinese and African AI music

👉 https://artintech.substack.com/p/my-top-5-ai-music-picks

platform.stability.ai/docs/api-ref...

CPUs go brrrrrrr

- Stage 1 (semantic tokens): dual-tokens language modeling from audio and text conditioning

- Stage 2 (acoustic tokens): vocal and instrumental language modeling from semantic tokens (using residual vector quantizers?)

- Stage 3 (audio): detokenization and upsampling
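
On the residual vector quantizer question in Stage 2: I can't confirm the model uses one, but here is a toy sketch of the general technique. Each stage quantizes whatever the previous stage left over, so one frame becomes a short stack of codebook indices. The codebooks below are random, and all names and sizes are illustrative assumptions.

```python
import random

def nearest(codebook, vec):
    """Index of the codeword closest to vec (squared Euclidean distance)."""
    return min(range(len(codebook)),
               key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], vec)))

def rvq_encode(vec, codebooks):
    """Quantize vec with a stack of codebooks, one index per stage."""
    residual = list(vec)
    indices = []
    for cb in codebooks:
        i = nearest(cb, residual)
        indices.append(i)
        # The next stage only sees what this stage failed to capture.
        residual = [r - c for r, c in zip(residual, cb[i])]
    return indices

def rvq_decode(indices, codebooks):
    """Sum the selected codewords from every stage."""
    out = [0.0] * len(codebooks[0][0])
    for i, cb in zip(indices, codebooks):
        out = [o + c for o, c in zip(out, cb[i])]
    return out

# Toy setup: 3 stages, 16 codewords each, 4-dimensional frames (assumed sizes).
rng = random.Random(0)
DIM, SIZE, STAGES = 4, 16, 3
codebooks = [[[rng.gauss(0, 0.5 ** s) for _ in range(DIM)] for _ in range(SIZE)]
             for s in range(STAGES)]
frame = [rng.gauss(0, 1) for _ in range(DIM)]
codes = rvq_encode(frame, codebooks)   # one index per stage
approx = rvq_decode(codes, codebooks)  # coarse reconstruction of `frame`
```

In a trained codec the codebooks are learned, so later stages add finer detail; with random codebooks this only shows the mechanics.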

- Semantic audio tokens, to reduce training cost

- Dual-tokens (vocal-instrumental) for track-synced vocal-instrumental modeling

- Lyrics-chain-of-thoughts, to progressively generate the whole song in a single context while following the lyrics condition (I don't know what this is)
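
One way to picture the dual-tokens idea, as a sketch only: interleave the vocal and instrumental tokens frame by frame so both tracks stay time-aligned inside a single sequence. Whether the model lays out its tokens exactly like this is my assumption; `interleave` and `deinterleave` are hypothetical helpers.

```python
def interleave(vocal, instrumental):
    """Merge two equally long token streams into one track-synced sequence."""
    if len(vocal) != len(instrumental):
        raise ValueError("both tracks need one token per frame")
    sequence = []
    for v, a in zip(vocal, instrumental):
        # Frame t contributes its vocal token, then its instrumental token.
        sequence.append(("voc", v))
        sequence.append(("inst", a))
    return sequence

def deinterleave(sequence):
    """Split the merged sequence back into its two tracks."""
    vocal = [tok for tag, tok in sequence if tag == "voc"]
    instrumental = [tok for tag, tok in sequence if tag == "inst"]
    return vocal, instrumental

# Made-up token ids for three frames.
vocal_tokens = [11, 12, 13]
instrumental_tokens = [201, 202, 203]
merged = interleave(vocal_tokens, instrumental_tokens)
```

A language model trained on `merged` would predict both tracks in lockstep, and `deinterleave` recovers each track for separate decoding.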

Tokenizing 16kHz speech at very low bitrates.

Inference code: github.com/Stability-AI...

Model code: github.com/Stability-AI...

Model weights: huggingface.co/stabilityai/...

arXiv: arxiv.org/abs/2411.19842

Audio demos: stability-ai.github.io/stable-codec...
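
For a sense of what "very low bitrates" means, a quick back-of-the-envelope sketch. The 16 kHz rate is from the post; the 16-bit PCM baseline and the tokenizer settings (25 frames per second, 1024-entry codebook) are hypothetical numbers for illustration, not the model's actual configuration.

```python
import math

def pcm_bitrate(sample_rate_hz, bits_per_sample=16):
    """Bits per second of uncompressed mono PCM audio."""
    return sample_rate_hz * bits_per_sample

def token_bitrate(frames_per_second, codebook_size, tokens_per_frame=1):
    """Bits per second of a token stream: each token carries log2(codebook_size) bits."""
    return frames_per_second * tokens_per_frame * math.log2(codebook_size)

raw_bps = pcm_bitrate(16_000)                                       # 16 kHz, 16-bit mono
tok_bps = token_bitrate(frames_per_second=25, codebook_size=1024)   # assumed settings
compression = raw_bps / tok_bps
```

With these assumed settings the token stream needs 250 bits per second against 256,000 for raw PCM, about a thousandfold reduction.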

Time to cook up some Christmas bangers!

🔗 jordipons.me/apps/samples/
