Jonas Hübotter

@jonhue.bsky.social

PhD student at ETH Zurich

jonhue.github.io

Paper: arxiv.org/pdf/2410.05026

Joint work with the amazing @marbaga.bsky.social, @gmartius.bsky.social, @arkrause.bsky.social

July 14, 2025 at 7:38 PM

We propose an algorithm that does this by actively maximizing the expected information gain of the demonstrations, with a couple of tricks to estimate this quantity and mitigate forgetting.

Interestingly, this solution is viable even without any information about pre-training!

July 14, 2025 at 7:35 PM
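
To make "expected information gain of the demonstrations" concrete, here is a minimal sketch, assuming a Gaussian-process surrogate with an RBF kernel over hypothetical feature vectors for candidate demonstrations and target tasks. It is not the paper's estimator: it omits the tricks the post mentions for estimating this quantity and mitigating forgetting, and every name in it (`greedy_select`, `info_gain`, `NOISE`, the random features) is hypothetical. It greedily picks the candidate whose noisy observation most increases the mutual information I(f_T; y_S) between the unknown function at the targets and the selected observations.

```python
import numpy as np

def rbf_kernel(A, B, lengthscale=1.0):
    """Squared-exponential kernel matrix between rows of A and rows of B."""
    sq_dists = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq_dists / (2.0 * lengthscale**2))

def posterior_cov(K, targets, selected, noise, jitter=1e-9):
    """Covariance of f at `targets` after noisy observations at `selected`."""
    K_tt = K[np.ix_(targets, targets)] + jitter * np.eye(len(targets))
    if not selected:
        return K_tt  # prior covariance
    K_ts = K[np.ix_(targets, selected)]
    K_ss = K[np.ix_(selected, selected)] + noise * np.eye(len(selected))
    return K_tt - K_ts @ np.linalg.solve(K_ss, K_ts.T)

def info_gain(K, targets, selected, noise):
    """Gaussian mutual information: 0.5 * (log det prior cov - log det posterior cov)."""
    _, logdet_prior = np.linalg.slogdet(posterior_cov(K, targets, [], noise))
    _, logdet_post = np.linalg.slogdet(posterior_cov(K, targets, selected, noise))
    return 0.5 * (logdet_prior - logdet_post)

def greedy_select(K, candidates, targets, budget, noise):
    """Greedily add the candidate whose observation most increases I(f_T; y_S)."""
    selected = []
    for _ in range(budget):
        remaining = [c for c in candidates if c not in selected]
        best = max(remaining, key=lambda c: info_gain(K, targets, selected + [c], noise))
        selected.append(best)
    return selected

NOISE = 0.1  # hypothetical observation-noise variance
rng = np.random.default_rng(0)
cand = rng.normal(size=(20, 2))          # hypothetical candidate-demonstration features
targ = rng.normal(loc=2.0, size=(3, 2))  # hypothetical target-task features
points = np.vstack([cand, targ])
K = rbf_kernel(points, points)
TARGETS = list(range(20, 23))            # rows of K that belong to the targets
picks = greedy_select(K, list(range(20)), TARGETS, budget=4, noise=NOISE)
print(picks)
```

Because mutual information can only grow as observations are added, each greedy step is non-decreasing in information gain; the greedy rule simply spends the budget where the surrogate expects to learn the most about the target tasks.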

Our method significantly improves the performance of large language models (measured by perplexity) and achieves a new state of the art on the Pile benchmark.

If you're interested in test-time training or active learning, come chat with me at our poster session!

April 21, 2025 at 2:40 PM
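
Perplexity is the exponential of the average negative log-likelihood per token, so lower is better. A minimal sketch of the computation, with made-up per-token probabilities (illustrative only, not numbers from the paper):

```python
import math

def perplexity(token_logprobs):
    """exp(mean negative log-likelihood per token); lower is better."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(nll)

# Hypothetical natural-log probabilities assigned to the 4 tokens of a sequence.
before = [math.log(0.25)] * 4  # every token gets probability 1/4
after = [math.log(0.5)] * 4    # every token gets probability 1/2
print(perplexity(before))  # 4, up to floating-point error
print(perplexity(after))   # 2, up to floating-point error
```

A model that spreads its probability uniformly over k choices per token has perplexity k, which is why perplexity is often read as an effective branching factor.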

We introduce SIFT, a novel data selection algorithm for test-time training of language models. Unlike traditional nearest-neighbor methods, SIFT uses uncertainty estimates to select maximally informative data, balancing relevance & diversity.

April 21, 2025 at 2:40 PM
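
To illustrate the contrast with nearest-neighbor retrieval, here is a minimal sketch in the spirit of SIFT, assuming a linear kernel on unit-norm embeddings and a regularization constant `lam`. The function names and toy data are hypothetical; see the paper for the actual algorithm. The sketch greedily picks the data point that most reduces the posterior variance at the prompt embedding x*, σ²(x*) = k(x*, x*) - k_S(x*)ᵀ (K_S + λI)⁻¹ k_S(x*).

```python
import numpy as np

def posterior_variance(prompt, data, selected, lam):
    """Uncertainty at `prompt` after conditioning on rows `selected` (linear kernel)."""
    if not selected:
        return float(prompt @ prompt)
    X = data[selected]                          # (s, d) selected embeddings
    k = X @ prompt                              # kernel between selected points and prompt
    K = X @ X.T + lam * np.eye(len(selected))   # regularized kernel matrix
    return float(prompt @ prompt - k @ np.linalg.solve(K, k))

def sift_like_select(prompt, data, budget, lam=0.1):
    """Greedily pick the point that minimizes the prompt's posterior variance."""
    selected = []
    for _ in range(budget):
        best = min(range(len(data)),
                   key=lambda i: posterior_variance(prompt, data, selected + [i], lam))
        selected.append(best)
    return selected

def nearest_neighbors(prompt, data, budget):
    """Baseline: the `budget` points with the highest cosine similarity."""
    scores = data @ prompt
    return [int(i) for i in np.argsort(-scores)[:budget]]

data = np.array([
    [1.0, 0.0],  # document A
    [1.0, 0.0],  # exact duplicate of A
    [0.0, 1.0],  # document B: less similar to the prompt, but adds new information
])
prompt = np.array([2.0, 1.0]) / np.sqrt(5.0)  # unit-norm prompt embedding
print(nearest_neighbors(prompt, data, 2))  # A and its duplicate (indices 0 and 1)
print(sift_like_select(prompt, data, 2))   # [0, 2]
```

Nearest neighbors spends its second pick on an exact duplicate of A, which adds no new information. The variance criterion instead picks B, which is less similar to the prompt but covers the part of it that A does not: the relevance/diversity balance described in the post.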

Unfortunately not as of now. We may also release Jupyter notebooks in the future, but this may take some time.

February 12, 2025 at 10:25 PM

I'm glad you find this resource useful, Maximilian!

February 11, 2025 at 3:26 PM

Noted. Thanks for the suggestion!

February 11, 2025 at 9:01 AM

Very glad to hear that they’ve been useful to you! :)

February 11, 2025 at 8:37 AM

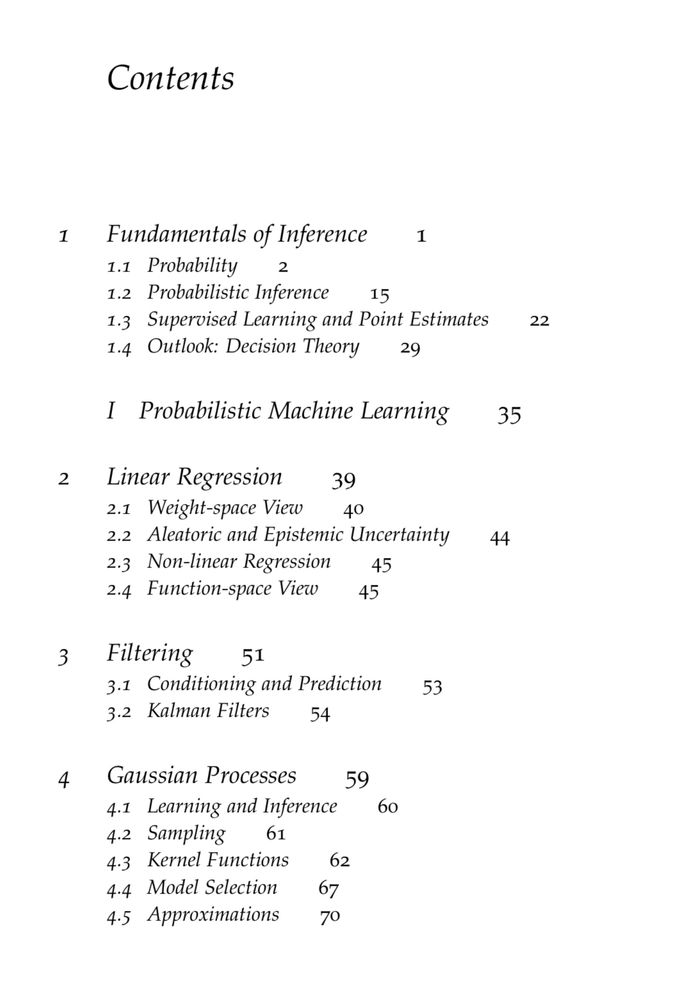

table of contents:

February 11, 2025 at 8:35 AM

Huge thanks to the countless people who helped bring this resource together!

February 11, 2025 at 8:20 AM

Preprint: arxiv.org/pdf/2410.08020

December 13, 2024 at 6:33 PM

December 11, 2024 at 11:14 PM