Paper: arxiv.org/abs/2501.13075

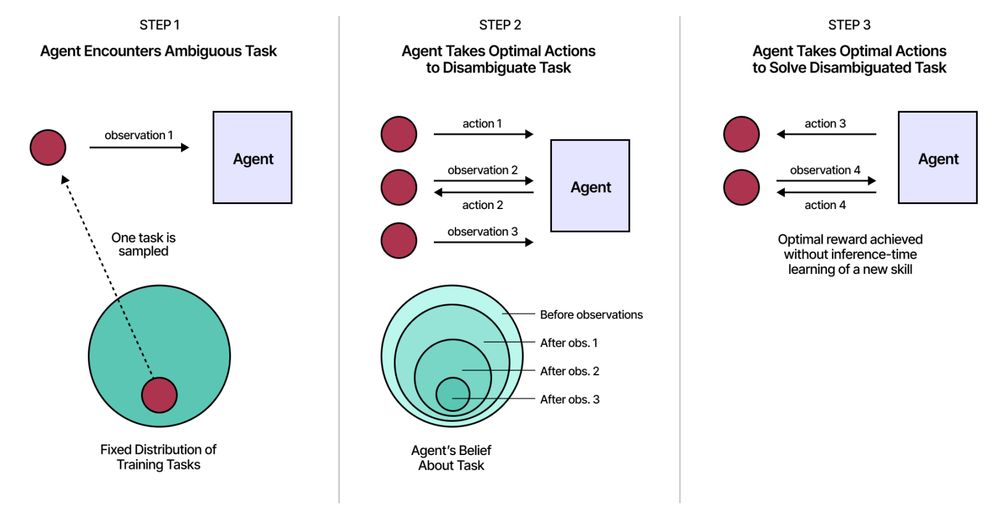

Related to @jeffclune's AI-GAs, @_rockt, @kenneth0stanley, @err_more, @MichaelD1729, @pyoudeyer

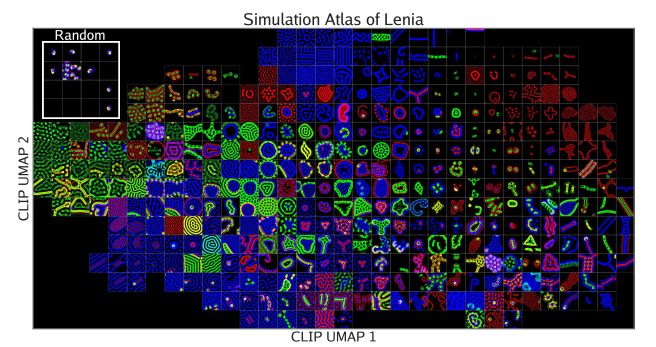

See work done by @risi1979 @drmichaellevin @hardmaru @BertChakovsky @sina_lana + many others

w/ great colleagues Elliot Meyerson, Tarek El-Gaaly, kennethstanley.bsky.social, @tarinz.bsky.social

(more details below)