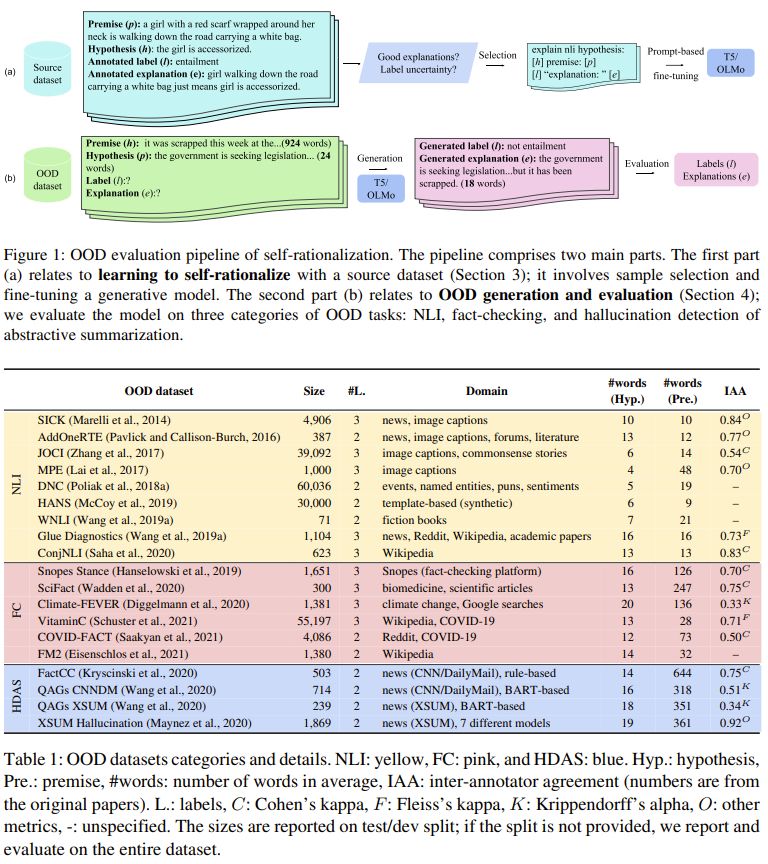

We evaluated model-generated explanations across 19 datasets (NLI, fact-checking, hallucination detection) to see how well models self-rationalize on completely unseen data.

#LLMs #Explainability #ACL2025 #TACL
