Personal site: https://www.jfkominsky.com

For now, I'll just close on a screenshot of my favorite sentence from the paper.

/end

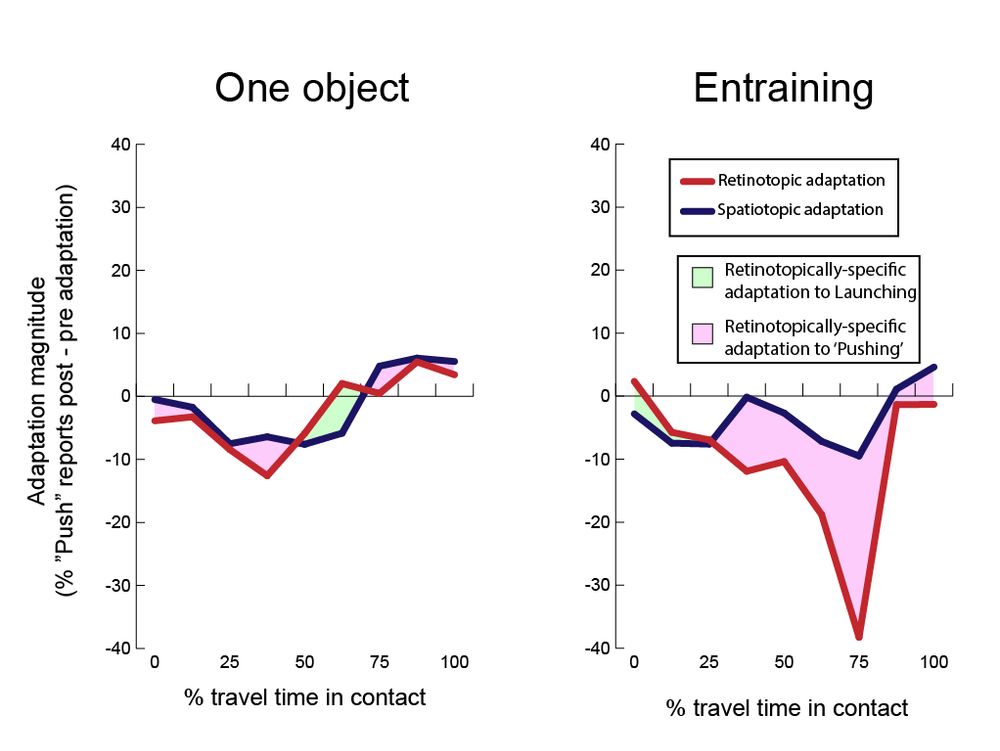

The results were spectacularly clear:

We perfectly replicated the entraining adaptation effect from Exp 2.

Adapting to the one-object event did *nothing*.

There is specialized perceptual processing for *causal* entraining.

17/22

16/22

And that's exactly what we found!

14/22

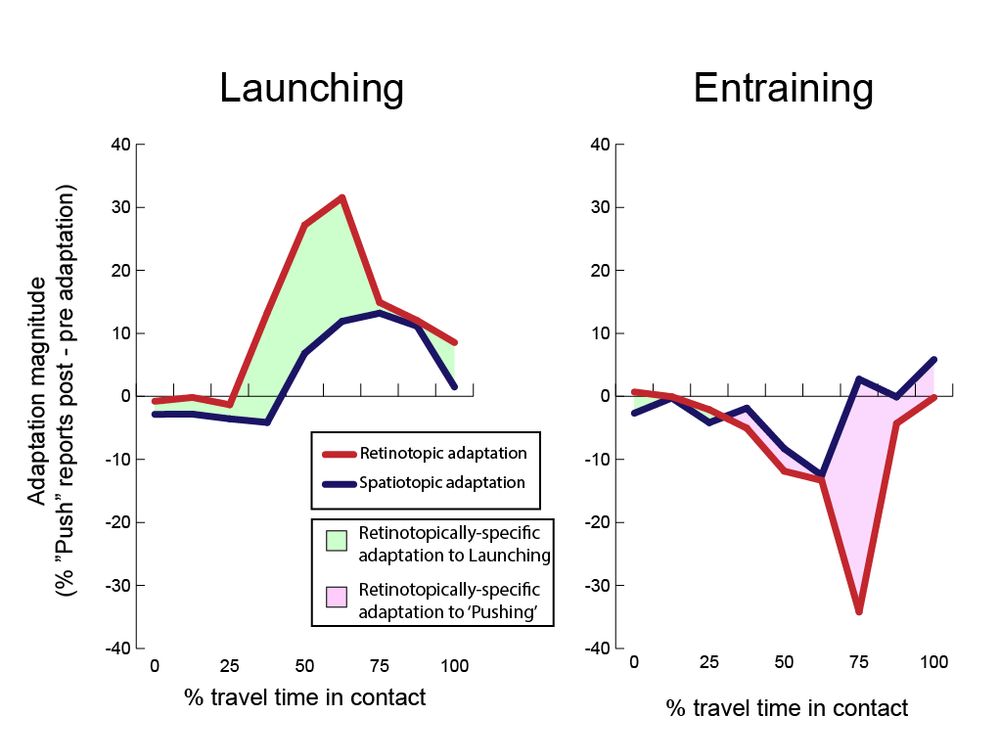

However, launching and entraining are also endpoints of a continuous feature dimension: How long A and B stay in contact.

We can make a new kind of ambiguous test event using this feature dimension, "launch/push".

11/22

9/22

In 2020, Brian Scholl and I found that adapting to entraining does *not* generate the same adaptation effect on these launch/pass displays.

4/22

3/22

One of the best pieces of evidence for this is that you can get visual adaptation to launching events.

2/22

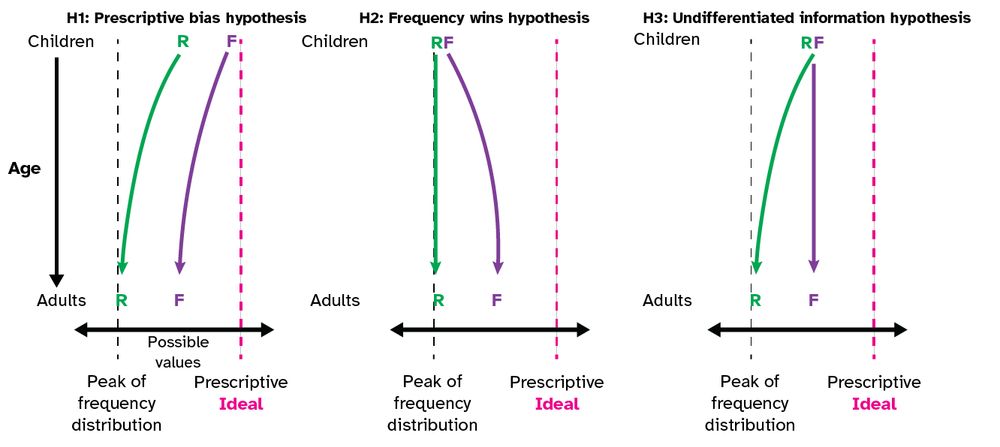

1. Kids mostly represent prescriptive information

2. Kids mostly represent descriptive information

3. Kids have an undifferentiated representation that combines both (3/10)

There are people in the US and among the Dems who do get it; they just don't have real decision-making power. That needs to change *very* soon.

The Vienna state elections are next week. On my walk home today I got waved down by some volunteers from SPÖ who handed me a pen, a flyer, and the Easter egg seen here. I said I'm an immigrant and can't vote; they said no problem, take it anyway (1/2)

- I'm an assistant professor at CEU in Vienna, Austria

- I study how the human mind identifies, represents, and reasons about cause and effect from infancy through adulthood

- I made PyHab (github.com/jfkominsky/P...)

- My cat is cute