Part of UT Computational Linguistics https://sites.utexas.edu/compling/ and UT NLP https://www.nlp.utexas.edu/

(With thanks to Lisa Chuyuan Li who took this photo in Suzhou!)

We built **PLSemanticsBench** to find out.

The results: a wild mix.

✅ The Brilliant:

Top reasoning models can execute complex, fuzzer-generated programs -- even with 5+ levels of nested loops! 🤯

❌ The Brittle: 🧵

Check out jessyli.com/colm2025

QUDsim: Discourse templates in LLM stories arxiv.org/abs/2504.09373

EvalAgent: retrieval-based eval targeting implicit criteria arxiv.org/abs/2504.15219

RoboInstruct: code generation for robotics with simulators arxiv.org/abs/2405.20179

Additionally, we present evidence that both *syntactic* and *discourse* diversity measures show strong homogenization that the lexical and cosine-similarity measures used in this paper do not capture.

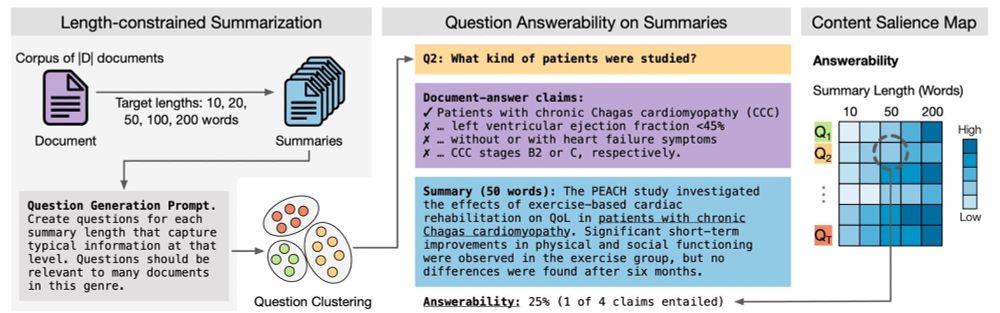

First of all, information salience is a fuzzy concept. So how can we even measure it? (1/6)

UT has a super vibrant comp ling & #nlp community!!

Apply here 👉 apply.interfolio.com/158280

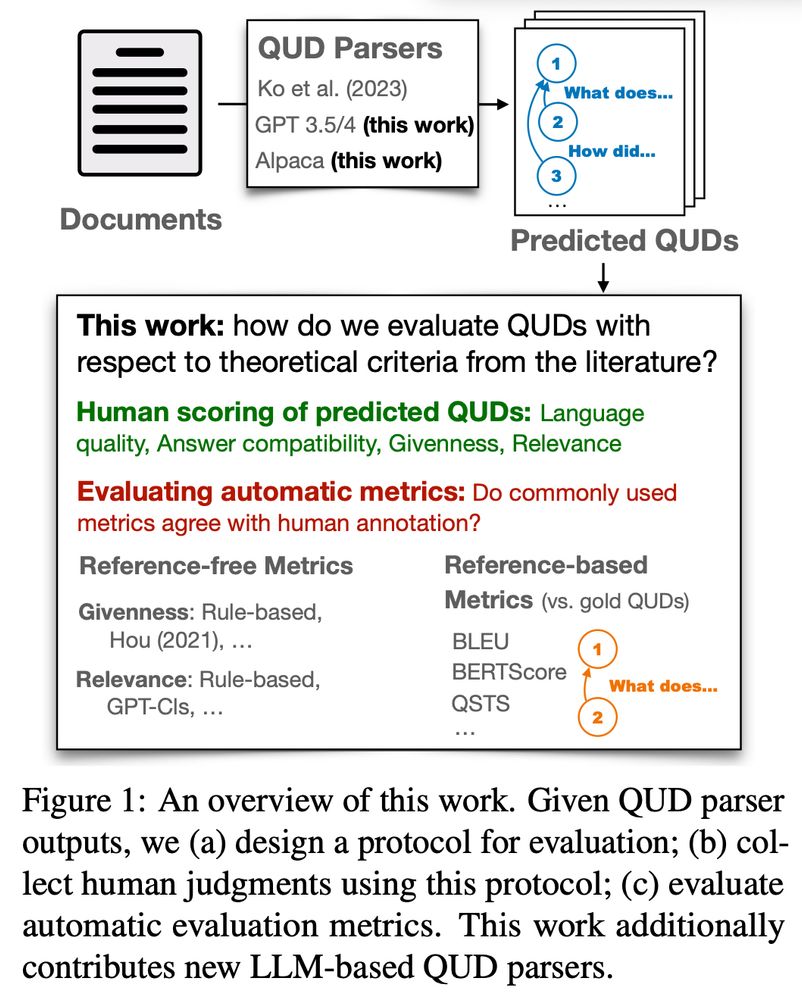

👉[Oral] Discourse+Phonology+Syntax2 10:30-12:00 @ Flagler

also w/ Ritika Mangla @gregdnlp.bsky.social Alex Dimakis

Paper arxiv.org/abs/2310.14389 w Hongli Zhan, Desmond Ong

at 4:30 EST/3:30 CST on Simplifying Medical Texts with Large Language Models! harcconf.org/agenda-monda...

arxiv.org/abs/2305.10387

w/ @yatingwu.bsky.social Will Sheffield @kmahowald.bsky.social

Paper: arxiv.org/abs/2310.14520

w/ @yatingwu.bsky.social, Ritika Mangla, @gregdnlp.bsky.social