jennhu.github.io

Preprint (pre-TACL version): arxiv.org/abs/2510.16227

10/10

This overlap is to be expected if prob is influenced by factors other than gram. 7/10

1. Correlation btwn string probs within minimal pairs

2. Correlation btwn LMs’ and humans’ deltas within minimal pairs

3. Poor separation btwn prob of unpaired grammatical and ungrammatical strings

6/10

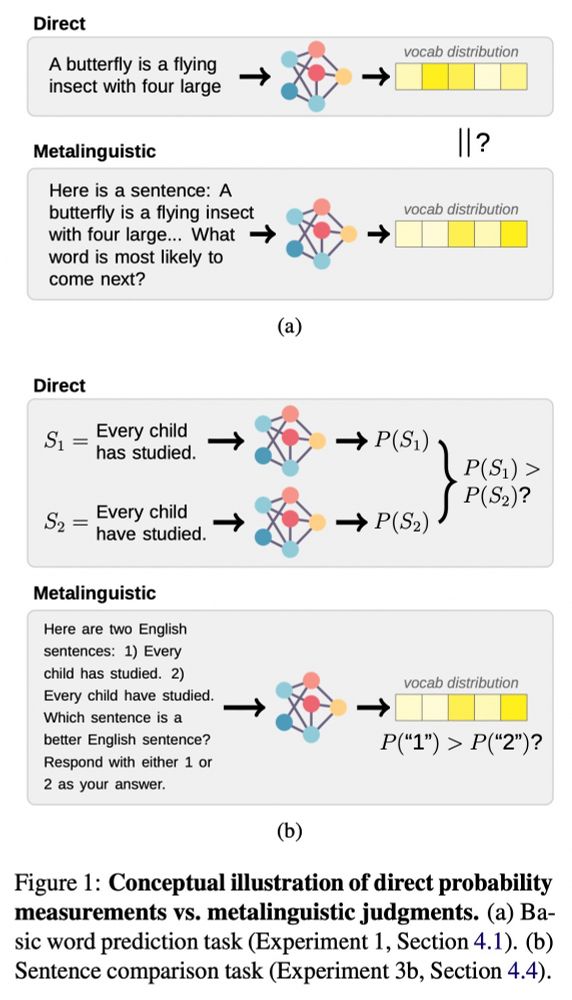

Language models (LMs) are remarkably good at generating novel well-formed sentences, leading to claims that they have mastered grammar.

Yet they often assign higher probability to ungrammatical strings than to grammatical strings.

How can both things be true? 🧵👇

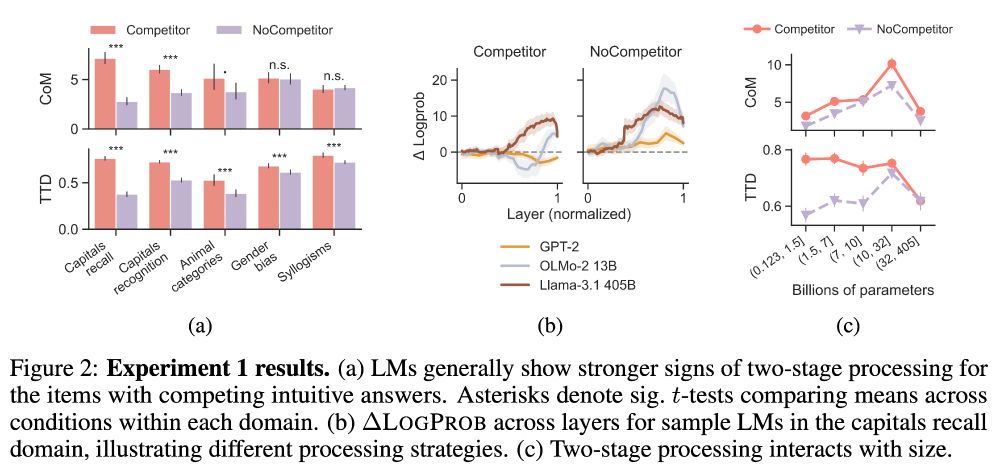

We find that dynamic measures improve prediction of human measures above static (final-layer) measures -- across models, domains, & modalities.

(7/12)

We find that models indeed appear to initially favor a competing incorrect answer in the cases where we expect decision conflict in humans.

(6/12)

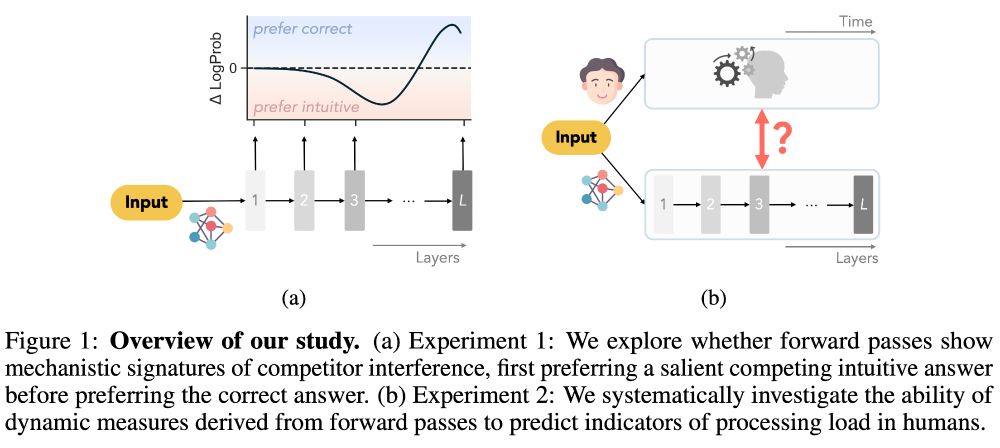

A dominant approach in AI/cogsci uses *outputs* from AI models (eg logprobs) to predict human behavior.

But how does model *processing* (across layers in a forward pass) relate to human real-time processing? 👇 (1/12)

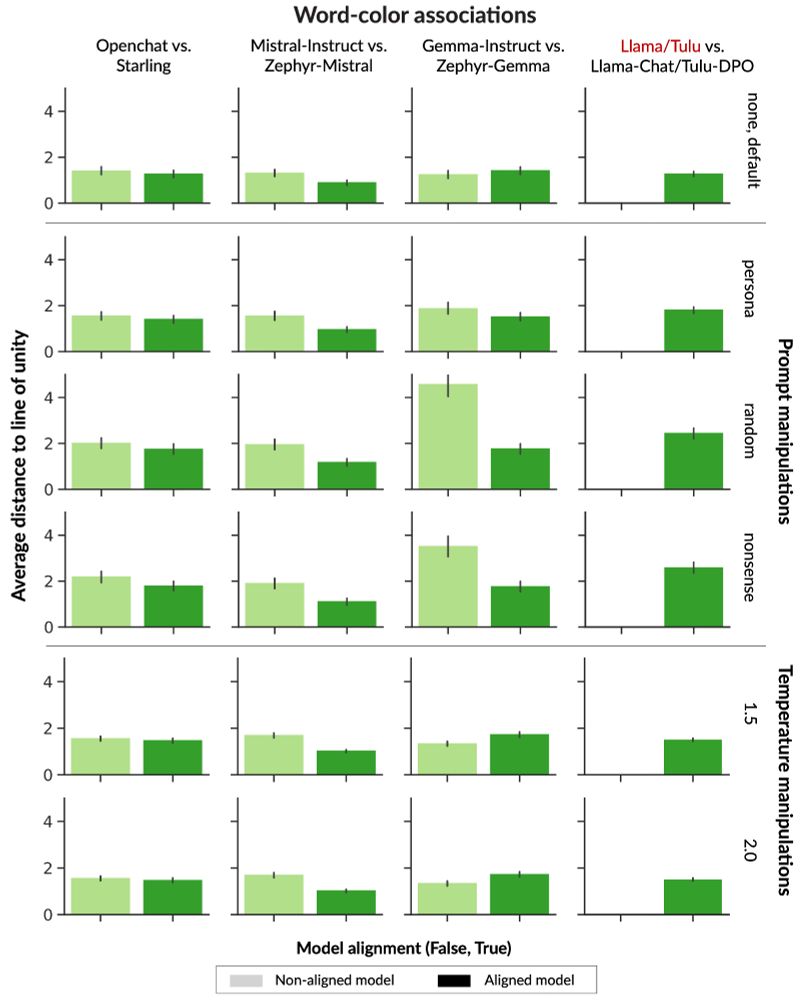

* no model reaches human-like diversity of thought.

* aligned models show LESS conceptual diversity than instruction fine-tuned counterparts

Paper: arxiv.org/abs/2305.13264

Original thread: twitter.com/_jennhu/stat...
