Jeff Clune

@jeffclune.com

Professor, Computer Science, University of British Columbia. CIFAR AI Chair, Vector Institute. Senior Advisor, DeepMind. ML, AI, deep RL, deep learning, AI-Generating Algorithms (AI-GAs), open-endedness.

Why do we tolerate loud motorcycles? I can't walk around with a crazy loud speaker, yet we allow people to have (and companies to sell) insanely loud machines that torture everyone else. They can be made quiet or silent...we should start heavily taxing and fining noise polluting machines.

May 16, 2025 at 5:12 PM

I greatly enjoyed “The Spectrum of AI Risks” panel at the Singapore Conference on AI. Thanks @teganmaharaj.bsky.social for great moderating, Max Tegmark for the invitation, and the organizers and other panelists for a great event!

PS. Do I really have sad resting panel face? 😐

April 29, 2025 at 1:17 PM

Tim @rockt.ai did a great job in his #ICLR2025 keynote on open-endedness of explaining the ideas we are all so passionate about. A huge thanks for the kind words and for featuring our work, including Jenny Zhang's OMNI. cc @kennethstanley.bsky.social @joelbot3000.bsky.social

April 27, 2025 at 1:33 AM

Very excited for this keynote by @_rockt! Awesome to see open-endedness go from a niche (😉) area to a keynote at #ICLR ! 🌱🌿🌳🌲🍀🌍✨ 📈 🧬🧪 cc @joelbot3000.bsky.social @kennethstanley.bsky.social

April 25, 2025 at 2:56 AM

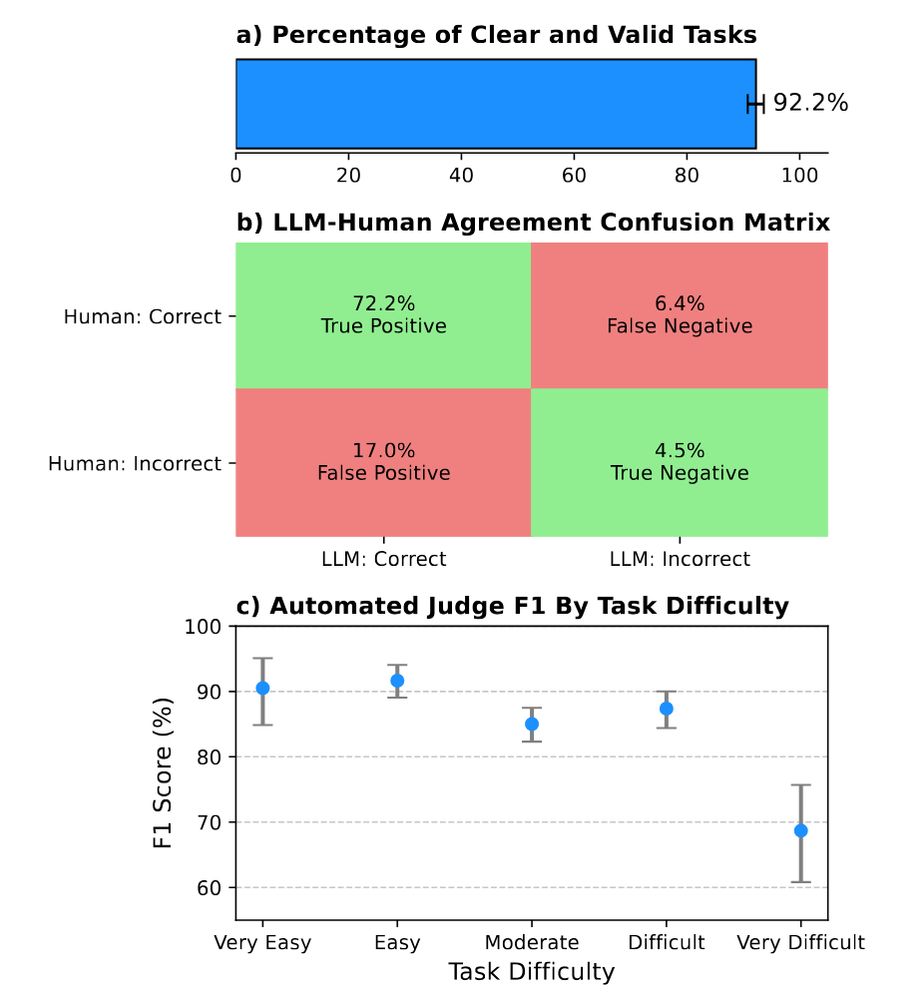

Human surveys confirm a majority of the tasks are clear & valid. Moreover, the automated scoring aligns closely with human judgment for all but the hardest tasks. [7/9]

February 12, 2025 at 6:59 AM

Different scientist models uncover wildly creative, out-of-the-box probes. For example, Claude Sonnet 3.5 found GPT-4o can successfully design alien communication protocols!! 🌌🤖[6/9]

February 12, 2025 at 6:59 AM

We also explored different scientist/subject pairings. Results with Llama3-8B as the subject instead of GPT-4o reveal unique failure modes & emerging skills, offering interesting insights into each model’s capabilities. [5/9]

February 12, 2025 at 6:59 AM

ACD mimics community exploration: endlessly generating tasks (in code with automated scoring) probing for new capabilities or weaknesses—covering topics from string games to complex puzzles. In a GPT-4o self-eval, ACD uncovered thousands of capabilities (visualized here)! [3/9]

February 12, 2025 at 6:59 AM

ACD automatically creates a concise "Capability Report" of discovered capabilities and failure modes, enabling quick inspection and easier dissemination of results or flagging issues pre-deployment. [2/9]

February 12, 2025 at 6:59 AM

Introducing Automated Capability Discovery!

ACD automatically identifies surprising new capabilities and failure modes in foundation models, via "self-exploration" (models exploring their own abilities).

Led by @cong-ml.bsky.social & @shengranhu.bsky.social

🔬🤖🧠🔎 [1/9]

February 12, 2025 at 6:59 AM

Research Idea: Predict abstract properties of text in addition to next-word prediction as helpful auxiliary losses. Has this been tried? (1/n)

January 22, 2025 at 8:59 PM

A copy of Tim's (@rockt.ai) book has arrived. As I say on the back cover, I read it cover to cover and recommend it to those looking to ramp up on modern AI. Congrats and thanks Tim!

January 13, 2025 at 7:26 PM

It's an honor that The AI Scientist is #1 on this list!

www.linkedin.com/feed/update/...

Congrats @chris-lu.bsky.social @cong-ml.bsky.social @RobertTLange @hardmaru.bsky.social @jfoerst.bsky.social

January 8, 2025 at 6:50 PM

I strongly believe the development of superintelligence is inevitable. I do not believe humanity has the ability to not invent it, given its economic, scientific, and military value. Many people say things like “we should not build AGI” without realizing that outcome is virtually impossible. 1/

January 7, 2025 at 7:55 PM

I guess AI and human scientists stand on the shoulders of the same giants.

December 17, 2024 at 11:16 PM

NO WAY! This is nearly EXACTLY one of the paper ideas The AI Scientist came up with! It was my favorite idea it generated, & made us very impressed with its creativity & good taste in an ML paper proposal. Cool to see humans agree! The humans def executed better though.

x.com/BrantonDeMos...

December 17, 2024 at 9:36 PM

Dream finish to #NeurIPS2024: a dinner with Ted Chiang (one of my favorite writers), the excellent @alisongopnik.bsky.social, many amazing open-ended researchers (including @cedcolas) & other excellent people. Thanks to the tremendous IMOL organizers! Until next time! 🦎🧬🦖🦣

December 16, 2024 at 7:10 PM

Lots of interest in ADAS! Thanks everyone, and congrats Shengran Hu and @cong-ml.bsky.social! 🚀🚀🚀

December 16, 2024 at 6:19 PM

Jenny did a great job presenting OMNI-EPIC during the IMOL Oral. Congrats Jenny Zhang! arxiv.org/abs/2405.15568

December 16, 2024 at 6:17 PM

A huge congratulations to Shengran Hu and @cong-ml.bsky.social on ADAS winning an Outstanding Paper Award at the #NeurIPS2024 OWA workshop!! And nice jumps everyone!! 🦘 🦘 🦘

December 16, 2024 at 2:19 AM

Is Developing AGI a socially responsible goal? I enjoyed the thoughtful, passionate SoLaR panel on this critically important topic w/ @yoshuabengio.bsky.social, @mmitchell.bsky.social, & @jfoerst.bsky.social. It's clear everyone is both worried & cares deeply about making this go as well as possible

December 15, 2024 at 3:51 PM

Great to catch up with @Yoshua_Bengio, Aaron Courville, and @hugo_larochelle at #NeurIPS2024, with lots of talk about AI Safety. Thanks @CIFAR_News for supporting our research!

December 14, 2024 at 5:07 PM

Our work Automated Design of Agentic Systems (w/ Shengran Hu & @cong-ml.bsky.social) will have ✨two orals✨ @ #NeurIPS2024 workshops (LanGame Sat 10:20, OWA Sun 4:50). Please come visit us 😃

We would also love to chat about open-endedness, LLM agents, etc. Come by if you want to meet!

December 10, 2024 at 9:49 PM

A message to reviewers (including myself)

November 28, 2024 at 8:49 PM