Here are a few more diagrams from the paper to tempt you!

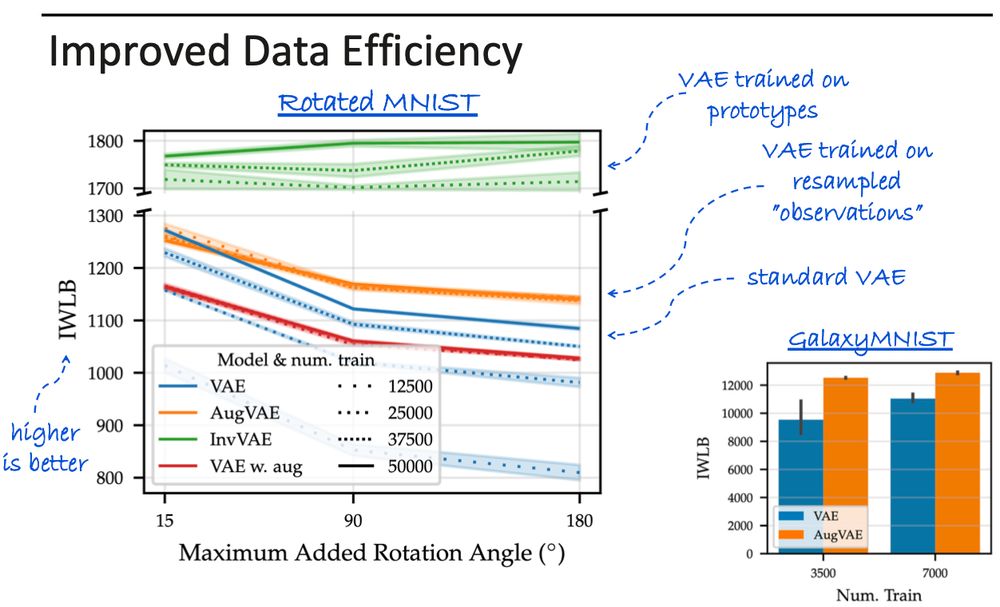

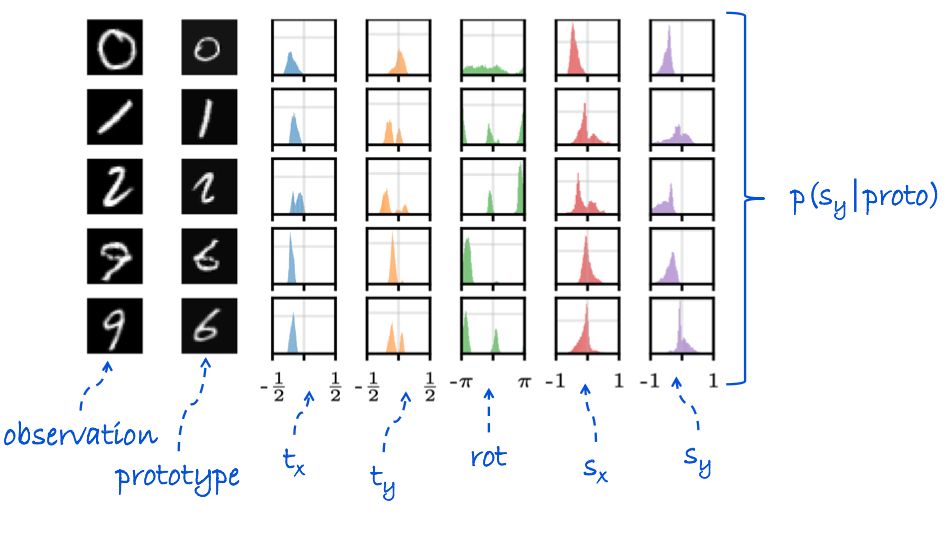

E.g., 9's and 6's can be rotated into each other, and 1's can be rotated 180 deg w/o change.

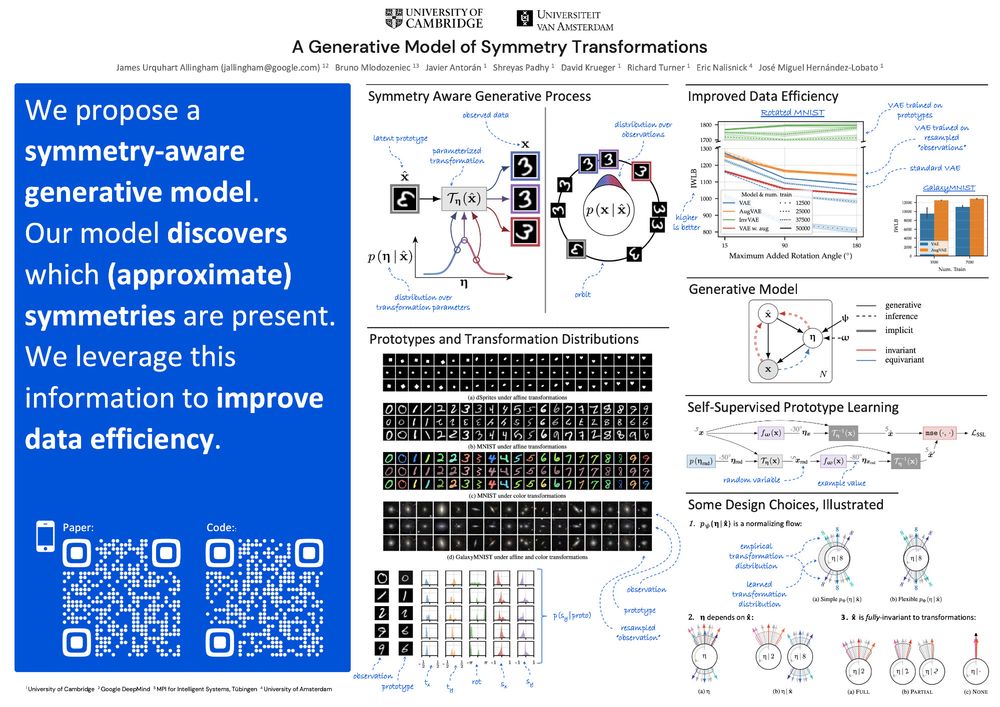

paper: arxiv.org/abs/2403.01946

code: github.com/cambridge-ml...

🧵⬇️