To build trust in AI, the government must ensure that AI systems are beneficial and safe, and center its messaging on how AI improves social outcomes

5/6

4/6

3/6

👫 While 25% of UK adults use generative AI weekly, nearly half have never used it

2/6

While a good starting point, current frameworks for industrial robots and autonomous vehicles are insufficient to address the full range of risks EAI systems pose

3/4

EAI inherits traditional AI risks (privacy, bias, security, etc.) and poses new ones, e.g. related to physical harm, mass surveillance and displacement of manual labor

We identify 4 key EAI risk categories

2/4

arxiv.org/pdf/2509.00117

Co-authors Jared Perlo @fbarez.bsky.social Alex Robey & @floridi.bsky.social

1/4

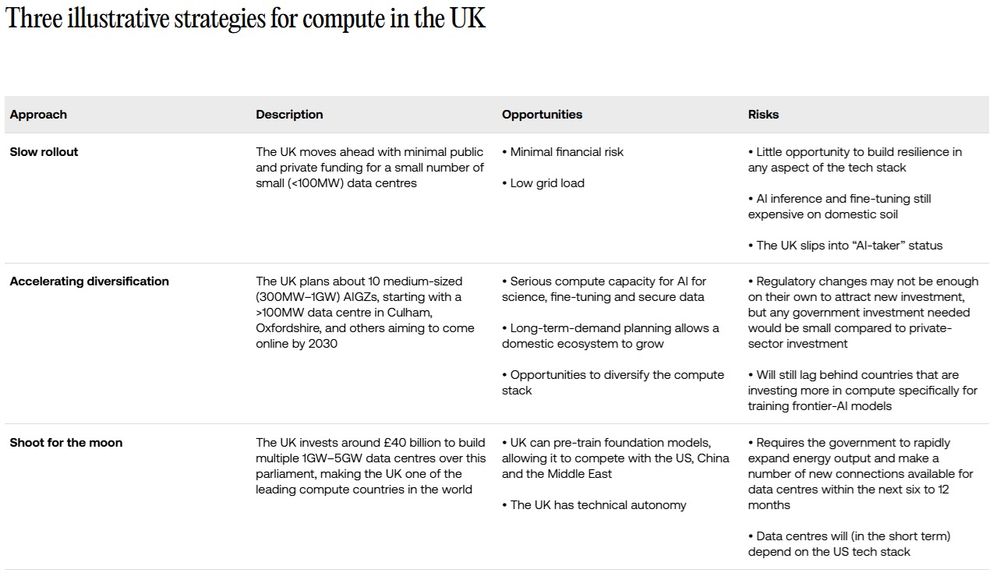

The UK doesn’t need to build everything, but it must build enough infrastructure to deploy AI where it matters, to ensure resilience, and to anchor a domestic ecosystem that delivers for the public and the economy

Key takes:

✅ Let’s shift from apathy to action

✅ Tech is part of green solutions

✅ European leadership is needed

Read more about Tony Blair Institute’s work on climate & energy led by Lindy Fursman in 🧵

This requires bold visions, infrastructure investments & good governance to enable AI uptake

Panel w/ HE Josephine Teo, HE Paula Ingabire, Matt Clifford and Teresa Carlson

2/4

Last week in Paris, the Tony Blair Institute convened 200 global leaders to explore how AI can be harnessed for economic growth & social progress

Thanks @yoshuabengio.bsky.social, @alondra.bsky.social & Fu Ying for insights + lively debate on AI safety

🧵 1/4

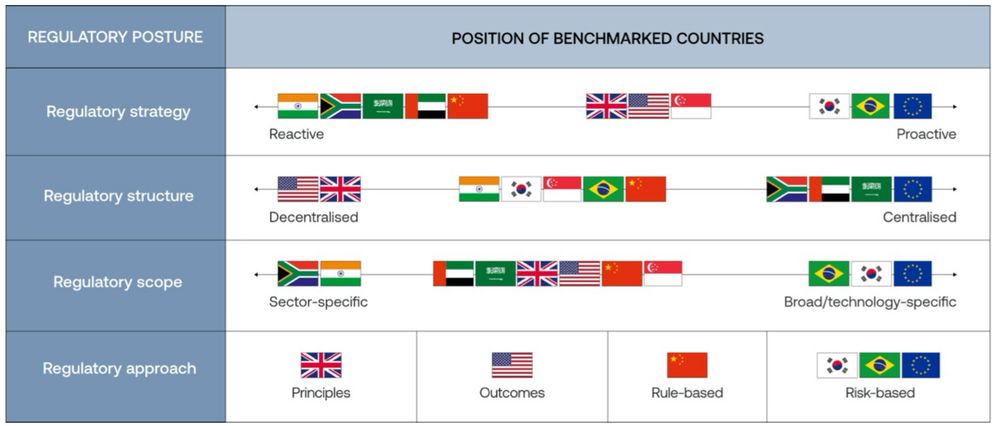

While useful, this is a simplification. Most AI regulations combine technology & sector-specific elements; centralized & decentralized controls; and procedural & substantive requirements

3/4

Robust AI governance also requires

- responsible dev. practices

- monitoring of downstream applications

- feedback loops btw different controls

link.springer.com/article/10.1...

Co-authors Jonas Schuett

@hannahrosekirk.bsky.social

@floridi.bsky.social
