I also wrote a short blog on why we're building this dataset: huggingface.co/blog/davanst...

github.com/context-labs...

huggingface.co/blog/cyrilza...

1M-token context (that's the entire "War and Peace"!) + 4.3x faster processing speed 🔥

Check out the demo: https://huggingface.co/spaces/Qwen/Qwen2.5-Turbo-1M-Demo

#QWEN

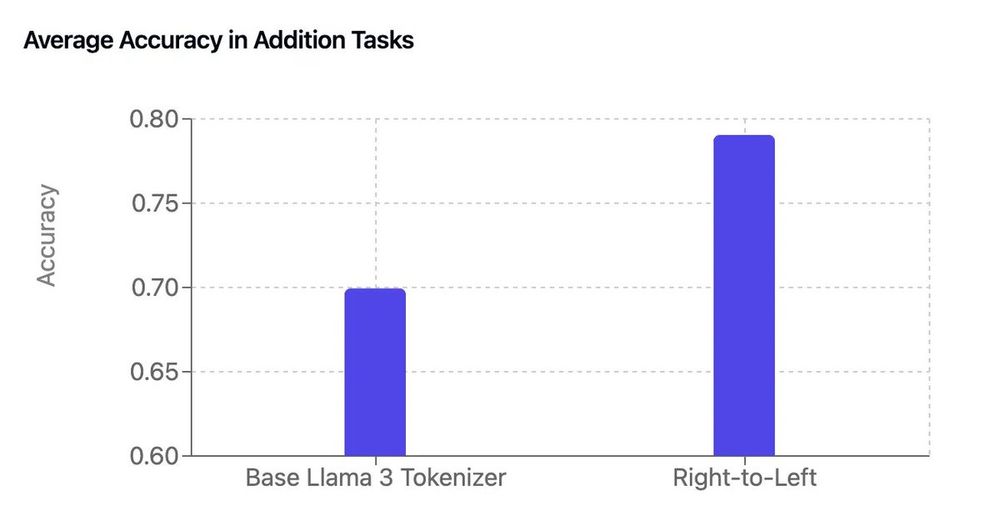

By adding a few lines of code to the base Llama 3 tokenizer, he got a free boost in arithmetic performance 😮

[thread]
