New blog post reading the hype around scaling LLMs to reach AGI through the lens of anthropology, philosophy, and cognition.

int8.tech/posts/rethin...

Registration is open (it's free) with priority given to authors of accepted papers: cvprinparis.github.io/CVPR2025InPa...

Big 🧵👇 with details!

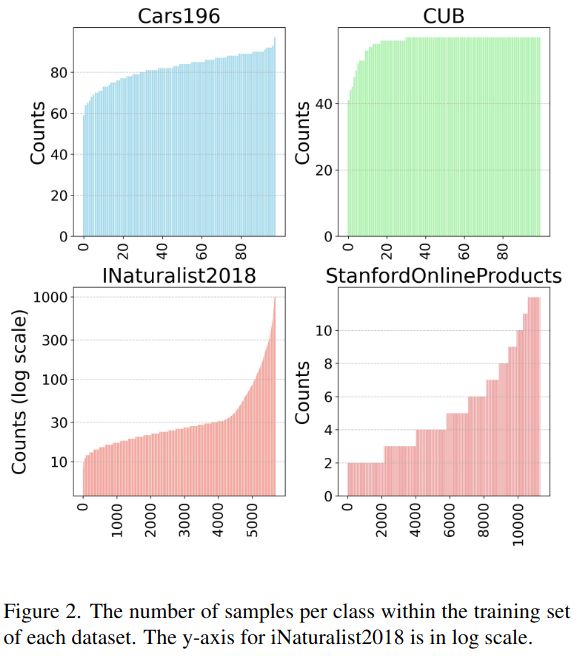

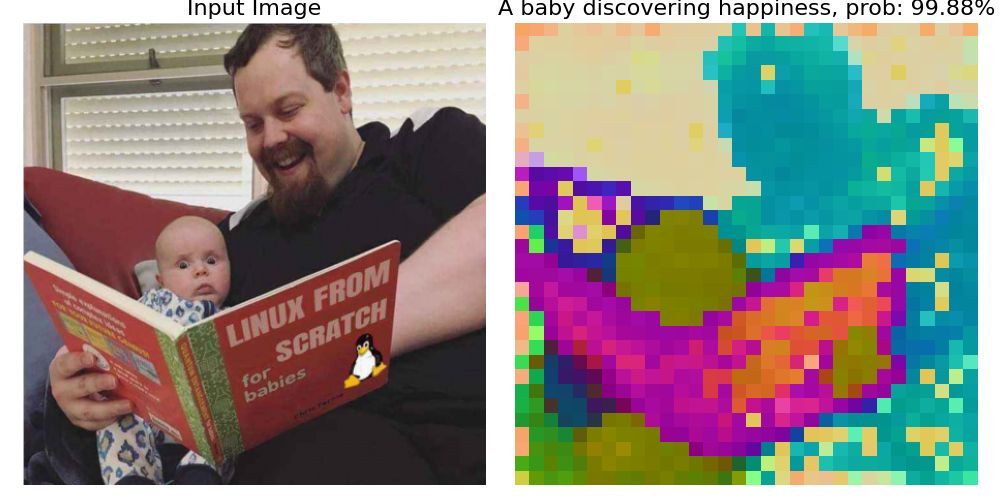

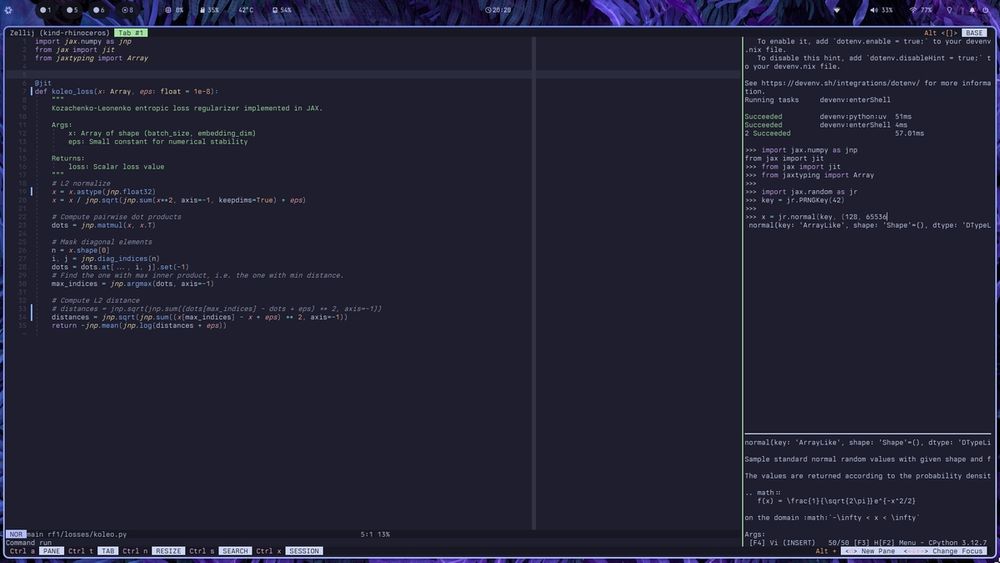

Curious about image retrieval and contrastive learning? We present:

📄 "All You Need to Know About Training Image Retrieval Models"

🔍 The most comprehensive retrieval benchmark: thousands of experiments across 4 datasets, dozens of losses, batch sizes, learning rates, data labeling, and more!
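The post does not list which losses were benchmarked. For reference only, here is a minimal sketch of one widely used contrastive objective, an InfoNCE-style loss. It assumes L2-normalized embeddings, in-batch negatives, and an illustrative temperature of 0.1. The function name `info_nce_loss` is hypothetical, and the code is not taken from the paper.

```python
import math


def info_nce_loss(queries, keys, temperature=0.1):
    """Mean InfoNCE loss (hypothetical helper, illustration only).

    queries[i] and keys[i] form the positive pair; every other key in the
    batch is treated as a negative for query i.
    """
    def normalize(v):
        # L2-normalize so the dot product becomes cosine similarity
        norm = math.sqrt(sum(x * x for x in v))
        return [x / norm for x in v]

    q = [normalize(v) for v in queries]
    k = [normalize(v) for v in keys]
    total = 0.0
    for i, qi in enumerate(q):
        # Temperature-scaled cosine similarity of query i to every key in the batch
        logits = [sum(a * b for a, b in zip(qi, kj)) / temperature for kj in k]
        # Numerically stable log-sum-exp over all keys
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
        # Cross-entropy with the matching key (index i) as the target class
        total += log_denom - logits[i]
    return total / len(q)


aligned = info_nce_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = info_nce_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned, swapped)
```

Matched query/key pairs give a loss near zero, and mismatched pairs give a large loss. Every other key in the batch serves as a negative, so the number of negatives grows with batch size. That is one plausible reason batch size matters as an axis in a study like this.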

Learn more at: github.com/clementpoire...

TIPS paper: arxiv.org/abs/2410.16512

github.com/clementpoire...

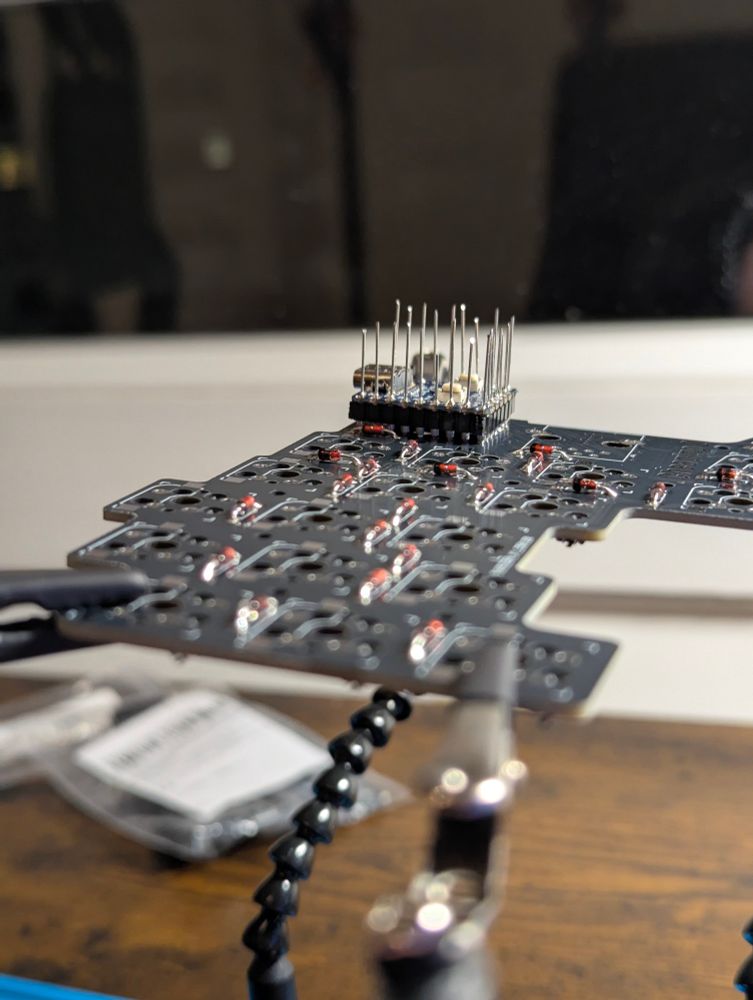

It's time to have some fun intermediate projects before the next big thing.

Let's take inspiration from outside deep learning to come back stronger.

int8.tech/posts/repl-p...

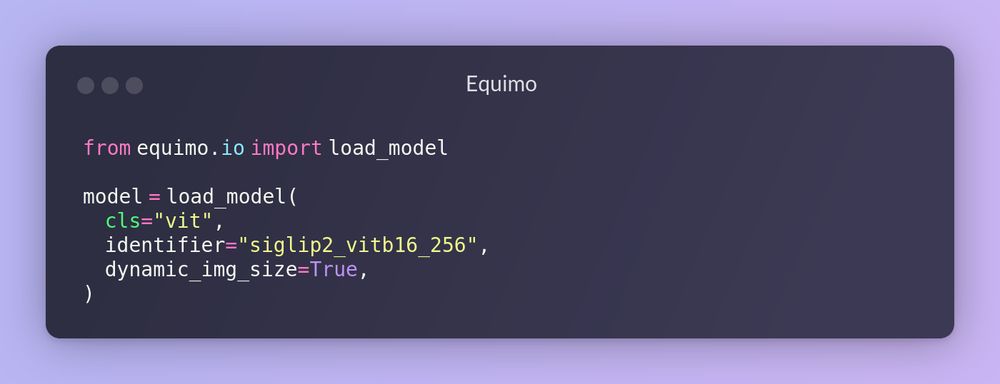

Equimo is a JAX/Equinox library implementing modern vision architectures (2023-24). It features FasterViT, MLLA, VSSD, and other state-space and transformer models, written in pure JAX with a modular design.

#MLSky

Since the title is misleading, let me also say: US academics do not need $100k for this. They used 2,000 GPU hours in this paper; NSF will give you that. #MLSky

doi.org/10.1016/j.ij...
