IDI

@institutional.org

A research center at Harvard working to strengthen society’s connection to knowledge by advancing our access to and understanding of the data that shapes AI.

Can a small visual language model read documents as effectively as models 27 times its size?

Next Friday, IDI will host Michele Dolfi and Peter Staar from IBM Research Zurich to discuss their work on SmolDocling, an “ultra-compact” model for diverse OCR tasks.

September 9, 2025 at 4:06 PM

As part of our refinement work, we supplemented the original OCR-extracted text with a post-processed version that uses line detection to reassemble the text according to each line's type.

June 12, 2025 at 9:12 PM

We included extensive volume-level metadata with both original and generated components, such as results from text-level language detection.

June 12, 2025 at 9:12 PM

We analyzed the dataset’s coverage across time, topic, and language and found:

- 40% English text, plus a long tail of 254 languages

- 20 clear topical tranches

- Largely published in the 19th and 20th centuries

Technical report here: arxiv.org/abs/2506.08300

June 12, 2025 at 9:12 PM

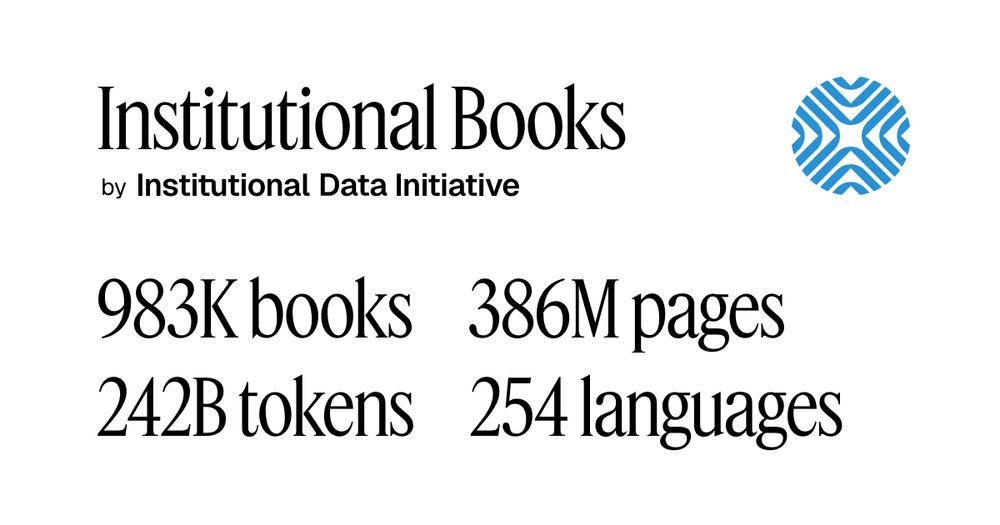

Today we released Institutional Books 1.0, a 242B token dataset from Harvard Library's collections, refined for accuracy and usability. 🧵

June 12, 2025 at 9:12 PM