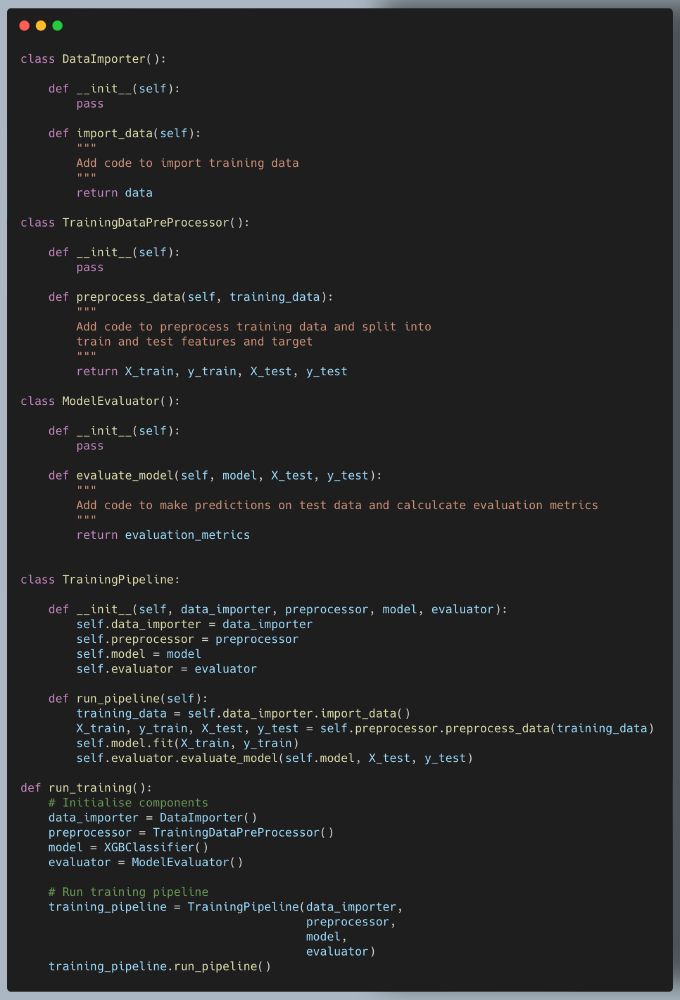

The orchestrator is responsible for managing the pipeline execution.

Centralizing execution this way makes it easy to switch components (e.g., swap XGBoost for RandomForest).
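A minimal sketch of how an orchestrator might pick components from a config so that swapping one is a one-line change. Everything here is hypothetical: `MajorityTrainer` and `ThresholdTrainer` are toy stand-ins for real models such as XGBoost or RandomForest, and the `TRAINERS` registry and `Orchestrator` class are illustrative names, not an established API.

```python
from typing import Callable, Dict, List, Tuple

Dataset = List[Tuple[float, int]]   # (feature, label) pairs
Model = Callable[[float], int]      # maps a feature to a predicted label


class MajorityTrainer:
    """Toy stand-in for a real model: always predicts the most common label."""

    def run(self, data: Dataset) -> Model:
        labels = [y for _, y in data]
        majority = max(set(labels), key=labels.count)
        return lambda x: majority


class ThresholdTrainer:
    """Toy stand-in for a real model: predicts 1 above the mean feature value."""

    def run(self, data: Dataset) -> Model:
        cut = sum(x for x, _ in data) / len(data)
        return lambda x: int(x > cut)


# Hypothetical registry: the orchestrator looks trainers up by name.
TRAINERS: Dict[str, type] = {
    "majority": MajorityTrainer,
    "threshold": ThresholdTrainer,
}


class Orchestrator:
    """Chooses components from the config, runs them, and reports accuracy."""

    def __init__(self, config: Dict[str, str]) -> None:
        self.config = config

    def run(self, data: Dataset) -> float:
        trainer = TRAINERS[self.config["trainer"]]()
        model = trainer.run(data)
        # Scored on the training data only to keep the sketch short.
        return sum(model(x) == y for x, y in data) / len(data)


data: Dataset = [(1.0, 0), (2.0, 0), (3.0, 0), (9.0, 1)]
# Swapping the model means changing only the config value.
scores = {name: Orchestrator({"trainer": name}).run(data) for name in TRAINERS}
print(scores)
```

With this layout the rest of the code never names a concrete model, which is what keeps the swap cheap.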

A TrainingPipeline ties together components into a single workflow.

Pipelines ensure a clear flow of data, from raw input to final evaluation.
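One way the raw-input-to-evaluation flow could look in code. This is a sketch under assumptions: the component classes, their `run` methods, and the in-memory toy dataset are all made up for illustration, and the "model" is just a threshold halfway between the two class means.

```python
from dataclasses import dataclass
from typing import List, Tuple

Dataset = List[Tuple[float, int]]  # (feature, label) pairs


class DataImporter:
    def run(self) -> Dataset:
        # Hypothetical in-memory data standing in for a real source.
        return [(1.0, 0), (2.0, 0), (8.0, 1), (9.0, 1)]


class Preprocessor:
    def run(self, data: Dataset) -> Dataset:
        # Scale features into [0, 1] by dividing by the maximum.
        hi = max(x for x, _ in data)
        return [(x / hi, y) for x, y in data]


class ThresholdTrainer:
    def run(self, data: Dataset) -> float:
        # The "model" is the midpoint between the two class means.
        zeros = [x for x, y in data if y == 0]
        ones = [x for x, y in data if y == 1]
        return (sum(zeros) / len(zeros) + sum(ones) / len(ones)) / 2


class Evaluator:
    def run(self, threshold: float, data: Dataset) -> float:
        hits = sum(int(x > threshold) == y for x, y in data)
        return hits / len(data)


@dataclass
class TrainingPipeline:
    """Ties the components into one workflow with a fixed data flow."""

    importer: DataImporter
    preprocessor: Preprocessor
    trainer: ThresholdTrainer
    evaluator: Evaluator

    def run(self) -> float:
        data = self.preprocessor.run(self.importer.run())
        model = self.trainer.run(data)
        # Evaluated on the training data only to keep the sketch short.
        return self.evaluator.run(model, data)


pipeline = TrainingPipeline(
    DataImporter(), Preprocessor(), ThresholdTrainer(), Evaluator()
)
accuracy = pipeline.run()
print(accuracy)
```

The pipeline only fixes the order of the steps; each step stays a separate object that can be replaced or tested alone.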

Each key function (data import, preprocessing, training, evaluation) is wrapped in its own class.

This makes each function reusable, testable, and modular.

Example: A DataImporter class to load data!
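A rough sketch of what such a `DataImporter` component might look like. The CSV source, the `run` method name, and the sample data are assumptions made for illustration; a real importer would read from wherever the project's data lives.

```python
import csv
import io
from typing import Dict, List, TextIO


class DataImporter:
    """Component that loads rows from a CSV source (hypothetical interface)."""

    def __init__(self, source: TextIO) -> None:
        self.source = source

    def run(self) -> List[Dict[str, str]]:
        # Each row becomes a dict keyed by the header names; values stay strings.
        return list(csv.DictReader(self.source))


# An in-memory file keeps the example self-contained and easy to test.
raw = io.StringIO("age,label\n31,1\n45,0\n")
rows = DataImporter(raw).run()
print(rows)
```

Because the class takes any file-like object, a test can pass in a small string buffer instead of touching the disk.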

Here’s a simple CPO (components, pipelines, orchestrators) pattern example to structure your code.

Let's break this down with a training pipeline example👇

#MachineLearning #DataScience #MLOps

Why? They skip Exploratory Data Analysis (EDA).

EDA is an important step for performance and explainability.

Here’s how to start👇

#DataAnalytics #100DaysOfML #MachineLearning
