🌲 Academic Staff at Stanford University (AIMI Center)

🦴 Radiology AI is my stuff

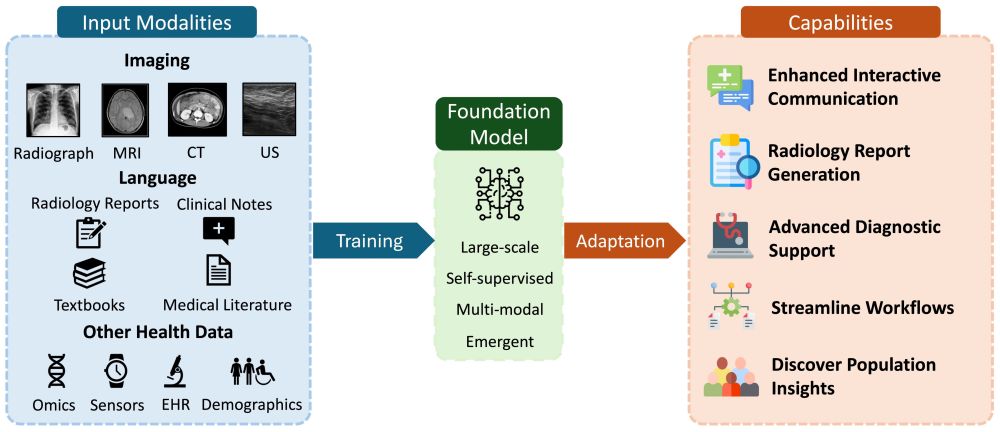

@radiology_rsna, colleagues at Stanford dive into how foundation models (FMs) are set to revolutionize radiology!

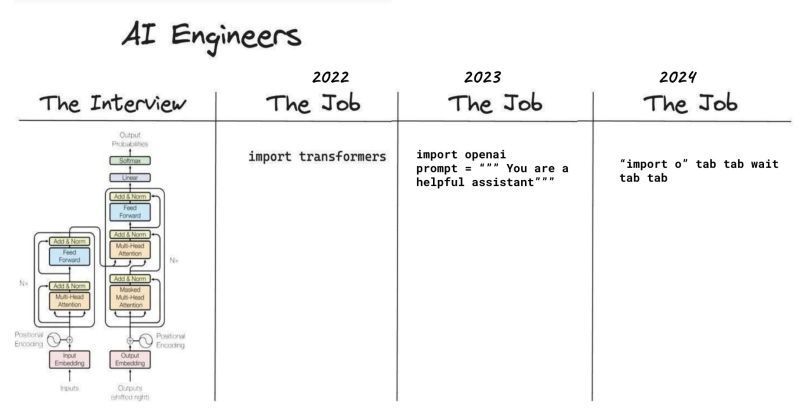

What a trick...

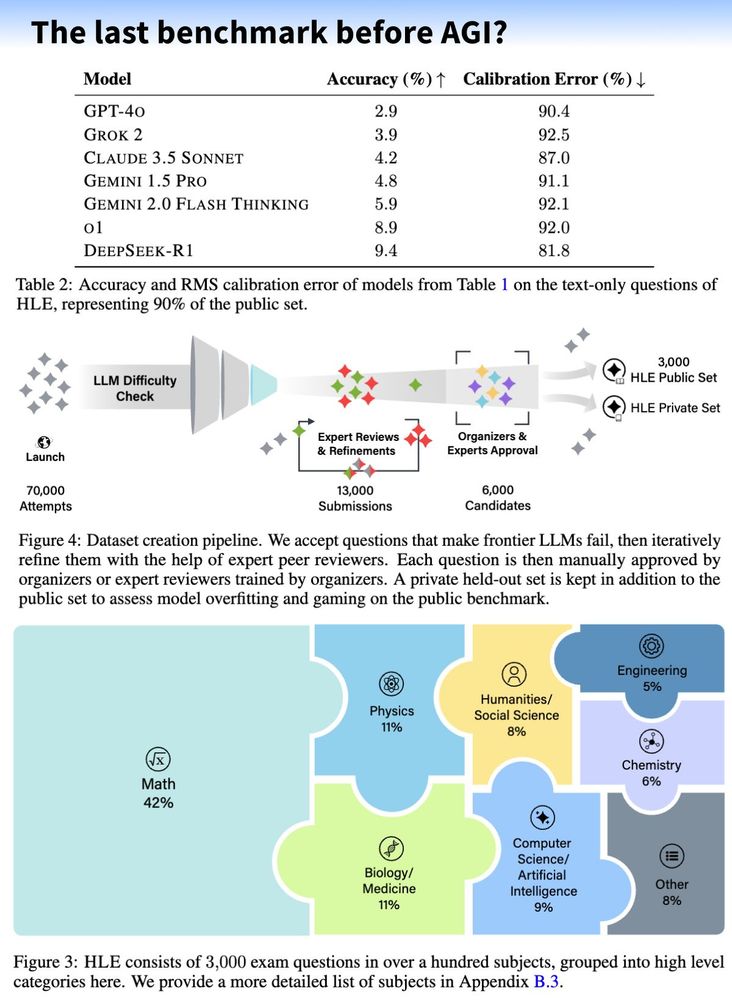

🤯 3,000 expert-level questions across 100+ subjects, created by nearly 1,000 subject matter experts globally.

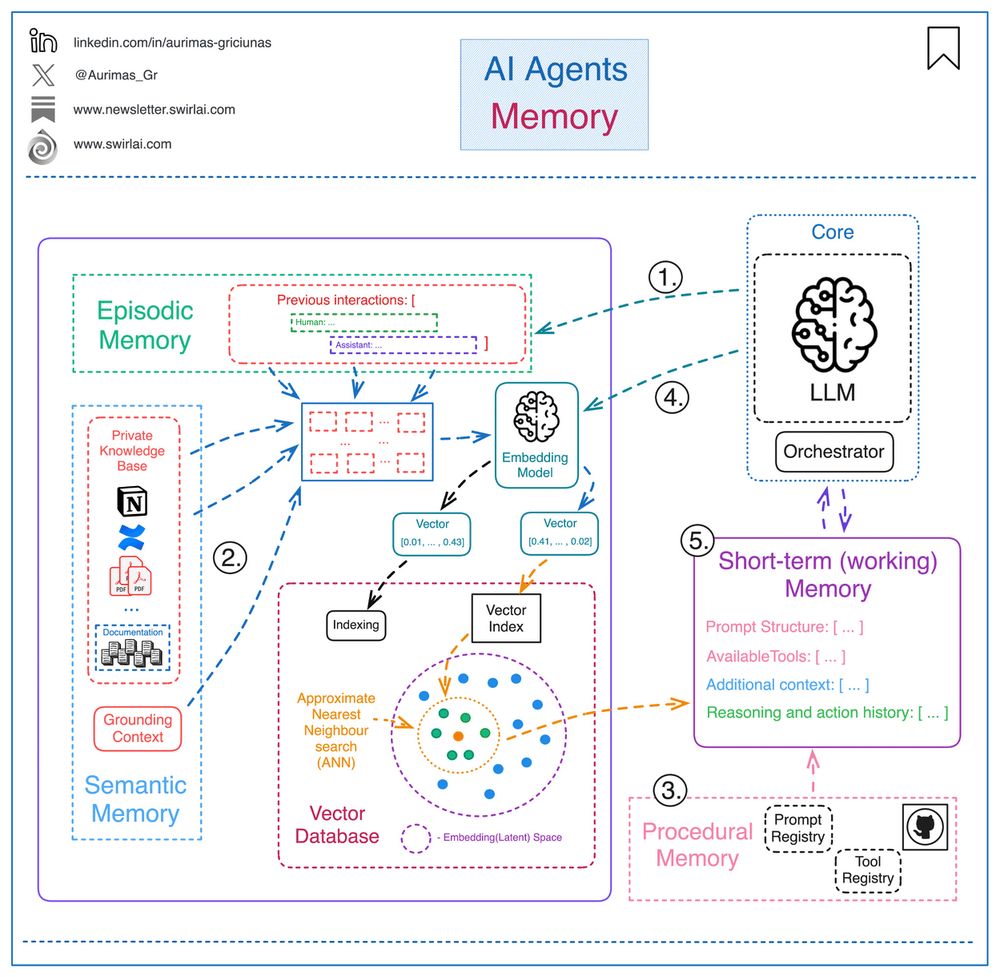

An agent's memory helps it plan and react by leveraging past interactions or external data via prompt context. Here’s a breakdown:

𝟭. Episodic Memory: Logs past actions/interactions (e.g., stored in a vector database for semantic search).
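
A minimal sketch of the episodic-memory idea above, not any particular framework's API. The `embed` function is a toy bag-of-words stand-in for a real embedding model, and the in-memory list stands in for a vector database; `EpisodicMemory`, `recall`, and `build_prompt` are hypothetical names chosen for illustration.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words 'embedding' (assumption: stands in for a real model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class EpisodicMemory:
    """Logs past interactions and retrieves the most similar ones."""

    def __init__(self):
        self.episodes = []  # list of (text, vector) pairs

    def log(self, text):
        self.episodes.append((text, embed(text)))

    def recall(self, query, k=2):
        q = embed(query)
        ranked = sorted(self.episodes, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(memory, task):
    """Place recalled episodes into the prompt context ahead of the task."""
    recalled = "\n".join(f"- {m}" for m in memory.recall(task))
    return f"Relevant past interactions:\n{recalled}\n\nCurrent task: {task}"


memory = EpisodicMemory()
memory.log("user asked to book a flight to Paris")
memory.log("agent fixed a failing unit test")
memory.log("user prefers window seats on a flight")
print(build_prompt(memory, "book a flight"))
```

In a real agent the sorted scan would presumably be replaced by a vector-database query, but the flow is the same: log, retrieve by similarity, then inject into the prompt.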

Kling AI just announced Elements

🌎 First, world building:

Craft your characters, environments, props. Plan your motion and VFX.

🎛️ Then, remixing:

Bring it all together into a cohesive story.

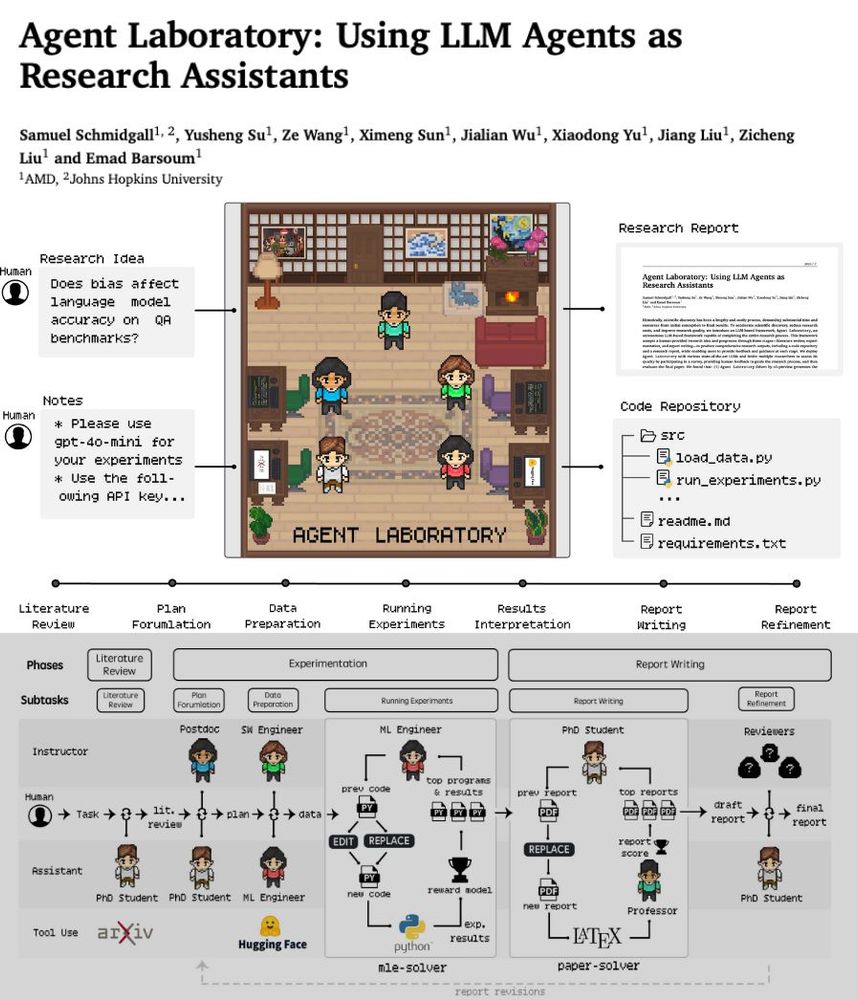

Amazing. Agent Roles:

⛳ PhD Agent: Conducts literature reviews, interprets results, writes reports.

⛳ Postdoc Agent: Plans research, designs experiments.

⛳ ML Engineer Agent: Prepares data, writes and optimizes code.

⛳ Professor Agent: Oversees and refines reports.

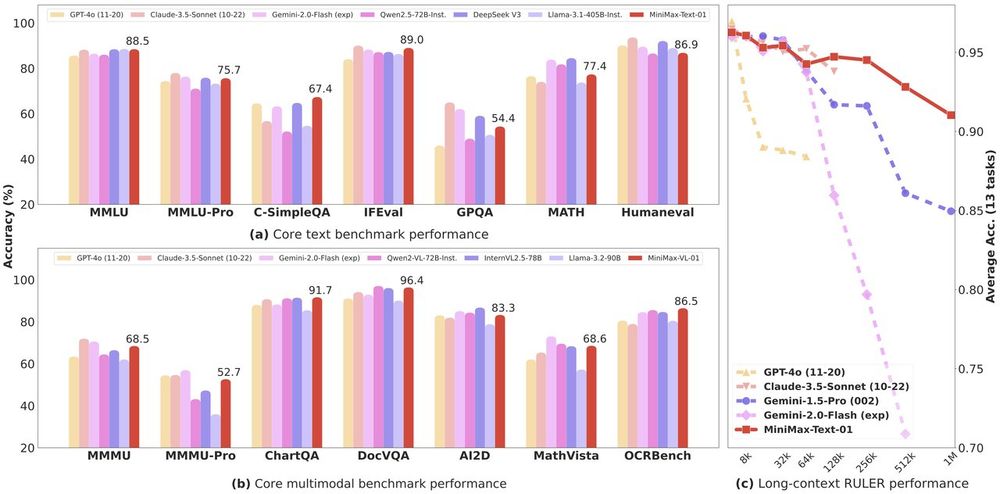

>> Hybrid linear-softmax attention working very well at large scale and long-context

filecdn.minimax.chat/_Arxiv_MiniM...

arxiv.org/pdf/2501.07301

Contrib: Temporal Gaussian Hierarchy representation for long volumetric video.

✨ Implicit PRM (no labels)

🔄 Online updates, zero overhead

🎯 Token-level rewards + RLOO
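
A rough sketch of the two ingredients named above, under stated assumptions. The post does not spell out the formulas: the code assumes the implicit process reward is a scaled log-likelihood ratio between a reward model and a reference model, and that RLOO uses the mean reward of the other samples for the same prompt as a baseline. The function names and the `beta` value are illustrative, not taken from the paper's code.

```python
def implicit_token_rewards(prm_logprobs, ref_logprobs, beta=0.05):
    """Token-level rewards as beta * (log p_prm - log p_ref) per token.

    Assumption: no step labels are needed because the reward is read off
    the log-likelihood ratio of two language models.
    """
    return [beta * (p - q) for p, q in zip(prm_logprobs, ref_logprobs)]


def rloo_advantages(rewards):
    """Leave-one-out advantages for K sampled responses to one prompt.

    Each sample's baseline is the mean reward of the other K - 1 samples.
    """
    k = len(rewards)
    if k < 2:
        raise ValueError("RLOO needs at least two samples per prompt")
    total = sum(rewards)
    return [r - (total - r) / (k - 1) for r in rewards]


# Three sampled responses to the same prompt, one of them correct.
print(rloo_advantages([1.0, 0.0, 0.0]))
```

The leave-one-out baseline needs no learned value function, which is one plausible reading of the "zero overhead" claim.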

Scaling up with 3x more data!

• Stable pretraining

• LR annealing, data curricula, and soups

• Tulu post-training

• Compute infrastructure
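
To make two of the items above concrete, here is a small sketch of learning-rate annealing and a model "soup". The post only names the techniques, so the linear-to-zero schedule and the uniform checkpoint average are assumptions, and parameters are plain floats rather than tensors to keep the example self-contained.

```python
def linear_anneal(peak_lr, step, total_steps):
    """Linearly decay the learning rate from peak_lr to zero (assumed shape)."""
    return peak_lr * max(0.0, 1.0 - step / total_steps)


def soup(checkpoints):
    """Uniformly average several checkpoints, parameter by parameter."""
    n = len(checkpoints)
    return {name: sum(c[name] for c in checkpoints) / n for name in checkpoints[0]}


# Two hypothetical checkpoints, e.g. from annealing runs with different data order.
ckpt_a = {"w": 1.0, "b": 0.0}
ckpt_b = {"w": 3.0, "b": 2.0}
print(soup([ckpt_a, ckpt_b]))
print(linear_anneal(1e-3, step=50, total_steps=100))
```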

After DiffSensei yesterday, @ylecun is once again being style-transferred!

(⚡Llama 3.3) Chat with the paper: huggingface.co/spaces/hugg...

🤗 Model: huggingface.co/zongzhuofan...

🤗 Demo: huggingface.co/spaces/zong...

🤗 Paper: huggingface.co/papers/2412...

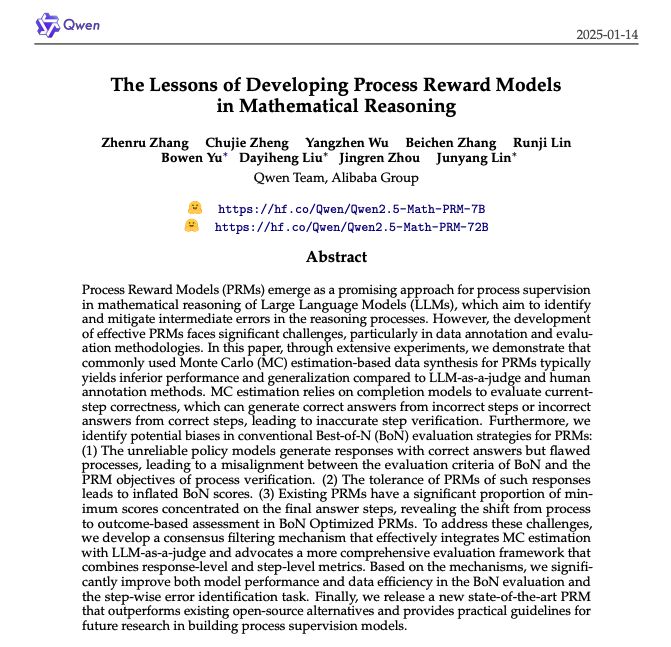

Also, this is the best paper heading I’ve seen in quite some time. The 'en tête' looks fantastic.

(⚡Llama 3.3) Chat with the paper: huggingface.co/spaces/hugg...

🤗 Model: huggingface.co/euclid-mult...

🤗 Dataset: huggingface.co/datasets/eu...

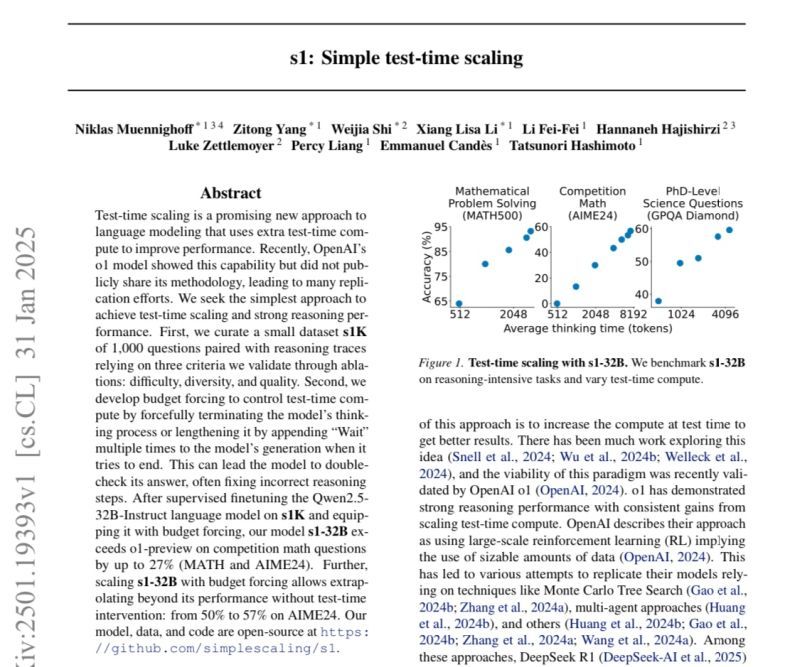

What are the main contributions, and how does it compare to SOTA? Find out 👇

🤗 Chat with the paper (⚡Llama 3.3) huggingface.co/spaces/hugg...

(⚡Llama 3.3) Chat with paper: huggingface.co/spaces/hugg...

🤗 Model: huggingface.co/internlm/in...

🤗 Paper: huggingface.co/papers/2412...

🐍 GitHub: github.com/InternLM/In...

🤗 Dataset: huggingface.co/datasets/CS...

💡 An excellent way to get an overview at a glance: ask paper-central to summarize the scores in a markdown table (⚡by Llama 3.3!)

Try it out for any paper: huggingface.co/spaces/hugg...
