Raphael Hernandes

@hernandesraph.bsky.social

AI Ethics researcher, journalist working on tech, AI, and data.

Ex-The Guardian, Folha de S.Paulo.

Ethics of AI MPhil at the University of Cambridge.

(Ele/dele he/him)

With @elenamorresi.bsky.social @porcelinad.bsky.social @pablogutierrez.bsky.social @lydia-rachel.bsky.social @robynvinter.bsky.social @c-aguilargarcia.bsky.social (and more that I couldn't find on Bluesky)

September 28, 2025 at 10:34 AM

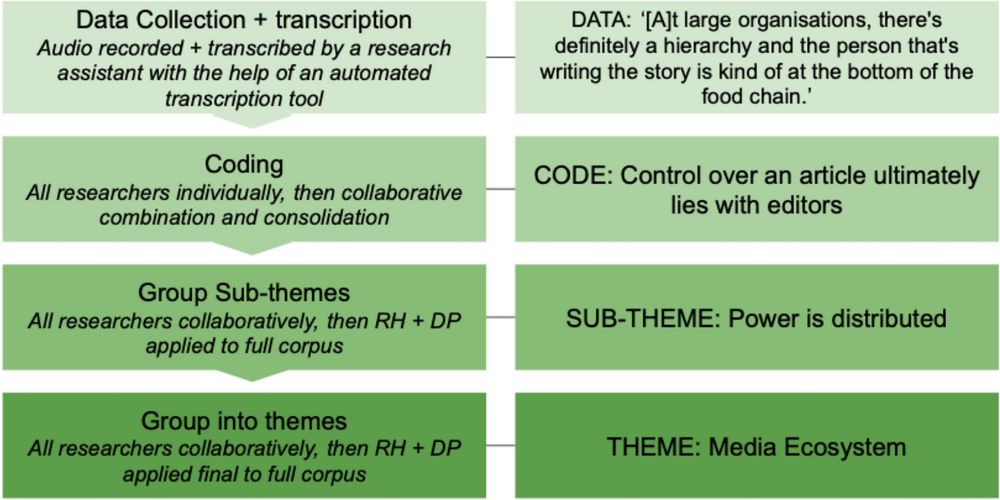

We have also published an explainer on our methods, including how we used artificial intelligence (a Large Language Model) to classify content.

www.theguardian.com/world/2025/s...

Reading the post-riot posts: how we traced far-right radicalisation across 51,000 Facebook messages

Tracing profiles of those charged with online offences in summer 2024 helped us map a thriving social ecosystem trading far-right sentiment and political disillusionment

www.theguardian.com

September 28, 2025 at 10:34 AM

This is a symptom of far-right ideas becoming mainstream in the UK.

September 28, 2025 at 10:34 AM

We analyzed thousands of posts to show how extreme anti-immigration, and often conspiratorial and even violent, thinking is gaining traction among demographics not usually associated with this kind of content online.

www.theguardian.com/world/ng-int...

Inside the everyday Facebook networks where far-right ideas grow

The Guardian spent a year studying an online community trading in anti-immigration sentiment and misinformation. Experts say such spaces can play a role in radicalisation

www.theguardian.com

September 28, 2025 at 10:34 AM

And here is the link to the article: link.springer.com/article/10.1...

AI, journalism, and critical AI literacy: exploring journalists’ perspectives on AI and responsible reporting - AI & SOCIETY

This study explores the perspectives of media professionals on the concerns, needs, and responsibilities related to fostering AI literacy among journalists. We report on findings from two workshops wi...

link.springer.com

June 9, 2025 at 1:39 PM

(On a not-completely-unrelated note: feel free to DM me any tips for dealing with a receding hairline!)

June 4, 2025 at 10:43 PM

I plan to continue exploring AI and journalism topics, as Large Language Models increasingly mediate news consumption and synthetic media threaten the information landscape. I'm weirdly glad that I’ll be stressing about this for the next couple of years.

June 4, 2025 at 10:43 PM

Either way, it skews the reading. Since we’re still accountable for the final product, in some instances it might be preferable to be transparent about use cases at a global level, for example through a public-facing company policy, rather than flagging usage as it happens.

April 17, 2025 at 5:22 PM

There’s also the issue of perception. A disclaimer like “this headline was written by AI” might be perceived in wildly different ways: some might dismiss it as garbage, others might assume it’s better than anything a biased human could write.

April 17, 2025 at 5:22 PM

As AI becomes more pervasive and embedded in different parts of the workflow, we risk cluttering content and distracting the reader. In some cases, it might feel like disclosing that a text was written in Microsoft Word.

April 17, 2025 at 5:22 PM

→ When we talk about AI applied to journalism, transparency is often cited as a must. I agree with that, of course. However, we also need to be strategic about how we apply it. Total transparency along with every single use sounds good in theory but can be impractical, and even counterproductive.

April 17, 2025 at 5:22 PM

And that is particularly hard to navigate in a world of under-resourced media where fly-by reporters end up doing a lot of this work. Still, the balance is off.

April 17, 2025 at 5:22 PM

I'm under no illusion: a lot of this is awfully boring for most readers. It’s easier to get excited about the new tool than the technical and philosophical discussions around it.

April 17, 2025 at 5:22 PM

We need more reporting that explores tech’s impacts and limitations, and provides broader context.

April 17, 2025 at 5:22 PM

→ There’s some amazing journalism about AI out there, but overall, we’re doing a bad job. Research shows that coverage is heavily shaped by industry actors in multiple countries. The result? A lot of product launches and how-to pieces that often lack critical perspective.

April 17, 2025 at 5:22 PM

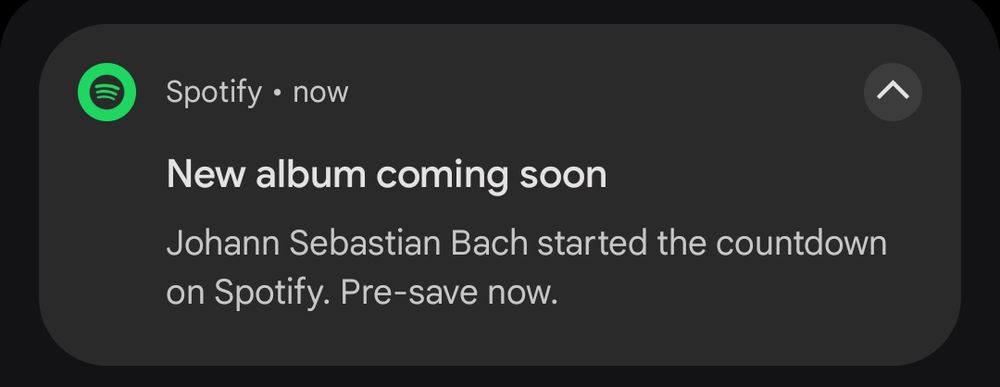

Come on! Drop it already

March 23, 2025 at 8:18 PM
