🧵4/5

From Qwen3-8B-Base

✅ 100K synthetic problems: outperforms Qwen3-8B

✅ Combined with human-written problems: matches DeepSeek-R1-671B

🧵(1/5)

@vamvas.bsky.social and @ricosennrich.bsky.social

Paper link:

arxiv.org/pdf/2503.10494

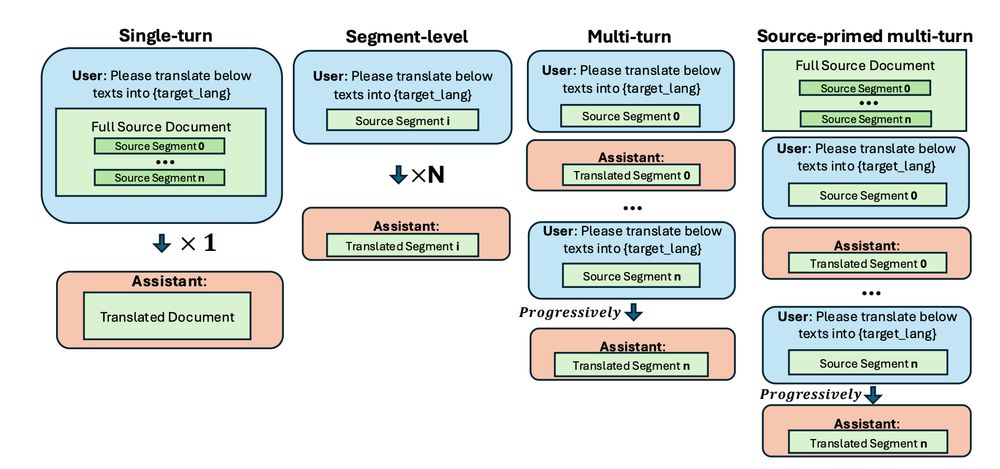

Long-context LLMs have paved the way for document translation, but is simply feeding in the whole document at once the optimal approach?

Here's the thread 🧵 [1/n]
