Hannah Mechtenberg

@hannahmech.bsky.social

phd student in language and cognition @UConn. studying brains, speech, how to talk about science, with some freelance graphic design on the side. she/her 🏳️‍🌈

https://hmechtenberg.wixsite.com/hrmech

Is there a difference? hahaha

September 2, 2025 at 5:32 PM

Utterly - I never know what's going to happen next

September 2, 2025 at 3:57 PM

So exciting!!!!

September 2, 2025 at 2:47 PM

Thanks @ariellekeller.bsky.social, it was a true team effort!

June 13, 2025 at 8:34 PM

HUGE props to @jamiereillycog.bsky.social (check out his super cool SemanticDistance package in R too: osf.io/preprints/ps...), @jpeelle.bsky.social, and @emilymyers.bsky.social for getting this project out into the world. It's neat stuff.

June 13, 2025 at 8:33 PM

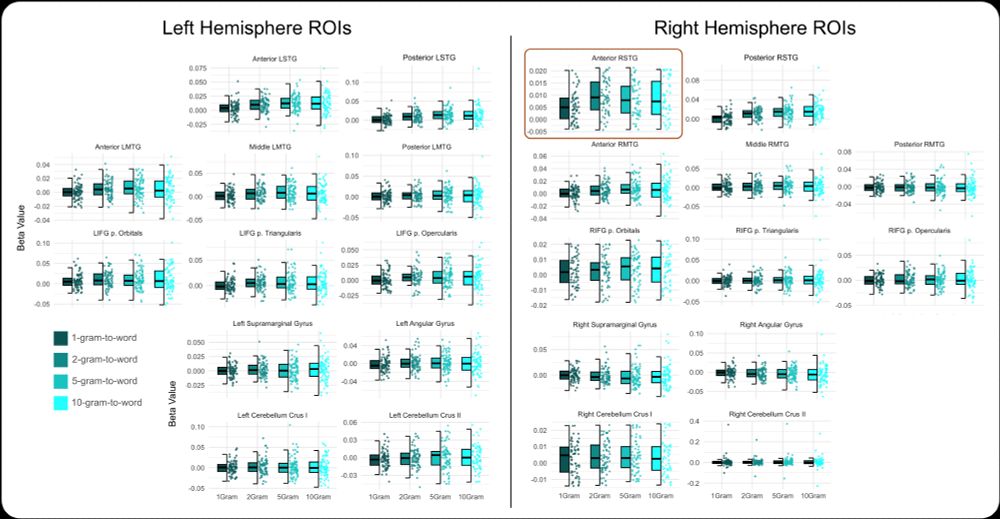

The results are interesting (if I am so biased as to say), maybe especially so because the anterior right temporal lobe was particularly sensitive to changes in context window size (so, from 2-gram to 10-gram) - perhaps suggesting a specialized role in semantic integration during listening.

June 13, 2025 at 8:33 PM

I can't believe we didn't think about that! Maybe we can get starburst to sponsor??

May 6, 2025 at 3:52 PM

Bonus fact: Our title “Cents and Shenshibility” allowed us to sprinkle in Jane Austen easter eggs throughout the paper—try and find them all!

Alt: a woman in a green dress walks down a red carpet with the words welcome to the regency era written below her

media.tenor.com

May 6, 2025 at 1:52 PM

This work is reasonably exciting (to us) as it suggests that this process is relatively insulated from external influence – which begs the question: why? Food for future study, and we hope this work inspires renewed interest in the role of attention and reward in talker-specific phonetic learning.

Alt: a woman with curly hair is sitting in a chair and saying "money please."

media.tenor.com

May 6, 2025 at 1:52 PM

(5/) We then followed up with several alterations: what if we reward at the level of the phoneme instead of at the talker? What if listeners know the value of the reward before they hear the ambiguous word? Results converge on the possibility that reward does not influence phonetic recalibration.

May 6, 2025 at 1:52 PM

(4/) In the first experiment, using a probabilistic reward schedule, we found that listeners learned just as much about the high reward talker as they did for the low reward talker, suggesting that perhaps external value may not factor into talker-specific phonetic recalibration. Curious

May 6, 2025 at 1:52 PM

(3/) In our adaptation of a multitalker LGPL paradigm (randomly intermixed exposure then talker-specific test), we added a small monetary reward following correct trials more often for one talker vs the other. We then measured the amount of learning via a test for each talker. So, what did we find?

May 6, 2025 at 1:52 PM

(2/) We wanted to know if listeners might prioritize learning for one talker that was literally rewarded more often than another: something we might experience in real life. Might we learn more about the speech for those we value? And learn less about the speech for talkers that we value less?

Alt: A close-up of Tyrion Lannister from Game of Thrones with the words "I don't care about your life."

media.tenor.com

May 6, 2025 at 1:52 PM
