Out: safety, responsibility, sociotechnical, fairness, working w fed agencies, authenticating content, watermarking, RN of CBRN, autonomous replication, ctrl of physical systems


AI at Work: Building and Evaluating Trust

Presented by our Trustworthy AI in Law & Society (TRAILS) institute.

Feb 3-4

Washington DC

Open to all!

Details and registration at: trails.gwu.edu/trailscon-2025

Sponsorship details at: trails.gwu.edu/media/556


here, i kind of like kaplan+haenlein and also google. tesler is of course always correct and may really be the right answer.

![Poll results:

McCarthy (1956) "The science and engineering of making intelligent machines: [it is] the computational part of the ability to achieve goals in the world." - 3 votes

Marvin Minsky (1956) "The construction of computer programs that engage in tasks that are currently more satisfactorily performed by human beings because they require...: perceptual learning, memory organization and critical reasoning." - 19 votes

John Searle (1960s) "The appropriately programmed computer with the right inputs and outputs would thereby have a mind in exactly the same sense human beings have minds." - 17 votes

Kaplan & Haenlein (2019) "A system's ability to correctly interpret external data, to learn from such data, and to use those learnings to achieve specific goals and tasks through flexible adaptation." - 60 votes

Google (2020s) "AI is a field of science concerned with building computers and machines that can reason, learn, and act in such a way that would normally require human intelligence or that involves data whose scale exceeds what humans can analyze." - 71 votes

Larry Tesler (1980s, debated) "AI is whatever hasn't been done yet." - 2 votes](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:aexevhwfk5oz5vmv4bljwows/bafkreidv7gy74z2kryzc4brf7jsnaaivnvvbafzkkl43xcuy5hm24gd3z4@jpeg)


i kind of like gardner and legg+hutter fwiw.

![Poll results.

Alfred Binet: Judgment, otherwise called "good sense", "practical sense", "initiative", the faculty of adapting one's self to circumstances ... auto-critique. - 15 votes

David Wechsler: The aggregate or global capacity of the individual to act purposefully, to think rationally, and to deal effectively with his environment. - 26 votes

Lloyd Humphreys: The resultant of the process of acquiring, storing in memory, retrieving, combining, comparing, and using in new contexts information and conceptual skills. - 49 votes

Howard Gardner: [Intelligence] entail[s] a set of skills of problem solving—enabling the individual to resolve genuine problems ... they encounter[] —... and the potential for finding or creating problems. - 41 votes

Robert Sternberg & William Salter: Goal-directed adaptive behavior. - 8 votes

Reuven Feuerstein: The unique propensity of human beings to change or modify the structure of their cognitive functioning to adapt to the changing demands of a life situation. - 23 votes

Shane Legg & Marcus Hutter: Intelligence measures an agent's ability to achieve goals in a wide range of environments. - 10 votes](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:aexevhwfk5oz5vmv4bljwows/bafkreifwo34zcsfgriywotiemyy5fqh2a7bzk6lphisrsclv7aazqukaa4@jpeg)


![modified distracted boyfriend meme. he's looking away from two women, one labeled [pa], one saying [ka], and looking toward another labeled [ta].](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:aexevhwfk5oz5vmv4bljwows/bafkreigz775ovalop7bb6cfber2ucb6xo6dksvpng4iittybffm35sezgm@jpeg)

We show that a carefully constructed temporal contrastive loss leads to effective, multitask RL pretraining.

by Ruijie Zheng +al ICML’24

hal3.name/docs/daume24...

(eos)


There are lots of hate datasets with different nuances. We show how to pretrain on a COT-enhanced dataset to get great performance on data du jour.

by Huy Nghiem +al EMNLP’24

hal3.name/docs/daume24...


We use item response theory to compare the capabilities of 155 people vs 70 chatbots at answering questions, teasing apart complementarities; implications for design.

by Maharshi Gor +al EMNLP’24

hal3.name/docs/daume24...


We find AAL speakers expend significant invisible labor in order to achieve parity of outputs in LT systems; fairness measures don't capture this.

by Jay Cunningham +al ACL’24

hal3.name/docs/daume24...


We show in RL that explicitly minimizing the dormant ratio (fraction of non-active neurons) improves exploration, rewards, etc.

by Guowei Xu, Ruijie Zheng, Yongyuan Liang +al ICLR’24

hal3.name/docs/daume24...


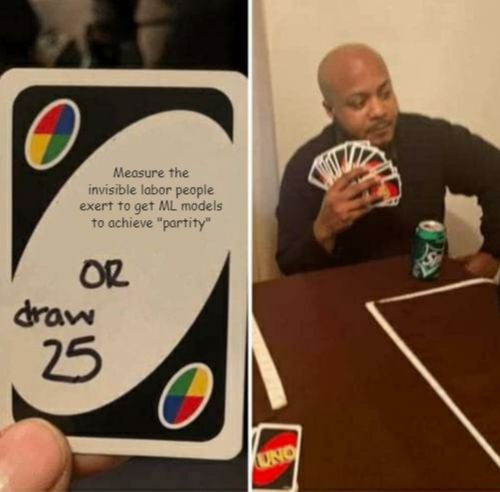

Despite hopes that explanations improve fairness, we see that when biases are hidden behind proxy features, explanations may not help.

by Navita Goyal, Connor Baumler +al IUI’24

hal3.name/docs/daume23...


We show that LLM-style byte-pair encoding can be used to compress action sequences to give “meta-actions” of different lengths, improving RL performance.

by Ruijie Zheng +al ICML’24

hal3.name/docs/daume24...


We observe that anon discussions between revs led to slightly more discussion, less influence of seniority, and no diff in politeness, & are slightly preferred by revs.

by Charvi Rastogi et al PLOS One’24

hal3.name/docs/daume24...


Chatbots routinely prefer candidates with White, female-sounding names over others, even when candidates have identical qualifications.

by Huy Nghiem et al EMNLP’24

hal3.name/docs/daume24...


Should one use chatbots or web search to fact check? Chatbots help more on avg, but people uncritically accept their suggestions much more often.

by Chenglei Si +al NAACL’24

hal3.name/docs/daume24...


When generating instructions for people, we can help them by highlighting potential confabs, AND by suggesting alternatives.

by Lingjun Zhao EMNLP’24

hal3.name/docs/daume24...


also france: you’ve heard of black friday? let me introduce you to


We develop a continuous signing dataset for ASL on a STEM subset of Wikipedia; challenges suggest problems related to fingerspelling detection, sign linking, & translation.

by Kayo Yin et al EMNLP’24

hal3.name/docs/daume24...


This event is only open to individuals who work for a member of AISIC.

📅Register by Dec 2

👉Learn more: go.umd.edu/1u0n


Much work aims to help moderators through automation, much of it focusing on toxicity. Turns out that’s a small part of what volunteer mods want.

by Yang Trista Cao +al EMNLP’24

hal3.name/docs/daume24...


Authors overestimate the chances their papers'll be accepted; co-auths disagree about paper value about as much as auth/revs.

by Charvi Rastogi +al PLOS One'24

hal3.name/docs/daume24...
