arxiv.org/pdf/2407.04295

The stream will be recorded—catch it later if you can't join live! 🚀

bsky.app/profile/yoav...

Did you look at pre-post-training models?

(show some hyphen love ❤️)

Models that learn from feedback train on their own outputs, so you see performance 📈 but language diversity 📉. We show that if you couple comprehension and generation you learn faster 🏎️ AND get richer language!

arxiv.org/abs/2408.15992

Demo and video ⬇ + in EMNLP!

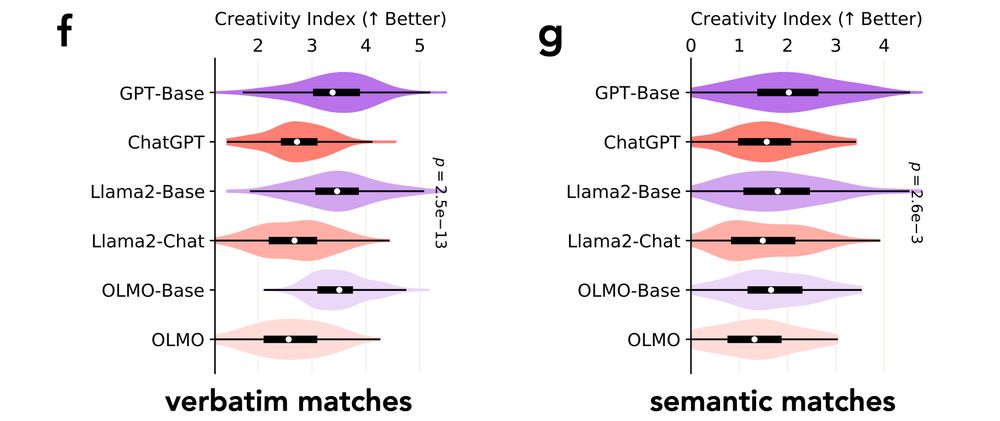

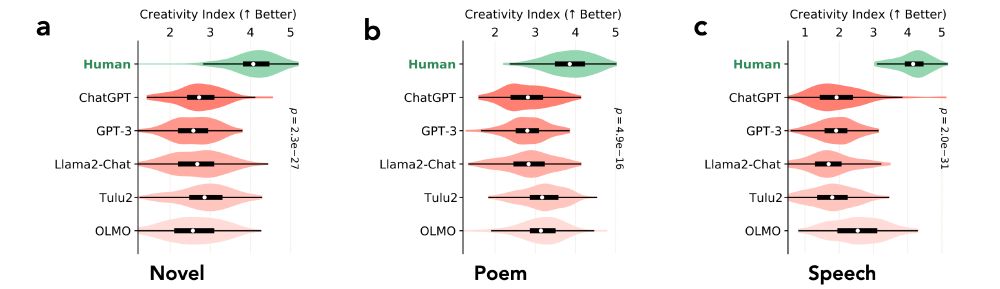

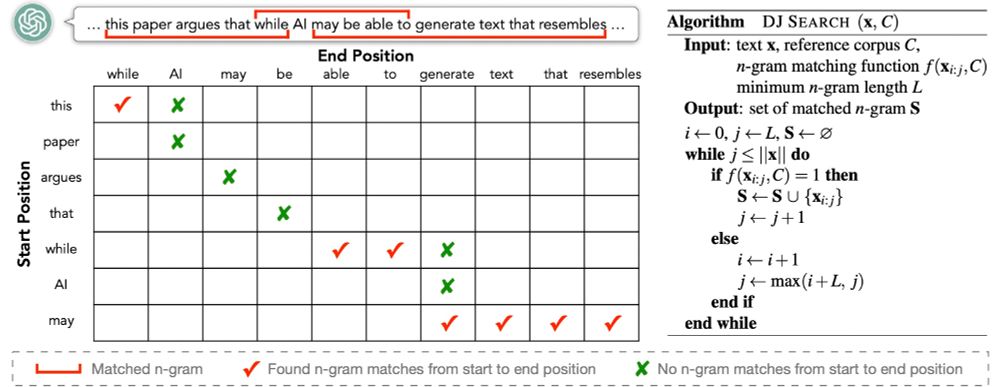

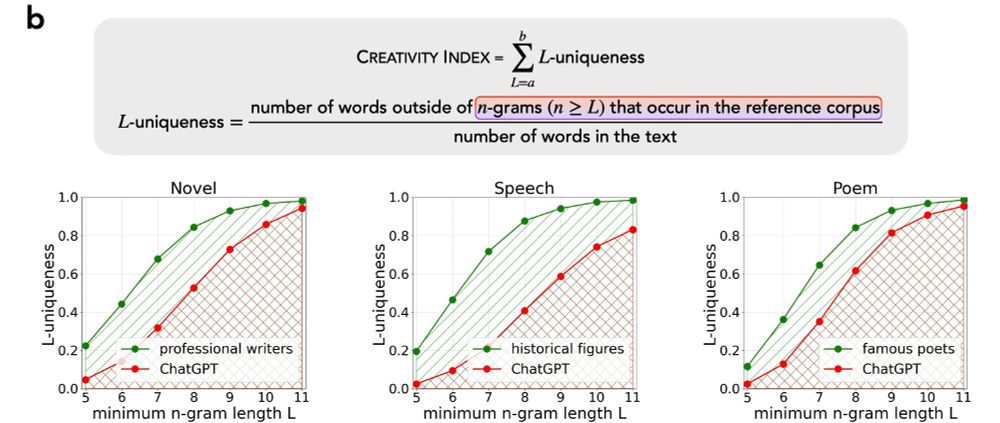

The CREATIVITY INDEX is then defined as the area under the L-uniqueness curve across a range of minimum n-gram lengths L.
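The definition above leaves L-uniqueness implicit. Here is a minimal sketch, assuming that L-uniqueness is the fraction of a text's tokens that are not covered by any n-gram of length at least L that also appears in a reference corpus. It approximates the "area" by a unit-step sum over integer L. The function names (`build_reference_ngrams`, `l_uniqueness`, `creativity_index`), the exact-match rule, and the whitespace-style tokens are illustrative assumptions, not the paper's implementation.

```python
from typing import List, Sequence, Set, Tuple

NGram = Tuple[str, ...]


def build_reference_ngrams(corpus: Sequence[Sequence[str]], min_n: int, max_n: int) -> Set[NGram]:
    """Hypothetical helper: collect every n-gram (min_n <= n <= max_n) in the reference corpus."""
    refs: Set[NGram] = set()
    for doc in corpus:
        for size in range(min_n, max_n + 1):
            for start in range(len(doc) - size + 1):
                refs.add(tuple(doc[start:start + size]))
    return refs


def l_uniqueness(tokens: Sequence[str], refs: Set[NGram], L: int, max_n: int) -> float:
    """Assumed definition: fraction of tokens NOT covered by any matched n-gram with L <= n <= max_n."""
    n = len(tokens)
    if n == 0:
        return 0.0
    covered: List[bool] = [False] * n
    for size in range(L, min(max_n, n) + 1):
        for start in range(n - size + 1):
            if tuple(tokens[start:start + size]) in refs:
                # Mark every token inside the matched span as covered.
                for i in range(start, start + size):
                    covered[i] = True
    return 1.0 - sum(covered) / n


def creativity_index(tokens: Sequence[str], refs: Set[NGram], min_L: int, max_L: int) -> float:
    """Area under the L-uniqueness curve, approximated as a sum over integer L in [min_L, max_L]."""
    return sum(l_uniqueness(tokens, refs, L, max_L) for L in range(min_L, max_L + 1))


if __name__ == "__main__":
    reference = [["the", "cat", "sat", "on", "the", "mat"]]
    refs = build_reference_ngrams(reference, min_n=2, max_n=3)
    text = ["the", "cat", "sat", "on", "a", "hat"]
    print(creativity_index(text, refs, min_L=2, max_L=3))
```

In the usage example, the first four tokens are covered at both L = 2 and L = 3, so each L-uniqueness value is 1/3 and the index comes out to roughly 0.67. A text sharing no n-grams with the reference would instead score one per L value. Real setups would swap the toy corpus for a large pretraining-scale index and might use a different matching criterion.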