📝 "Localization by design via semantic dropout masks"

Many recent works try to localize model behaviors to specific parameters and intervene on them. Acknowledging how hard this is to do after training, several works have instead tried to train models that allow localization by design.

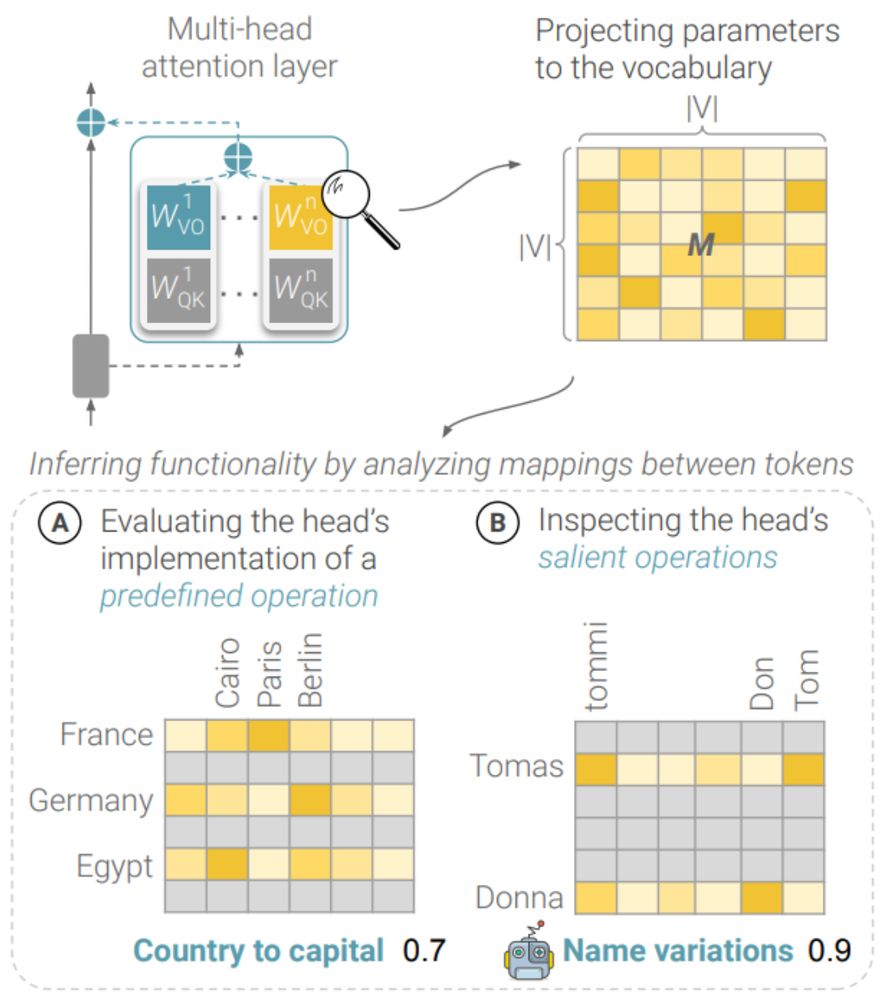

We present an efficient framework – MAPS – for inferring the functionality of attention heads in LLMs ✨directly from their parameters✨

A new preprint with Amit Elhelo 🧵 (1/10)
