https://cmp.felk.cvut.cz/~toliageo

Torsten Sattler, Paul-Edouard Sarlin, Vicky Kalogeiton, Spyros Gidaris, Anna Kukleva, and Lukas Neumann.

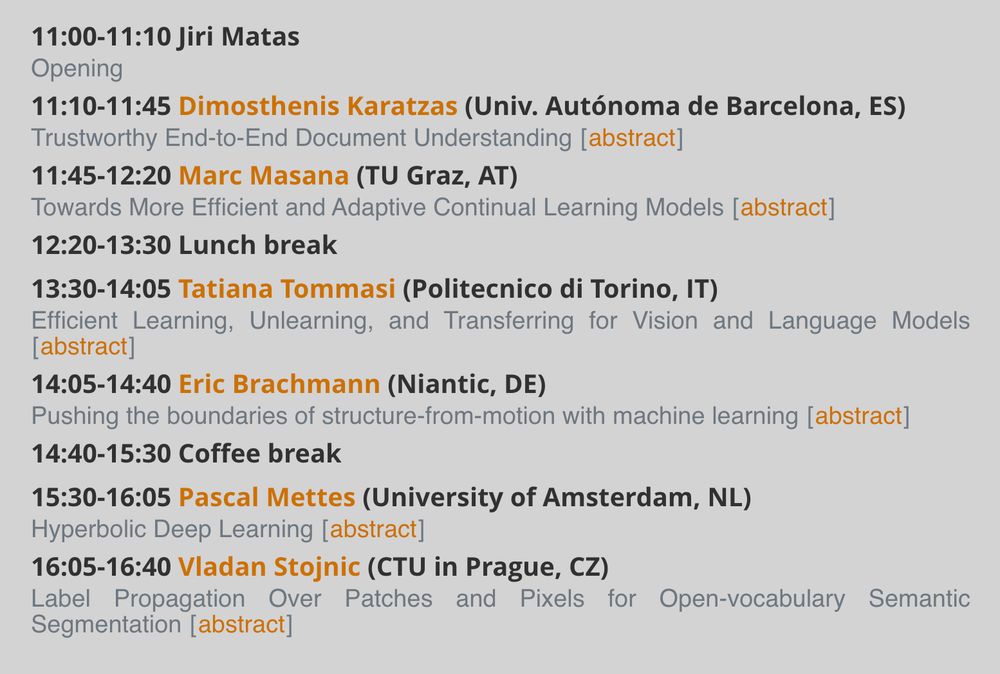

On Thursday Oct 9, 11:00-17:00.

cmp.felk.cvut.cz/colloquium/

