algorithmic.ink

https://x.com/gottapatchemall

There are some avenues here though, e.g. coexpressing with another, less sensitive channelrhodopsin. And I guess if you have to choose between attenuating or amplifying light from the environment, it’s easier to attenuate

Re AAV vs lipofection - best guess is that it’s to do with higher and more variable copy number from lipofection; seems like it’s easy to make cells unhappy when lipofecting certain opsins in

This lowercase science is brought to you by uppercase Science

science.xyz/news/new-fr...

Please send thoughts/feedback. And holler at us if you want to try WAChRs out for your own experiments, we'll send you some

Simple - but a sanity check for being able to do something like optogenetic vision restoration.

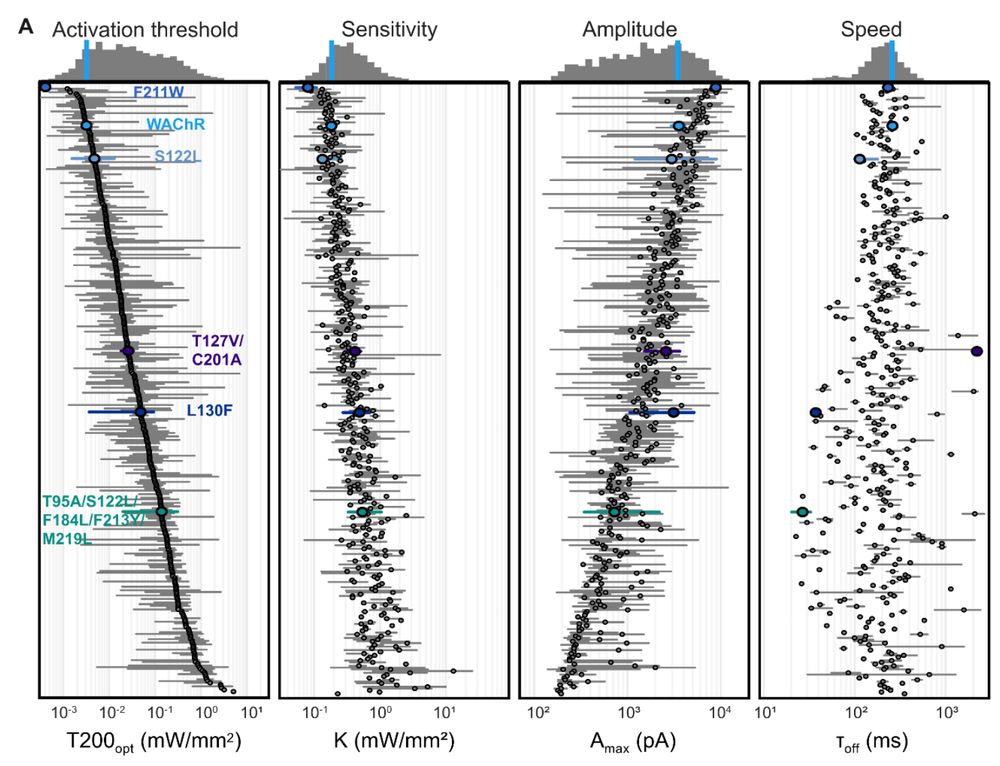

We benchmarked these against some existing optogenetic tools on our manual patch clamp rig ("Patchrig Swayze").

We think they offer superior performance for a lot of applications.

(We named him Al Patchino)

WiChR is a strong, sensitive optogenetic silencer. We applied a mutation that breaks K+ selectivity, which converts it into an excitatory channel. We call this mutant WAChR (pronounced "watcher").
