BS @Stanford

🔗 https://garciakathy.github.io/

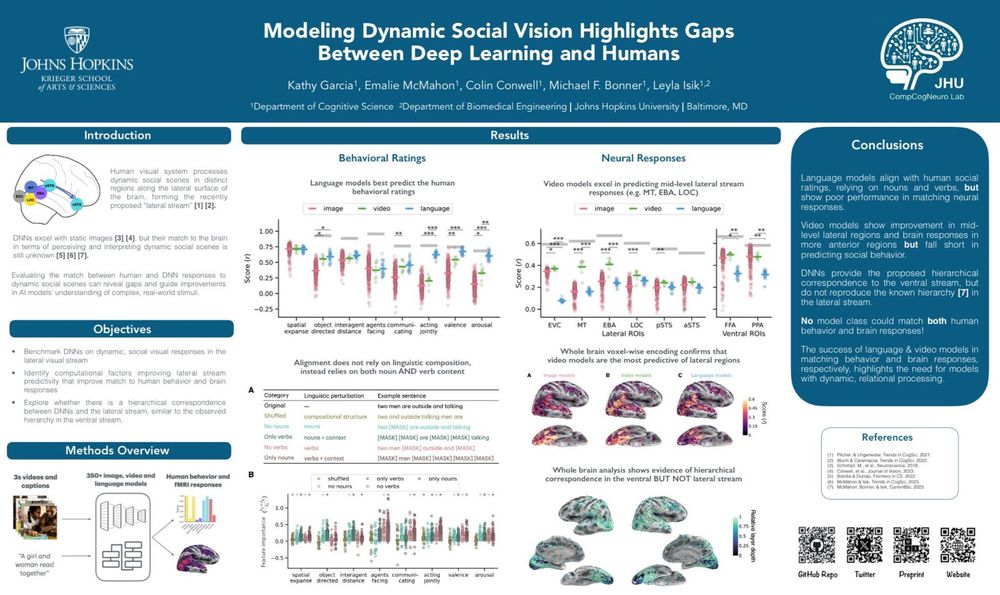

Aligning Video Models with Human Social Judgments via Behavior-Guided Fine-Tuning

We introduce a ~49k-triplet social video dataset, uncover a modality gap (language > video), and close it via novel behavior-guided fine-tuning.

🔗 arxiv.org/abs/2510.01502

📆 Thu, Apr 24, 3:00-5:30: Poster session 2 (#64)

📄 bit.ly/4jISKES... [1/6]