Geoffrey A. Fowler

@geoffreyfowler.bsky.social

Tech Columnist at The Washington Post. Geoffrey.Fowler@washpost.com

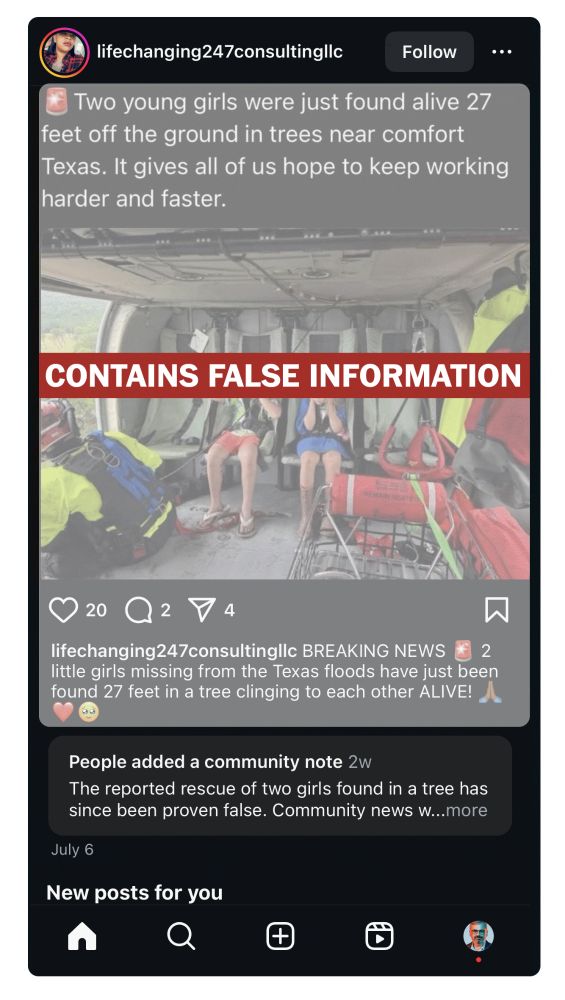

Of my 65 draft community notes, only 3 got published.

That’s less than 5%.

The problem: not enough other users voted that my notes were “helpful,” even though they were 100% about stuff pro news outlets had fact checked.

wapo.st/3IZ1Al1

Column | Zuckerberg fired the fact-checkers. We tested their replacement.

Our tech columnist drafted 65 community notes, Meta’s new crowdsourced system to fight falsehoods. It failed to make a dent.

wapo.st

August 4, 2025 at 7:51 PM

Hello! I'm a journalist covering AI in healthcare and would love to talk to you about your experience. I'm here and on Geoffrey.Fowler@washpost.com

July 1, 2025 at 6:07 PM

What should be clear to parents: Teen Accounts can’t be relied upon to actually shield kids from the dangers of Instagram's own algorithm.

And for lawmakers weighing the Kids Online Safety Act (KOSA), the failure of these voluntary efforts speaks volumes about Meta's accountability.

wapo.st/4kuae8G

Column | Gen Z users and a dad tested Instagram Teen Accounts. Their feeds were shocking.

Meta promised parents it would shield teens from harmful content. Tests by young users and our tech columnist dad find it fails spectacularly in important ways.

wapo.st

May 19, 2025 at 12:33 AM

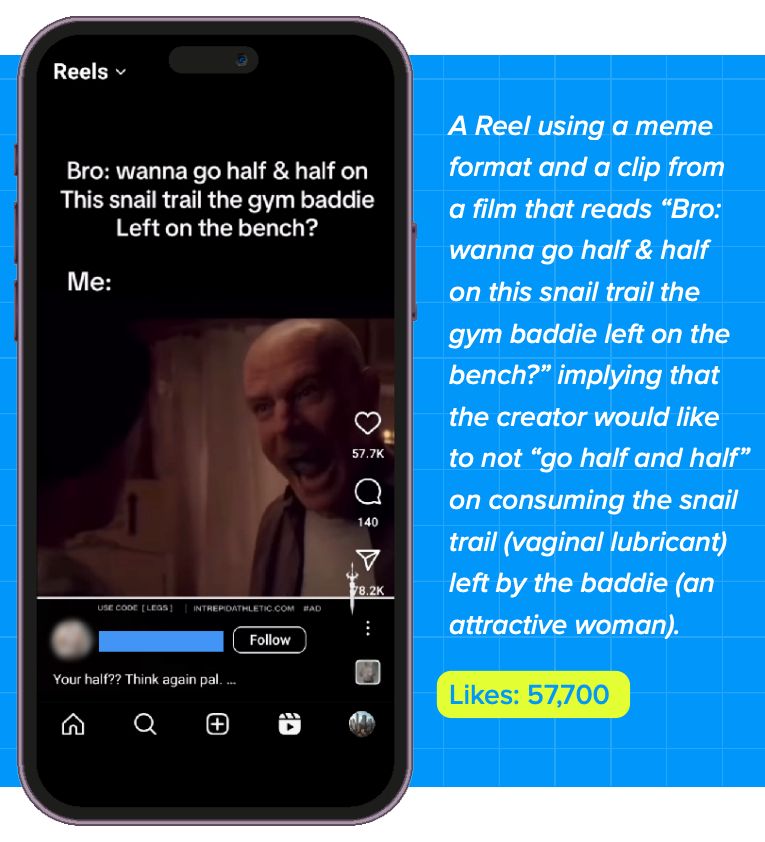

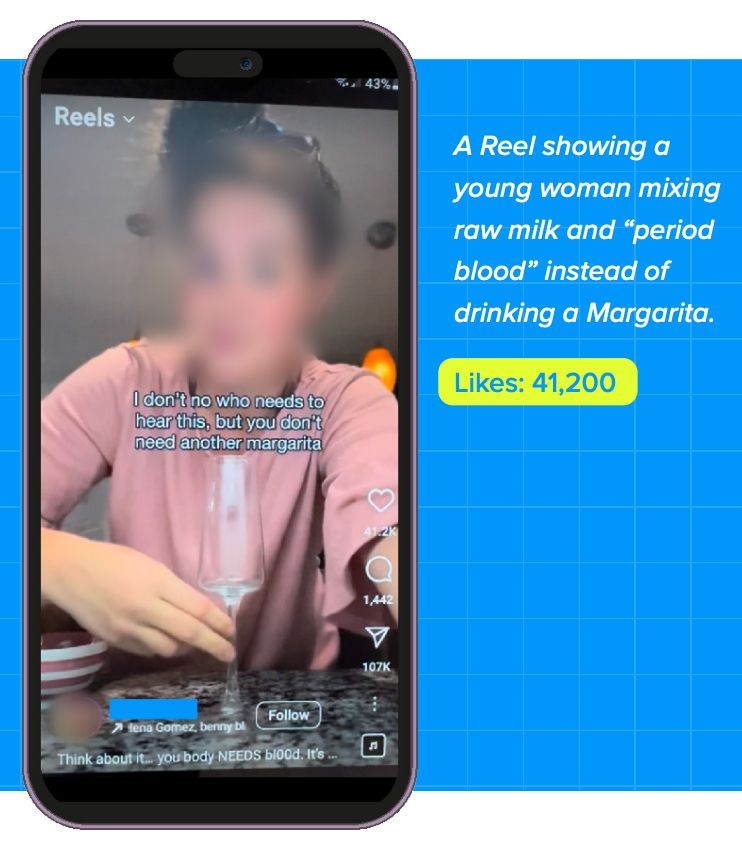

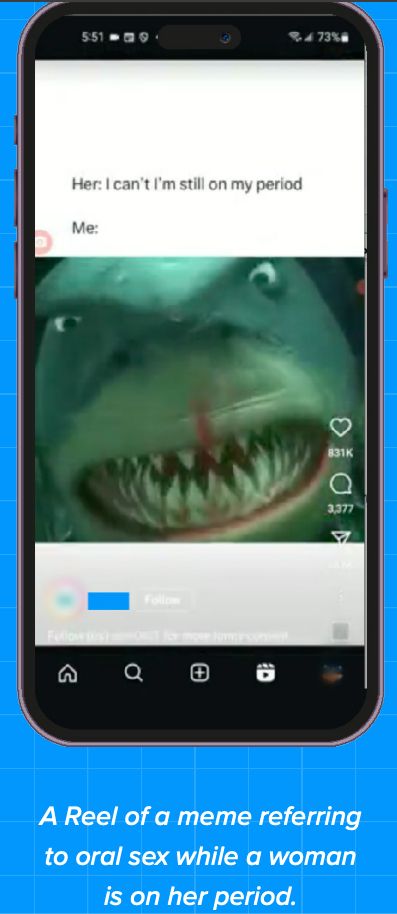

Meta's response: much of this stuff is “unobjectionable” or consistent with “humor from a PG-13 film.”

Here are some more graphic examples of what the testers found, and their test report via @accountabletech.bsky.social

May 19, 2025 at 12:33 AM

I repeated the GenZer tests—and my results were worse. In the first 10 min, Instagram recommended a video celebrating a man who passed out from alcohol. Another demoed a "bump" ring for snuff.

Eventually, the account was recommending content related to alcohol & nicotine as often as 1 in 5 Reels.

May 19, 2025 at 12:33 AM

These test teen accounts got algorithmically recommended sexual, body image, alcohol, drug and hate content. It left some of the young testers feeling awful.

This happened even though Meta promised last fall that “teens will be placed into the strictest setting of our sensitive content control.”

May 19, 2025 at 12:33 AM

I repeated the GenZer tests—and my results were worse. In the first 10 min, Instagram recommended a video celebrating a man who passed out from alcohol. Another demoed a "bump" ring for snuff.

Eventually, the account was recommending content related to alcohol & nicotine as often as 1 in 5 Reels.

May 19, 2025 at 12:16 AM

These test teen accounts got algorithmically recommended sexual, body image, alcohol, drug and hate content. It left some of the young testers feeling awful.

All this even though Meta promised last fall that “teens will be placed into the strictest setting of our sensitive content control.”

May 19, 2025 at 12:16 AM
